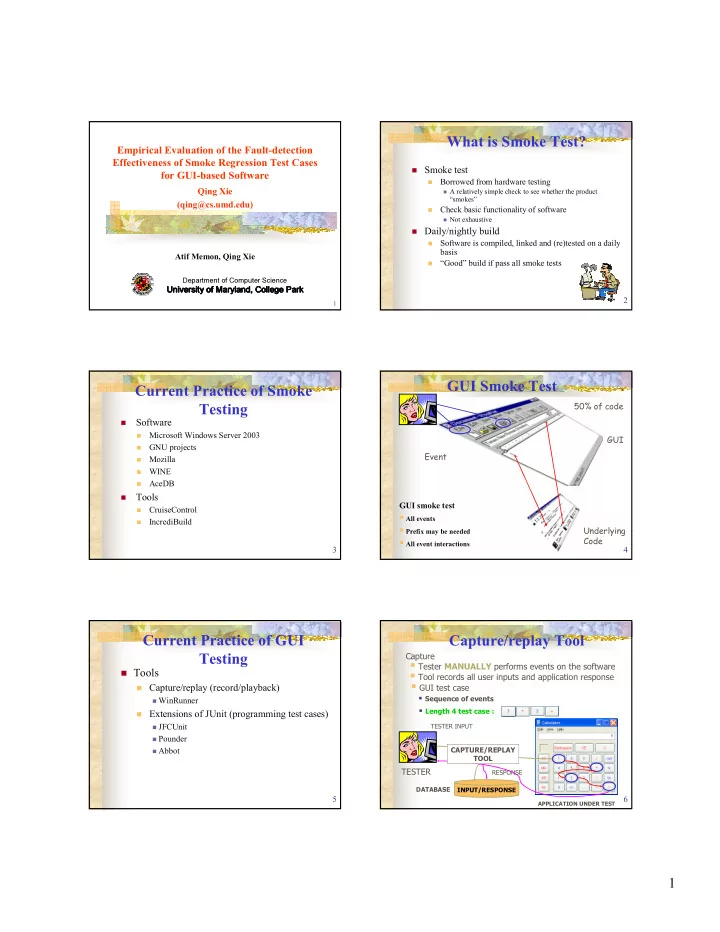

Empirical Evaluation of the Fault-detection Effectiveness of Smoke Regression Test Cases for GUI-based Software

Atif Memon, Qing Xie (qing@cs.umd.edu)

What is a Smoke Test?
- Borrowed from hardware testing
  - A relatively simple check to see whether the product "smokes"
- Checks the basic functionality of the software
  - Not exhaustive
- Daily/nightly build
  - Software is compiled, linked and (re)tested on a daily basis
  - A build is "good" if it passes all smoke tests

GUI Smoke Test
[Figure: GUI events invoke the underlying code; GUI: 50% of code]
- GUI smoke test
  - All events
    - A prefix may be needed
  - All event interactions

Current Practice of Smoke Testing
- Software
  - Microsoft Windows Server 2003
  - GNU projects
  - Mozilla
  - WINE
  - AceDB
- Tools
  - CruiseControl
  - IncrediBuild

Current Practice of GUI Testing
- Tools
  - Capture/replay (record/playback)
    - WinRunner
  - Extensions of JUnit (programming test cases)
    - JFCUnit
    - Pounder
    - Abbot

Capture/replay Tool: Capture
- Tester MANUALLY performs events on the software
- Tool records all user inputs and application responses
- GUI test case
  - Sequence of events
  - Length 4 test case: [figure]
[Figure: tester input passes through the capture/replay tool to the application under test; the response returns to the tester; inputs and responses are stored in the input/response database]
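A capture/replay tool records the tester's inputs together with the application's responses, and later replays those inputs on a modified build and compares responses. The sketch below illustrates that record/compare loop under loudly stated assumptions: `ToyApp`, `CaptureReplayTool`, the `"click"`/`"reset"` events and the string responses are all hypothetical stand-ins, not WinRunner's or any other tool's API.

```python
from dataclasses import dataclass, field


class ToyApp:
    """Hypothetical application under test: each event returns a response string."""

    def __init__(self, buggy: bool = False):
        self.clicks = 0
        self.buggy = buggy  # True simulates a modified build with a seeded fault

    def perform(self, event: str) -> str:
        if event == "click":
            self.clicks += 2 if self.buggy else 1
        elif event == "reset":
            self.clicks = 0
        return f"clicks={self.clicks}"


@dataclass
class CaptureReplayTool:
    # Input/response database: test case name -> [(input event, recorded response)]
    database: dict[str, list[tuple[str, str]]] = field(default_factory=dict)

    def capture(self, name: str, app: ToyApp, events: list[str]) -> None:
        """Perform the tester's events and record each input with its response."""
        self.database[name] = [(event, app.perform(event)) for event in events]

    def replay(self, name: str, app: ToyApp) -> list[str]:
        """Replay stored inputs on a (modified) app and report response mismatches."""
        mismatches = []
        for step, (event, expected) in enumerate(self.database[name]):
            actual = app.perform(event)
            if actual != expected:
                mismatches.append(f"step {step} ({event}): expected {expected}, got {actual}")
        return mismatches


tool = CaptureReplayTool()
tool.capture("tc1", ToyApp(), ["click", "click", "reset", "click"])  # a length 4 test case
clean_run = tool.replay("tc1", ToyApp())             # unchanged build: no mismatches
faulty_run = tool.replay("tc1", ToyApp(buggy=True))  # modified build: mismatches reported
print(clean_run)
print(faulty_run)
```

Here the "expected response" is simply whatever was recorded during capture, which is why the tester must perform every event manually at least once; the compare step is a plain string equality, a simplification of real response checking.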

Capture/replay Tool: Replay
- Retrieves test case
- Replays it on the modified application
- Gets the actual response
- Verifies it against the expected response
[Figure: the capture/replay tool replays input from the input/response database on the application under test and compares the expected response with the actual response]

What is Needed?
- Goal: automatically test the GUI every night
- Model of GUI
  - Event Flow Graph
  - State of GUI
  - Obtained automatically using a reverse engineering technique
- Use the model
  - Smoke test case generator
  - Expected state generator
  - Test executor
- * Fully automated

Smoke Tests for GUIs
- GUI test case
  - Sequence of events
- Test case length
  - Length 1: all events in the GUI
  - Length 2: all possible test cases of the form <e_i, e_j>, where event e_j can be performed immediately after event e_i
- Purpose is to test basic functionality quickly
  - Execute all GUI events and event interactions
- Smoke test suite
  - All length 1 and length 2 test cases
  - Necessary prefix

GUI Model: Event Flow Graph
[Figure: event-flow graph for a menu bar with File, Edit and Help; Open … and Save appear under File]
- Length 1 example: FILE
- Length 2 example: FILE SAVE
- Definition: Event e_Y follows e_X iff e_Y can be performed immediately after e_X.

Component View of DART
DART: Daily Automated Regression Tester
- Reverse Engineering Tool: traverses the GUI and extracts the GUI model (Event Flow Graph and State of GUI)
- Test Case Generator: creates smoke test cases from the GUI model
- Expected State Generator: generates the expected state as correct behavior
- Code Instrumenter: instruments the code to get test coverage reports
- Test Executor: executes smoke test cases automatically and generates error reports

State of GUI
- A GUI consists of objects, each with property values, e.g.:
  - Form: Window State = wsNormal, Width = 1088, AutoScroll = TRUE
  - Button: Caption = Cancel, Enabled = TRUE, Visible = TRUE, Height = 65
  - Label: Caption = "Files of type:", Align = alNone, Color = clBtnFace, Font = (tFont)
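The smoke test suite defined on the "Smoke Tests for GUIs" slide (every event, plus every pair <e_i, e_j> where e_j follows e_i, each with a necessary prefix) can be generated mechanically from an event-flow graph. The sketch below is not DART's generator: the EFG encodes a hypothetical version of the File/Edit/Help example, it assumes only the top-level menus are available at start-up, and it picks the shortest prefix by breadth-first search, a choice the slides do not specify.

```python
from collections import deque

# Hypothetical EFG for the File/Edit/Help menu example.
# EFG[x] lists every event y that "follows" x (y can be performed right after x).
EFG: dict[str, list[str]] = {
    "File": ["File", "Edit", "Help", "Open", "Save"],
    "Edit": ["File", "Edit", "Help"],
    "Help": ["File", "Edit", "Help"],
    "Open": ["File", "Edit", "Help"],
    "Save": ["File", "Edit", "Help"],
}
# Assumption: only the top-level menus are available when the GUI starts.
INITIAL = ["File", "Edit", "Help"]


def shortest_prefix(efg, initial, target):
    """Breadth-first search for the shortest event sequence after which `target`
    can be performed. Returns [] if `target` is available at start-up."""
    if target in initial:
        return []
    queue = deque([e] for e in initial)
    seen = set(initial)
    while queue:
        path = queue.popleft()
        for nxt in efg[path[-1]]:
            if nxt == target:
                return path
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    raise ValueError(f"event {target!r} is unreachable")


def smoke_suite(efg, initial):
    """All length 1 and length 2 smoke test cases as (prefix, test events) pairs."""
    suite = [(shortest_prefix(efg, initial, e), [e]) for e in efg]  # every event
    for ei, followers in efg.items():  # every pair <ei, ej> with ej following ei
        prefix = shortest_prefix(efg, initial, ei)
        suite.extend((prefix, [ei, ej]) for ej in followers)
    return suite


suite = smoke_suite(EFG, INITIAL)
print(len(suite))  # 22 (5 length 1 cases + 17 length 2 cases)
for prefix, events in suite[:5]:
    print(prefix, events)
```

For the length 1 case `Save`, the computed prefix is `["File"]`, so the executed sequence is FILE SAVE, matching the example on the Event Flow Graph slide.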

[Figure: DART process. Setup: EFG and State of GUI. Automated generation: test cases and expected state. Iterative: automated execution and automated reporting (error report, coverage report); developers fix bugs.]

Experiments
- Fault detection ability
  - Total faults detected
  - Relationship between fault detection ability and smoke test case length
- Code coverage
  - Relationship between code coverage and smoke test cases
  - Unexecuted code
- Cost

Experimental Process
- Choose subject applications
- Generate smoke test cases with expected state
- Seed faults
- Execute test cases
  - Compare the actual GUI state to the expected state
- Measure
  - Number of faults detected
  - Code coverage
  - Time
  - Space

Subject Applications

| Subject Application | Windows | Widgets | LOC | Classes | Methods | Branches |
|---|---|---|---|---|---|---|
| TerpWord | 11 | 132 | 4893 | 104 | 236 | 452 |
| TerpSpreadSheet | 9 | 165 | 12791 | 125 | 579 | 1521 |
| TerpPaint | 10 | 220 | 18376 | 219 | 644 | 1277 |
| TerpCalc | 1 | 92 | 9916 | 141 | 446 | 1306 |
| TOTAL | 31 | 609 | 45976 | 589 | 1905 | 4556 |

Test Case Generation

Potential = possible test cases of each length; Generated = test cases actually generated.

| Subject Application | Potential, length 1 | Potential, length 2 | Potential, length 3 | Generated, length 1 | Generated, length 2 | Generated, length 3 | Generated, total |
|---|---|---|---|---|---|---|---|
| TerpWord | 126 | 1140 | 12461 | 126 | 1140 | 3880 | 5146 |
| TerpSpreadSheet | 162 | 2742 | 56076 | 162 | 2742 | 2318 | 5222 |
| TerpPaint | 215 | 8077 | 502133 | 215 | 8077 | 0 | 8292 |
| TerpCalc | 87 | 7366 | 623702 | 87 | 7366 | 0 | 7453 |
| TOTAL | 590 | 19325 | 1194372 | 590 | 19325 | 6198 | 26113 |

Fault Seeding
- Seed 200 faults in each application
- Create 200 versions of each application
  - Exactly one fault in each version
- History-based
  - Drawn from a bug tracking tool (Bugzilla)
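The "Potential" columns of the Test Case Generation table grow very quickly with length (TerpCalc: 87, 7366, 623702). Assuming that a potential test case of length n is any sequence of n events in which each event follows the previous one in the EFG, these counts are walk counts in the graph, computable by dynamic programming. This is a sketch under that assumption, reusing a hypothetical File/Edit/Help EFG rather than any subject application's real EFG.

```python
# Hypothetical EFG (File/Edit/Help example): EFG[x] = events that follow x.
EFG: dict[str, list[str]] = {
    "File": ["File", "Edit", "Help", "Open", "Save"],
    "Edit": ["File", "Edit", "Help"],
    "Help": ["File", "Edit", "Help"],
    "Open": ["File", "Edit", "Help"],
    "Save": ["File", "Edit", "Help"],
}


def count_sequences(efg: dict[str, list[str]], length: int) -> int:
    """Count event sequences of `length` events in which every event follows
    the one before it in the EFG (i.e. walks with length - 1 edges)."""
    # ways[e] = number of valid sequences of the current length that end at e
    ways = {e: 1 for e in efg}  # length 1: every event by itself
    for _ in range(length - 1):
        extended = {e: 0 for e in efg}
        for x, count in ways.items():
            for y in efg[x]:  # append any event y that follows x
                extended[y] += count
        ways = extended
    return sum(ways.values())


for n in (1, 2, 3, 4):
    print(n, count_sequences(EFG, n))
```

Even this five-event graph yields 5, 17, 61 and 217 sequences for lengths 1 to 4. Under the stated assumption, length 2 counts equal the number of EFG edges, and each additional event multiplies the count by roughly the average number of followers.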

Test Case Execution
- Execute all test cases (5000+ per application) on all 200 versions of the 4 subject applications
  - 5000 × 200 × 4 = 4,000,000+ runs
- Pentium 4, 2.2 GHz, 256 MB RAM
- Compare actual and expected state
- Report mismatches and crashes

Total Faults Detected
[Figure]

Smoke Tests and Code Coverage
[Figure]

Faults Detected vs. Test Case Length
[Figure: TerpCalc]

Unexecuted Code
- Some mouse/keyboard events not generated (40%)
  - Event handlers (e.g., right-click) not executed
- Exceptions not raised (30%)
  - Accounted for a large percentage of missed code
- Unable to execute code related to some widgets (10%)
  - e.g., the close button in all windows
- Controlled environment (10%)
  - Environment variables are reset before each run
  - Related code is not executed (e.g., the list of recently accessed files)
- Some code requires sequences longer than 2 events (10%)

Conclusions
- Short GUI smoke tests are effective
  - There are classes of faults that cannot be detected
- Short smoke tests execute a large percentage of code
- The smoke testing process is feasible in terms of time and storage space
- Future work
  - Increase code coverage
  - Increase completeness of the expected state generator
  - Combine GUI-based and code-based smoke tests
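The execution step compares the actual GUI state with the expected state after each run and reports mismatches and crashes; with exactly one seeded fault per version, a fault counts as detected when any run on that version fails. A minimal sketch, assuming a GUI state is a flat map from object name to property values (borrowing names from the State of GUI slide) and that a crashed run is recorded as `None`; `state_mismatches`, `fault_detected` and all sample data are hypothetical, not DART's executor.

```python
from typing import Optional

# A GUI state maps each object to its properties, as on the "State of GUI" slide.
State = dict[str, dict[str, str]]
# One test-case run: None if the application crashed, else (expected, actual).
Run = Optional[tuple[State, State]]


def state_mismatches(expected: State, actual: State) -> list[str]:
    """List each expected (object, property) whose actual value differs or is missing."""
    diffs = []
    for obj, props in expected.items():
        actual_props = actual.get(obj, {})
        for prop, value in props.items():
            got = actual_props.get(prop)
            if got != value:
                diffs.append(f"{obj}.{prop}: expected {value!r}, got {got!r}")
    return diffs


def fault_detected(runs: list[Run]) -> bool:
    """A version's seeded fault counts as detected if any run crashed or mismatched."""
    return any(run is None or bool(state_mismatches(*run)) for run in runs)


expected = {"Button": {"Caption": "Cancel", "Enabled": "TRUE", "Visible": "TRUE"}}
same = {"Button": {"Caption": "Cancel", "Enabled": "TRUE", "Visible": "TRUE"}}
wrong = {"Button": {"Caption": "Cancel", "Enabled": "FALSE", "Visible": "TRUE"}}

# Hypothetical results for three faulty versions (one seeded fault each).
versions = [
    [(expected, same), (expected, same)],   # fault missed
    [(expected, same), (expected, wrong)],  # detected by a state mismatch
    [(expected, same), None],               # detected by a crash
]
detected = sum(fault_detected(runs) for runs in versions)
print(f"faults detected: {detected} of {len(versions)}")
print(state_mismatches(expected, wrong))
print("runs in the experiment:", 5000 * 200 * 4)
```

Only properties present in the expected state are checked, so the completeness of the expected state generator bounds what this comparison can catch.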

THANK YOU
http://guitar.cs.umd.edu
