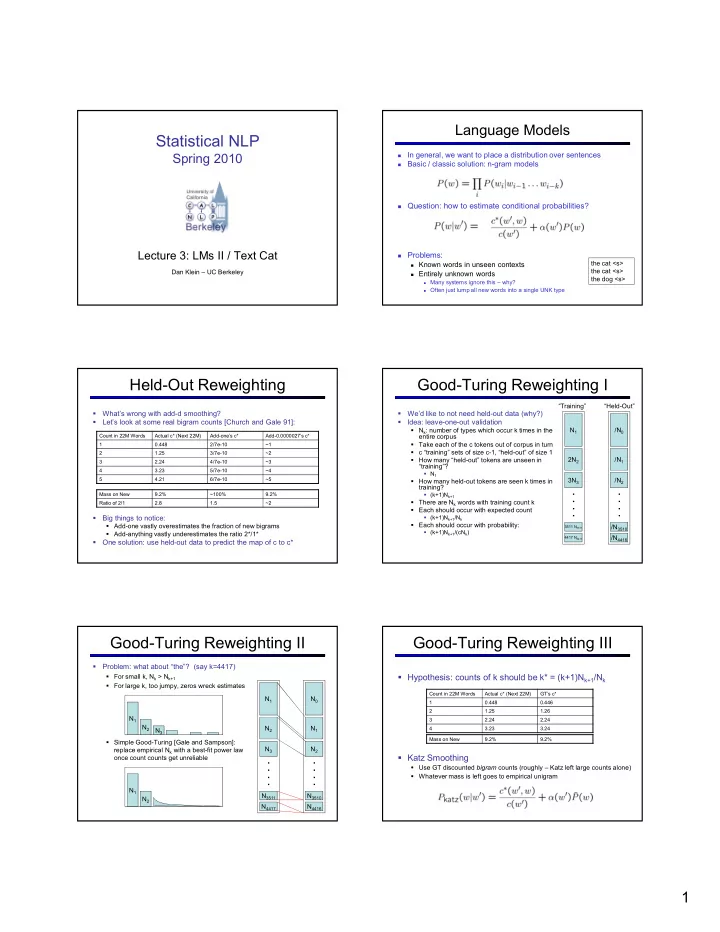

Statistical NLP, Spring 2010
Lecture 3: LMs II / Text Cat
Dan Klein, UC Berkeley

Language Models

- In general, we want to place a distribution over sentences
- Basic / classic solution: n-gram models
- Question: how to estimate conditional probabilities?
- Problems:
  - Known words in unseen contexts
  - Entirely unknown words
    - Many systems ignore this. Why?
    - Often just lump all new words into a single UNK type

Held-Out Reweighting

- What's wrong with add-d smoothing?
- Let's look at some real bigram counts [Church and Gale 91]:

| Count in 22M Words | Actual $c^*$ (Next 22M) | Add-one's $c^*$ | Add-0.0000027's $c^*$ |
|---|---|---|---|
| 1 | 0.448 | 2/7e-10 | ~1 |
| 2 | 1.25 | 3/7e-10 | ~2 |
| 3 | 2.24 | 4/7e-10 | ~3 |
| 4 | 3.23 | 5/7e-10 | ~4 |
| 5 | 4.21 | 6/7e-10 | ~5 |
| Mass on New | 9.2% | ~100% | 9.2% |
| Ratio of 2/1 | 2.8 | 1.5 | ~2 |

- Big things to notice:
  - Add-one vastly overestimates the fraction of new bigrams
  - Add-anything vastly underestimates the ratio $2^*/1^*$
- One solution: use held-out data to predict the map of $c$ to $c^*$

Good-Turing Reweighting I

- We'd like to not need held-out data (why?)
- Idea: leave-one-out validation
  - $N_k$: number of types which occur $k$ times in the entire corpus
  - Take each of the $c$ tokens out of the corpus in turn
  - $c$ "training" sets of size $c-1$, "held-out" of size 1
  - How many "held-out" tokens are unseen in "training"?
    - $N_1$
  - How many held-out tokens are seen $k$ times in training?
    - $(k+1)N_{k+1}$
  - There are $N_k$ words with training count $k$
  - Each should occur with expected count
    - $(k+1)N_{k+1}/N_k$
  - Each should occur with probability:
    - $(k+1)N_{k+1}/(cN_k)$

[Figure: "Training" vs. "Held-Out" count-of-count buckets, with ratios $N_1/N_0$, $2N_2/N_1$, $3N_3/N_2$, ..., $3511\,N_{3511}/N_{3510}$, $4417\,N_{4417}/N_{4416}$]

Good-Turing Reweighting II

- Problem: what about "the"? (say $k = 4417$)
  - For small $k$, $N_k > N_{k+1}$
  - For large $k$, too jumpy, zeros wreck estimates
- Simple Good-Turing [Gale and Sampson]: replace empirical $N_k$ with a best-fit power law once count counts get unreliable

[Figure: count-of-count buckets $N_1, N_2, N_3, \ldots, N_{3511}, \ldots, N_{4417}$ paired with $N_0, N_1, N_2, \ldots, N_{3510}, \ldots, N_{4416}$]

Good-Turing Reweighting III

- Hypothesis: counts of $k$ should be $k^* = (k+1)N_{k+1}/N_k$

| Count in 22M Words | Actual $c^*$ (Next 22M) | GT's $c^*$ |
|---|---|---|
| 1 | 0.448 | 0.446 |
| 2 | 1.25 | 1.26 |
| 3 | 2.24 | 2.24 |
| 4 | 3.23 | 3.24 |
| Mass on New | 9.2% | 9.2% |

- Katz Smoothing
  - Use GT-discounted bigram counts (roughly; Katz left large counts alone)
  - Whatever mass is left goes to the empirical unigram
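
The Good-Turing hypothesis from these slides, that an item seen k times should be treated as if it had count k* = (k+1)N_{k+1}/N_k, with mass N_1/c left for unseen items, can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's code: the function name `good_turing_counts` and the toy bigram counts are assumptions made for the example. It uses the raw empirical N_k and simply skips any k where N_{k+1} is zero, which is exactly the situation Simple Good-Turing handles by smoothing N_k.

```python
from collections import Counter


def good_turing_counts(counts):
    """Return ({k: k*}, p_unseen) for a dict mapping item -> raw count.

    k* = (k + 1) * N_{k+1} / N_k, where N_k is the number of types seen k times.
    p_unseen = N_1 / c, where c is the total number of tokens.
    Hypothetical helper: uses unsmoothed N_k and skips k when N_{k+1} == 0.
    """
    count_of_counts = Counter(counts.values())  # N_k
    total_tokens = sum(counts.values())         # c
    adjusted = {}
    for k, n_k in count_of_counts.items():
        n_k_plus_1 = count_of_counts.get(k + 1, 0)
        if n_k_plus_1 > 0:
            adjusted[k] = (k + 1) * n_k_plus_1 / n_k
    p_unseen = count_of_counts.get(1, 0) / total_tokens
    return adjusted, p_unseen


# Toy bigram counts (made up for illustration): N_1 = 3, N_2 = 2, N_3 = 1, c = 10.
toy_counts = {
    ("the", "cat"): 1, ("cat", "sat"): 1, ("sat", "on"): 1,
    ("on", "the"): 2, ("the", "dog"): 2,
    ("the", "mat"): 3,
}
adjusted, p_unseen = good_turing_counts(toy_counts)
print(adjusted)   # adjusted counts for k = 1 and k = 2 only
print(p_unseen)   # mass reserved for unseen bigrams
```

On the toy data the estimate for k = 3 is missing because N_4 = 0. On real data the same gap appears at large k, which is why the empirical count counts are only trusted for small k.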

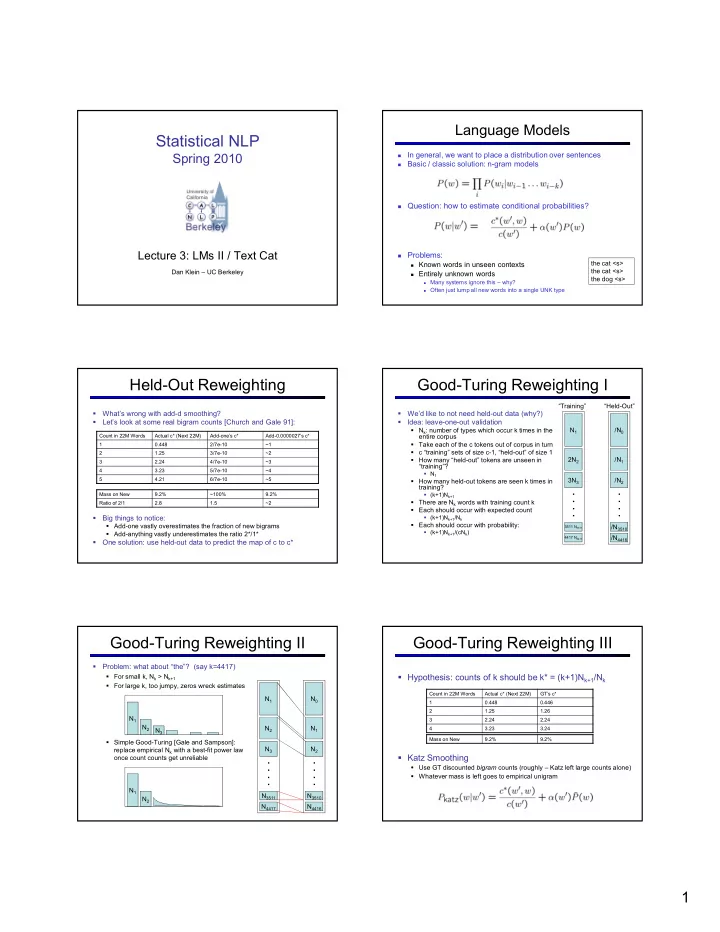

Kneser-Ney: Discounting

- Kneser-Ney smoothing: very successful estimator using two ideas
- Idea 1: observed n-grams occur more in training than they will later:

| Count in 22M Words | Avg in Next 22M | Good-Turing $c^*$ |
|---|---|---|
| 1 | 0.448 | 0.446 |
| 2 | 1.25 | 1.26 |
| 3 | 2.24 | 2.24 |
| 4 | 3.23 | 3.24 |

- Absolute Discounting
  - Save ourselves some time and just subtract 0.75 (or some $d$)
  - Maybe have a separate value of $d$ for very low counts

Kneser-Ney: Continuation

- Idea 2: Type-based fertility rather than token counts
  - Shannon game: There was an unexpected ____?
    - delay?
    - Francisco?
  - "Francisco" is more common than "delay"
  - ... but "Francisco" always follows "San"
  - ... so it's less "fertile"
- Solution: type-continuation probabilities
  - In the back-off model, we don't want the probability of $w$ as a unigram
  - Instead, want the probability that $w$ is allowed in this novel context
  - For each word, count the number of bigram types it completes

Kneser-Ney

- Kneser-Ney smoothing combines these two ideas
  - Absolute discounting
  - Lower-order continuation probabilities
- KN smoothing repeatedly proven effective (ASR, MT, ...)
- [Teh, 2006] shows KN smoothing is a kind of approximate inference in a hierarchical Pitman-Yor process (and better approximations are superior to basic KN)

What Actually Works?

- Trigrams and beyond:
  - Unigrams, bigrams generally useless
  - Trigrams much better (when there's enough data)
  - 4-, 5-grams really useful in MT, but not so much for speech
- Discounting
  - Absolute discounting, Good-Turing, held-out estimation, Witten-Bell
- Context counting
  - Kneser-Ney construction of lower-order models
- See [Chen+Goodman] reading for tons of graphs!

[Graphs from Joshua Goodman]

Data >> Method?

- Having more data is better...

[Figure: Katz vs. KN curves for training sizes 100,000; 1,000,000; 10,000,000; and all]

- ... but so is using a better estimator
- Another issue: N > 3 has huge costs in speech recognizers

Tons of Data?

[Figure from Brants et al, 2007]
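
The two Kneser-Ney ideas from these slides, absolute discounting and type-based continuation probabilities, can be combined in a small bigram sketch. The slides do not show the formula in this extraction, so the code assumes the commonly used interpolated form: P(w | v) = max(c(v,w) - d, 0)/c(v) + (d * |{w' : c(v,w') > 0}| / c(v)) * P_cont(w), with P_cont(w) equal to the number of distinct bigram types that w completes divided by the total number of bigram types. The function name `train_kn_bigram`, the toy token stream, and the choice to fall back to P_cont alone for an unseen context are all assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_kn_bigram(tokens, d=0.75):
    """Return prob(w, v) estimating P(w | v) with interpolated Kneser-Ney (bigram sketch)."""
    bigrams = Counter(zip(tokens, tokens[1:]))  # c(v, w)
    context_total = Counter()                   # c(v): tokens observed after v
    followers = defaultdict(set)                # distinct words seen after v
    preceders = defaultdict(set)                # distinct words seen before w
    for (v, w), c in bigrams.items():
        context_total[v] += c
        followers[v].add(w)
        preceders[w].add(v)
    n_bigram_types = len(bigrams)

    def prob(w, v):
        # Continuation probability: share of bigram types that w completes.
        p_cont = len(preceders.get(w, ())) / n_bigram_types
        if context_total[v] == 0:
            # Assumption: for an unseen context, use the continuation distribution alone.
            return p_cont
        discounted = max(bigrams[(v, w)] - d, 0) / context_total[v]
        # Mass removed by discounting, redistributed via p_cont.
        backoff_weight = d * len(followers[v]) / context_total[v]
        return discounted + backoff_weight * p_cont

    return prob


# Toy stream (made up): "francisco" is more frequent than "delay",
# but it only ever follows "san", so it completes fewer bigram types.
tokens = ("san francisco san francisco san francisco san francisco "
          "a delay the delay one delay").split()
prob = train_kn_bigram(tokens)
print(prob("delay", "unexpected"))      # 0.375
print(prob("francisco", "unexpected"))  # 0.125
```

In the novel context "unexpected", the more frequent "francisco" gets less probability than "delay", which is the Shannon-game behavior the continuation slide argues for. For a seen context, the discounted term and the redistributed mass sum to one over all words that appear as the second element of some bigram.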
