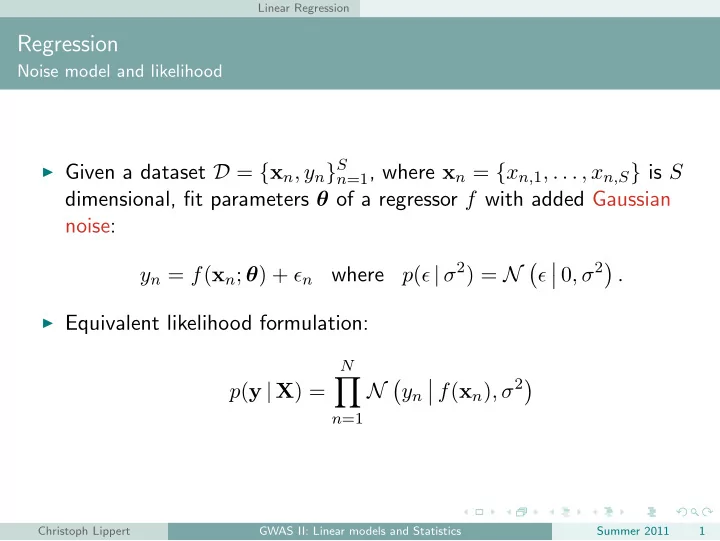

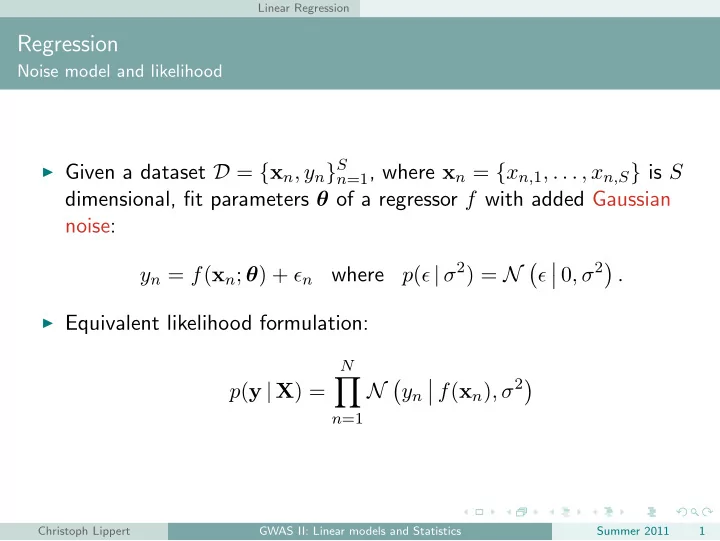

Linear Regression: Noise model and likelihood

◮ Given a dataset $D = \{\mathbf{x}_n, y_n\}_{n=1}^{N}$, where $\mathbf{x}_n = \{x_{n,1}, \dots, x_{n,S}\}$ is $S$-dimensional, fit the parameters $\boldsymbol{\theta}$ of a regressor $f$ with added Gaussian noise:

$$y_n = f(\mathbf{x}_n; \boldsymbol{\theta}) + \epsilon_n, \qquad \text{where } p(\epsilon \mid \sigma^2) = \mathcal{N}(\epsilon \mid 0, \sigma^2).$$

◮ Equivalent likelihood formulation:

$$p(\mathbf{y} \mid X) = \prod_{n=1}^{N} \mathcal{N}\left(y_n \mid f(\mathbf{x}_n), \sigma^2\right)$$

Christoph Lippert, GWAS II: Linear models and Statistics, Summer 2011
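To make the generative model concrete, the sketch below draws a synthetic dataset from $y_n = f(\mathbf{x}_n; \boldsymbol{\theta}) + \epsilon_n$. It assumes a linear $f$ (the choice made on the next slide) and standard-normal features; the function name `simulate_linear_gaussian` and all numeric values are illustrative, not taken from the slides.

```python
import numpy as np


def simulate_linear_gaussian(n_samples, theta, sigma2, seed=0):
    """Draw X and y from y_n = x_n . theta + eps_n with eps_n ~ N(0, sigma2).

    Hypothetical helper: assumes a linear f and standard-normal features.
    """
    rng = np.random.default_rng(seed)
    theta = np.asarray(theta, dtype=float)
    # N x S design matrix: one row x_n per sample
    X = rng.standard_normal((n_samples, theta.shape[0]))
    # i.i.d. Gaussian noise; numpy's `scale` is the standard deviation, not the variance
    eps = rng.normal(loc=0.0, scale=np.sqrt(sigma2), size=n_samples)
    y = X @ theta + eps
    return X, y


X, y = simulate_linear_gaussian(10_000, theta=[1.5, -2.0, 0.5], sigma2=0.25)
print(X.shape, y.shape)  # (10000, 3) (10000,)
```

With many samples, the residuals $y_n - \mathbf{x}_n \cdot \boldsymbol{\theta}$ should have mean close to 0 and variance close to $\sigma^2$.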

Linear Regression: Choosing a regressor

◮ Choose $f$ to be linear:

$$p(\mathbf{y} \mid X) = \prod_{n=1}^{N} \mathcal{N}\left(y_n \mid \mathbf{x}_n \cdot \boldsymbol{\theta} + c, \sigma^2\right)$$

◮ Consider the bias-free case, $c = 0$; otherwise, append a constant entry 1 to each $\mathbf{x}_n$ (an additional column of ones in $X$), so that $c$ becomes one of the weights.

[Figure: equivalent graphical model]
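The bias trick can be checked directly: prepending a column of ones turns the intercept $c$ into the first weight. A minimal sketch; `add_bias_column` is a hypothetical helper name and the numbers are arbitrary.

```python
import numpy as np


def add_bias_column(X):
    """Prepend a column of ones so that the intercept c becomes theta[0]."""
    X = np.asarray(X, dtype=float)
    return np.column_stack([np.ones(X.shape[0]), X])


X = np.array([[2.0, 3.0],
              [4.0, 5.0]])
X1 = add_bias_column(X)
theta = np.array([10.0, 1.0, -1.0])  # c = 10, followed by the original weights
print(X1 @ theta)                    # [9. 9.], same as X @ [1, -1] + 10
```

This is why the slides can restrict the derivation to the bias-free case without loss of generality.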

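Linear Regression: Maximum likelihood

◮ Taking the logarithm, we obtain

$$\ln p(\mathbf{y} \mid \boldsymbol{\theta}, \sigma^2) = \ln \prod_{n=1}^{N} \mathcal{N}\left(y_n \mid \mathbf{x}_n \cdot \boldsymbol{\theta}, \sigma^2\right) = -\frac{N}{2} \ln 2\pi\sigma^2 - \frac{1}{2\sigma^2} \underbrace{\sum_{n=1}^{N} \left(y_n - \mathbf{x}_n \cdot \boldsymbol{\theta}\right)^2}_{\text{sum of squares}}$$

◮ The first term does not depend on $\boldsymbol{\theta}$, so for fixed $\sigma^2$ the likelihood is maximized exactly when the sum of squared errors is minimized.

◮ Hence least squares and maximum likelihood yield the same estimate of $\boldsymbol{\theta}$.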
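The closed form above can be checked numerically against the log of the product of Gaussian densities, evaluated term by term with Python's `statistics.NormalDist`. This is a sketch on synthetic data; the function names and parameter values are illustrative assumptions.

```python
import math
from statistics import NormalDist

import numpy as np


def log_likelihood(y, X, theta, sigma2):
    """Closed form: -N/2 ln(2 pi sigma2) - (1 / (2 sigma2)) * sum of squares."""
    resid = y - X @ theta
    sum_of_squares = float(resid @ resid)
    return -0.5 * len(y) * math.log(2 * math.pi * sigma2) - sum_of_squares / (2 * sigma2)


def log_likelihood_pointwise(y, X, theta, sigma2):
    """Sum over n of ln N(y_n | x_n . theta, sigma2), using explicit density values."""
    sigma = math.sqrt(sigma2)  # NormalDist takes the standard deviation
    return sum(
        math.log(NormalDist(float(x_n @ theta), sigma).pdf(float(y_n)))
        for x_n, y_n in zip(X, y)
    )


rng = np.random.default_rng(1)
X = rng.standard_normal((20, 2))
y = X @ np.array([0.5, -1.0]) + 0.3 * rng.standard_normal(20)
theta, sigma2 = np.array([0.4, -0.9]), 0.09

print(log_likelihood(y, X, theta, sigma2))
print(log_likelihood_pointwise(y, X, theta, sigma2))  # same value up to rounding
```

Because $\sigma^2$ is held fixed, comparing two candidate values of $\boldsymbol{\theta}$ by log-likelihood gives the same ranking as comparing them by sum of squares.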

Linear Regression and Least Squares

[Figure: data point $(x_n, y_n)$ and the regression curve $f(x_n, \mathbf{w})$; from C.M. Bishop, Pattern Recognition and Machine Learning]

$$E(\boldsymbol{\theta}) = \frac{1}{2} \sum_{n=1}^{N} \left(y_n - \mathbf{x}_n \cdot \boldsymbol{\theta}\right)^2$$

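◮ Derivative w.r.t. a single weight entry $\theta_i$ (the constant term $-\frac{N}{2} \ln 2\pi\sigma^2$ drops out):

$$\frac{d}{d\theta_i} \ln p(\mathbf{y} \mid \boldsymbol{\theta}, \sigma^2) = \frac{d}{d\theta_i} \left( -\frac{1}{2\sigma^2} \sum_{n=1}^{N} \left(y_n - \mathbf{x}_n \cdot \boldsymbol{\theta}\right)^2 \right) = \frac{1}{\sigma^2} \sum_{n=1}^{N} \left(y_n - \mathbf{x}_n \cdot \boldsymbol{\theta}\right) x_{n,i}$$

◮ Set the gradient w.r.t. $\boldsymbol{\theta}$ to zero:

$$\nabla_{\boldsymbol{\theta}} \ln p(\mathbf{y} \mid \boldsymbol{\theta}, \sigma^2) = \frac{1}{\sigma^2} \sum_{n=1}^{N} \left(y_n - \mathbf{x}_n \cdot \boldsymbol{\theta}\right) \mathbf{x}_n^{\mathsf{T}} = 0 \;\Leftrightarrow\; X^{\mathsf{T}} X \boldsymbol{\theta} = X^{\mathsf{T}} \mathbf{y} \;\Rightarrow\; \boldsymbol{\theta}_{\mathrm{ML}} = \underbrace{\left(X^{\mathsf{T}} X\right)^{-1} X^{\mathsf{T}}}_{\text{pseudo-inverse}} \mathbf{y}$$

The last step requires $X^{\mathsf{T}} X$ to be invertible, i.e. $X$ must have full column rank.

◮ Here, the matrix $X$ stacks the inputs $\mathbf{x}_n$ as rows:

$$X = \begin{pmatrix} x_{1,1} & \dots & x_{1,S} \\ \vdots & \ddots & \vdots \\ x_{N,1} & \dots & x_{N,S} \end{pmatrix}$$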
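The derivation can be verified numerically: solve the normal equations $X^{\mathsf{T}} X \boldsymbol{\theta} = X^{\mathsf{T}} \mathbf{y}$, then confirm that the gradient vanishes at the solution and that it matches NumPy's least-squares and pseudo-inverse results. A sketch on synthetic data; `theta_ml` and `grad_log_likelihood` are hypothetical helper names.

```python
import numpy as np


def theta_ml(X, y):
    """theta_ML = (X^T X)^{-1} X^T y, computed by solving the normal equations.

    np.linalg.solve avoids forming the inverse explicitly; it still assumes
    X^T X is invertible (X has full column rank).
    """
    return np.linalg.solve(X.T @ X, X.T @ y)


def grad_log_likelihood(X, y, theta, sigma2):
    """(1/sigma2) * sum_n (y_n - x_n . theta) x_n^T, written as X^T (y - X theta) / sigma2."""
    return X.T @ (y - X @ theta) / sigma2


rng = np.random.default_rng(2)
X = rng.standard_normal((100, 3))
true_theta = np.array([1.0, 0.0, -0.5])
y = X @ true_theta + 0.1 * rng.standard_normal(100)

theta_hat = theta_ml(X, y)
print(theta_hat)                                   # close to true_theta
print(grad_log_likelihood(X, y, theta_hat, 0.01))  # numerically zero
```

Solving the linear system is usually preferred over computing $(X^{\mathsf{T}} X)^{-1}$ explicitly, since it is cheaper and numerically more stable; `np.linalg.lstsq` and `np.linalg.pinv` give the same estimate here.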

Hypothesis Testing

Example:

◮ Given a sample $D = \{\mathbf{x}_1, \dots, \mathbf{x}_N\}$.

◮ Test whether $H_0: \theta_s = 0$ (null hypothesis) or $H_1: \theta_s \neq 0$ (alternative hypothesis) is true.

◮ To show that $\theta_s \neq 0$, we can perform a statistical test that tries to reject $H_0$.

◮ Type-1 error: $H_0$ is rejected although it holds.

◮ Type-2 error: $H_0$ is accepted although it does not hold.

|                | $H_0$ holds                    | $H_0$ doesn't hold             |
|----------------|--------------------------------|--------------------------------|
| $H_0$ accepted | true negatives                 | false negatives (type-2 error) |
| $H_0$ rejected | false positives (type-1 error) | true positives                 |
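The decision table can be expressed as a small counting routine: given, for each test, whether $H_0$ actually holds and whether it was rejected, tally the four cells. This sketch assumes ground truth is known, which is only the case in simulations; the function name and the example inputs are hypothetical.

```python
def classify_outcomes(h0_holds, h0_rejected):
    """Count the four cells of the decision table.

    h0_holds[i]:    True if the null hypothesis actually holds for test i
    h0_rejected[i]: True if test i rejected H_0
    """
    counts = {"TN": 0, "FN": 0, "FP": 0, "TP": 0}
    for holds, rejected in zip(h0_holds, h0_rejected):
        if holds and not rejected:
            counts["TN"] += 1  # H_0 holds and is accepted
        elif not holds and not rejected:
            counts["FN"] += 1  # type-2 error
        elif holds and rejected:
            counts["FP"] += 1  # type-1 error
        else:
            counts["TP"] += 1  # H_0 fails and is rejected
    return counts


h0_holds = [True, True, False, False, True]
h0_rejected = [False, True, True, False, False]
print(classify_outcomes(h0_holds, h0_rejected))
# {'TN': 2, 'FN': 1, 'FP': 1, 'TP': 1}
```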

Hypothesis Testing

◮ Given a sample $D = \{\mathbf{x}_1, \dots, \mathbf{x}_N\}$.

◮ Test whether $H_0: \theta_s = 0$ (null hypothesis) or $H_1: \theta_s \neq 0$ (alternative hypothesis) is true.

◮ The significance level $\alpha$ sets the decision threshold of the test and equals the probability of a type-1 error. A smaller $\alpha$ means fewer false positives, but also a lower sensitivity (power) of the test.

◮ Usually, the decision is based on a test statistic.

◮ The critical region is the set of values of the test statistic that lead to rejection of $H_0$.
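The slides do not specify which test statistic is used. The sketch below assumes the simplest case: the noise variance $\sigma^2$ is known, so $z = \hat\theta_s / \sqrt{\sigma^2 [(X^{\mathsf{T}} X)^{-1}]_{ss}}$ is standard normal under $H_0$, and the critical region is $|z| > z_{1-\alpha/2}$. Simulating many datasets in which $\theta_s = 0$ then shows that the fraction of rejections (the type-1 error rate) is close to $\alpha$. The function name and all parameter values are illustrative assumptions.

```python
from statistics import NormalDist

import numpy as np


def z_test_reject(X, y, s, sigma2, alpha=0.05):
    """Two-sided test of H_0: theta_s = 0, ASSUMING the noise variance sigma2 is known.

    Under H_0, z = theta_ML[s] / sqrt(sigma2 * [(X^T X)^{-1}]_{ss}) is standard normal.
    Critical region: |z| > z_{1 - alpha/2}. If y is an (N, R) matrix, each of its
    R columns is tested separately.
    """
    XtX_inv = np.linalg.inv(X.T @ X)
    theta_hat = XtX_inv @ X.T @ y
    z = theta_hat[s] / np.sqrt(sigma2 * XtX_inv[s, s])
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return np.abs(z) > z_crit


rng = np.random.default_rng(3)
N, S, R, sigma2 = 50, 3, 4000, 1.0
X = rng.standard_normal((N, S))
theta = np.array([0.8, -0.3, 0.0])  # theta_2 = 0, so H_0 holds for s = 2
Y = (X @ theta)[:, None] + np.sqrt(sigma2) * rng.standard_normal((N, R))

rejected = z_test_reject(X, Y, s=2, sigma2=sigma2, alpha=0.05)
print(rejected.mean())  # empirical type-1 error rate, close to alpha
```

Testing $s = 0$ instead (where $\theta_0 = 0.8 \neq 0$) rejects $H_0$ in almost every replicate, which illustrates the power side of the trade-off.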
