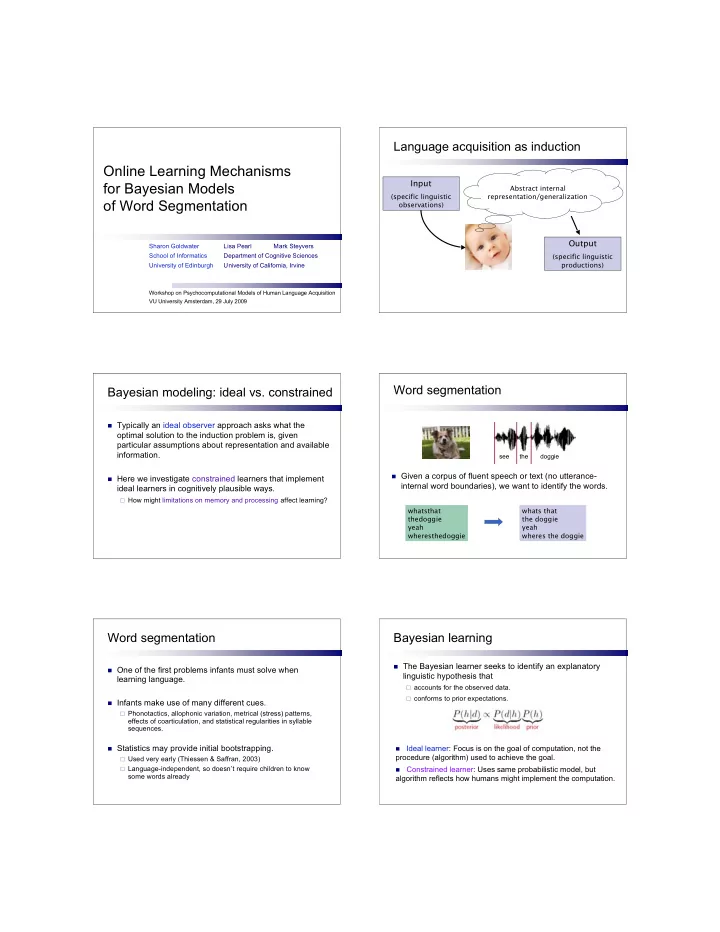

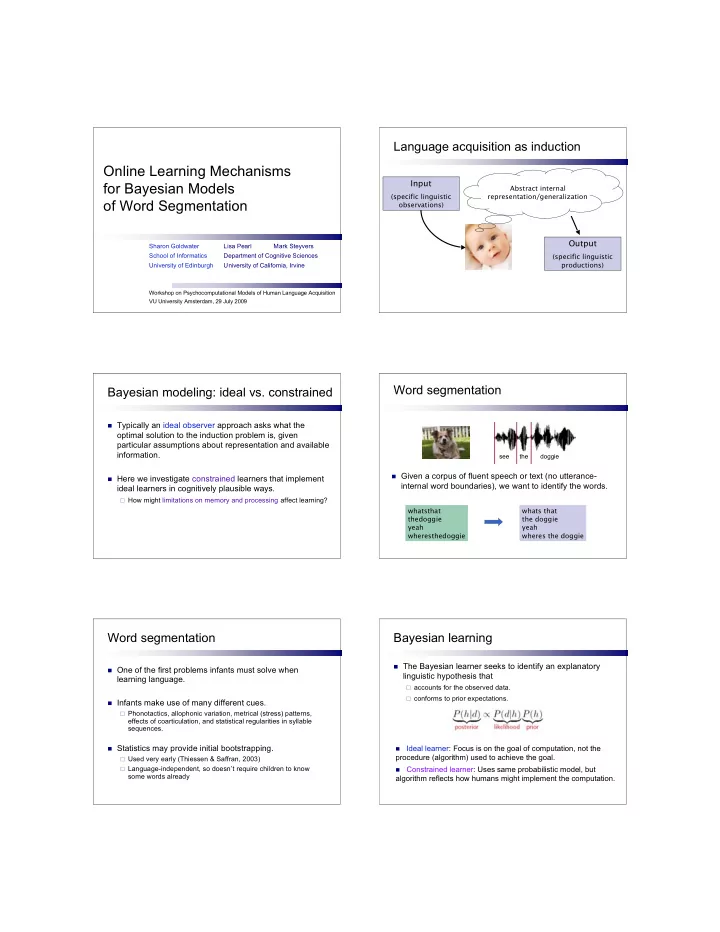

Language acquisition as induction Online Learning Mechanisms Input for Bayesian Models Abstract internal (specific linguistic representation/generalization of Word Segmentation observations) Output Sharon Goldwater Lisa Pearl Mark Steyvers School of Informatics Department of Cognitive Sciences (specific linguistic productions) University of Edinburgh University of California, Irvine Workshop on Psychocomputational Models of Human Language Acquisition VU University Amsterdam, 29 July 2009 Word segmentation Bayesian modeling: ideal vs. constrained Typically an ideal observer approach asks what the optimal solution to the induction problem is, given particular assumptions about representation and available information. see the doggie Given a corpus of fluent speech or text (no utterance- Here we investigate constrained learners that implement internal word boundaries), we want to identify the words. ideal learners in cognitively plausible ways. How might limitations on memory and processing affect learning? whatsthat whats that thedoggie the doggie yeah yeah wheresthedoggie wheres the doggie Word segmentation Bayesian learning The Bayesian learner seeks to identify an explanatory One of the first problems infants must solve when linguistic hypothesis that learning language. accounts for the observed data. conforms to prior expectations. Infants make use of many different cues. Phonotactics, allophonic variation, metrical (stress) patterns, effects of coarticulation, and statistical regularities in syllable sequences. Statistics may provide initial bootstrapping. Ideal learner: Focus is on the goal of computation, not the procedure (algorithm) used to achieve the goal. Used very early (Thiessen & Saffran, 2003) Language-independent, so doesn’t require children to know Constrained learner: Uses same probabilistic model, but some words already algorithm reflects how humans might implement the computation.

Bayesian segmentation An ideal Bayesian learner for word segmentation In the domain of segmentation, we have: Model considers hypothesis space of segmentations, preferring those where Data: unsegmented corpus (transcriptions) The lexicon is relatively small. Hypotheses: sequences of word tokens Words are relatively short. The learner has a perfect memory for the data Order of data presentation doesn’t matter. The entire corpus (or equivalent) is available in memory. = 1 if concatenating words forms corpus, Encodes assumptions or = 0 otherwise. biases in the learner. Note: only counts of lexicon items are required to Optimal solution is the segmentation with highest prior compute highest probability segmentation. (ask us how!) probability. Goldwater, Griffiths, and Johnson (2007, 2009) Investigating learner assumptions Corpus: child-directed speech samples If a learner assumes that words are independent units, what Bernstein-Ratner corpus: is learned from realistic data? [unigram model] 9790 utterances of phonemically transcribed child-directed speech (19-23 months), 33399 tokens and 1321 unique types. What if the learner assumes that words are units that help Average utterance length: 3.4 words predict other units? [bigram model] Average word length: 2.9 phonemes Example input: Approach of Goldwater, Griffiths, & Johnson (2007): use a Bayesian ideal observer to examine the consequences of yuwanttusiD6bUk youwanttoseethebook lUkD*z6b7wIThIzh&t looktheresaboywithhishat making these different assumptions. &nd6dOgi andadoggie ≈ yuwanttulUk&tDIs youwanttolookatthis ... ... Results: Ideal learner Results: Ideal learner sample segmentations Precision: #correct / #found Unigram model Bigram model Recall: #found / #true Word Tokens Boundaries Lexicon youwant to see thebook you want to see the book Prec Rec Prec Rec Prec Rec look theres aboy with his hat look theres a boy with his hat and adoggie and a doggie Ideal (unigram) 61.7 47.1 92.7 61.6 55.1 66.0 you wantto lookatthis you want to lookat this lookatthis lookat this Ideal (bigram) 74.6 68.4 90.4 79.8 63.3 62.6 havea drink have a drink okay now okay now whatsthis whats this whatsthat whats that The assumption that words predict other words is good: bigram model whatisit whatis it generally has superior performance look canyou take itout look canyou take it out ... ... Both models tend to undersegment, though the bigram model does so less (boundary precision > boundary recall)

How about online learners? Considering human limitations Online learners use the same probabilistic model, but process the data incrementally (one utterance at a time), rather than in a batch. What is the most direct translation of the ideal learner to an online learner that must process utterances one at a time? Dynamic Programming with Maximization (DPM) Dynamic Programming with Sampling (DPS) Decayed Markov Chain Monte Carlo (DMCMC) Dynamic Programming: Maximization Considering human limitations For each utterance: • Use dynamic programming to compute probabilites of all segmentations, given the current lexicon. What if humans don’t always choose the most • Choose the best segmentation. probable hypothesis, but instead sample among the • Add counts of segmented words to lexicon. different hypotheses available? you want to see the book 0.33 yu want tusi D6bUk 0.21 yu wanttusi D6bUk 0.15 yuwant tusi D6 bUk … … Algorithm used by Brent (1999), with different model. Dynamic Programming: Sampling Considering human limitations For each utterance: • Use dynamic programming to compute probabilites of all segmentations, given the current lexicon. What if humans are more likely to sample potential • Sample a segmentation. word boundaries that they have heard more recently • Add counts of segmented words to lexicon. (decaying memory = recency effect)? you want to see the book 0.33 yu want tusi D6bUk 0.21 yu wanttusi D6bUk 0.15 yuwant tusi D6 bUk … … Particle filter: more particles more memory

Decayed Markov Chain Monte Carlo Decayed Markov Chain Monte Carlo For each utterance: For each utterance: • Probabilistically sample s boundaries from all utterances • Probabilistically sample s boundaries from all utterances encountered so far. encountered so far. • Prob(sample b ) = b a -d where b a is the number of potential • Prob(sample b ) = b a -d where b a is the number of potential boundary locations between b and the end of the current boundary locations between b and the end of the current utterance and d is the decay rate (Marthi et al. 2002). utterance and d is the decay rate (Marthi et al. 2002). • Update lexicon after the s samples are completed. • Update lexicon after the s samples are completed. you want to see the book you want to see the book what’s this s samples s samples Probability of Probability of sampling boundary sampling boundary yuwant tusi D6 bUk yuwant tu si D6 bUk wAtsDIs Boundaries Boundaries Utterance 1 Utterance 1 Utterance 2 Decayed Markov Chain Monte Carlo Results: unigrams vs. bigrams Decay rates tested: 2, 1.5, 1, 0.75, 0.5, 0.25 F = 2 * Prec * Rec Probability of Prec + Rec sampling within current utterance Precision: d = 2 .942 #correct / #found d = 1.5 .772 Recall: d = 1 .323 #found / #true d = 0.75 .125 Results from 2nd half of corpus d = 0.5 .036 DMCMC Unigram: d=1, s=10000 DMCMC Bigram: d=0.5, s=15000 d = 0.25 .009 Results: unigrams vs. bigrams Results: unigrams vs. bigrams F = 2 * Prec * Rec F = 2 * Prec * Rec Prec + Rec Prec + Rec Precision: Precision: #correct / #found #correct / #found Recall: Recall: #found / #true #found / #true Like the Ideal learner, the DPM bigram learner performs better than the However, the DPS and DMCMC bigram learners perform worse than unigram learner, though improvement is not as great as in the Ideal the unigram learners. The bigram assumption is not helpful. learner. The bigram assumption is helpful.

Results: unigrams vs. bigrams for the lexicon Results: unigrams vs. bigrams for the lexicon F = 2 * Prec * Rec F = 2 * Prec * Rec Prec + Rec Prec + Rec Precision: Precision: #correct / #found #correct / #found Recall: Recall: #found / #true #found / #true Results from 2nd half of corpus Lexicon = a seed pool of words for children to use to figure out Like the Ideal learner, the DPM bigram learner yields a more reliable language-dependent word segmentation strategies. lexicon than the unigram learner. Results: under vs. oversegmentation Results: unigrams vs. bigrams for the lexicon F = 2 * Prec * Rec Precision: Prec + Rec #correct / #found Recall: Precision: #found / #true #correct / #found Recall: #found / #true Results from 2nd half of corpus However, the DPS and DMCMC bigram learners yield much less Undersegmentation: boundary precision > boundary recall reliable lexicons than the unigram learners. Oversegmentation: boundary precision < boundary recall Results: under vs. oversegmentation Results: under vs. oversegmentation Precision: Precision: #correct / #found #correct / #found Recall: Recall: #found / #true #found / #true The DMCMC learner, like the Ideal learner, tends to undersegment. The DPM and DPS learners, however, tend to oversegment.

Recommend

More recommend