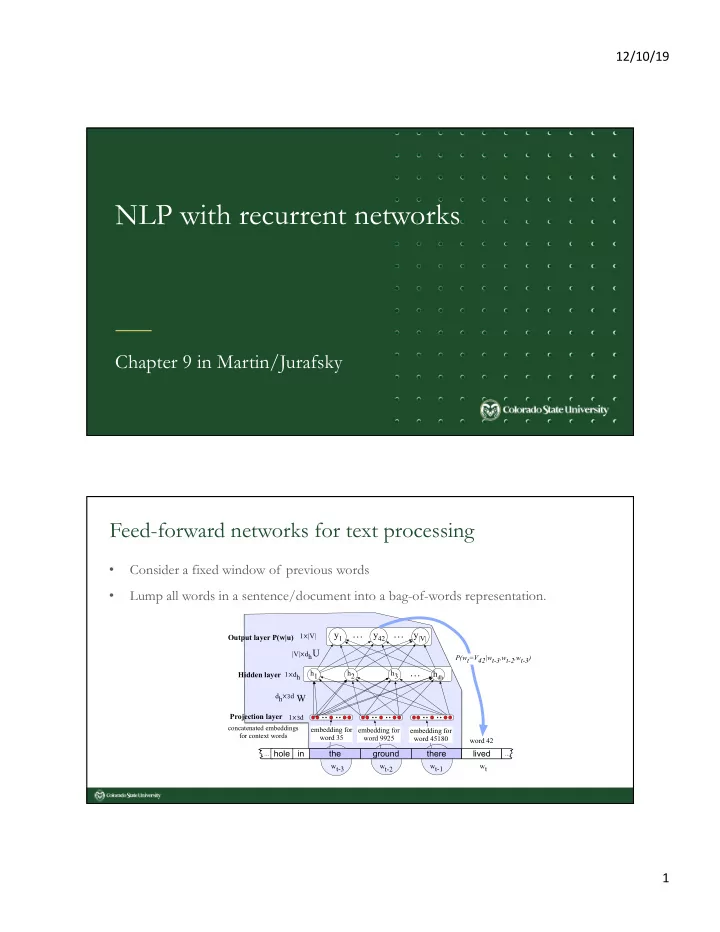

12/10/19

NLP with recurrent networks
Chapter 9 in Jurafsky and Martin

Feed-forward networks for text processing
• Consider a fixed window of previous words
• Lump all words in a sentence/document into a bag-of-words representation.

[Figure: feed-forward neural language model predicting $w_t$ from the context words $w_{t-3}, w_{t-2}, w_{t-1}$ (example text: "... hole in the ground there lived ..."). The embeddings of the context words (words 35, 9925, and 45180) are concatenated into a projection layer of size $1 \times 3d$. Weights $W$ ($d_h \times 3d$) map it to a hidden layer $h_1, \dots, h_{d_h}$ of size $1 \times d_h$, and weights $U$ ($|V| \times d_h$) map that to an output layer $y_1, \dots, y_{|V|}$ of size $1 \times |V|$ giving $P(w \mid u)$; for example, $y_{42} = P(w_t = V_{42} \mid w_{t-3}, w_{t-2}, w_{t-1})$.]
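The fixed-window model in the figure can be sketched in a few lines of NumPy. This is a minimal illustration, not code from the slides: the toy sizes, the random (untrained) weights, the `tanh` hidden activation, and the name `next_word_distribution` are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

V_SIZE = 50   # toy vocabulary size |V| (assumed)
D = 4         # embedding dimension d (assumed)
D_H = 8       # hidden layer size d_h (assumed)
CONTEXT = 3   # window of previous words: w_{t-3}, w_{t-2}, w_{t-1}

E = rng.normal(scale=0.1, size=(V_SIZE, D))          # embedding table, one row per word
W = rng.normal(scale=0.1, size=(D_H, CONTEXT * D))   # d_h x 3d
U = rng.normal(scale=0.1, size=(V_SIZE, D_H))        # |V| x d_h


def softmax(z):
    z = z - np.max(z)          # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def next_word_distribution(context_ids):
    """Return P(w_t | w_{t-3}, w_{t-2}, w_{t-1}) as a vector of length |V|."""
    projection = np.concatenate([E[i] for i in context_ids])  # shape (3d,)
    hidden = np.tanh(W @ projection)                          # shape (d_h,), tanh is assumed
    return softmax(U @ hidden)                                # shape (|V|,)


# Toy word ids; the ids on the slide come from a much larger vocabulary.
probs = next_word_distribution([35, 12, 7])
```

Entry `probs[42]` plays the role of $y_{42}$ in the figure. Because the weights are random, the values carry no meaning until the model is trained.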

Limitations of feed-forward networks for text processing
• In general this is an insufficient model of language, because language has long-distance dependencies: “The computer(s) which I had just put into the machine room on the fourth floor is (are) crashing.”
• Alternative: recurrent networks

Simple recurrent networks
• Recurrent networks deal with sequences of inputs/outputs
• $x_t$, $y_t$, $h_t$: input/output/state at step $t$

[Figure: input $x_t$ feeds the state $h_t$, which produces the output $y_t$.]

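Recurrent networks
• Recurrent network illustrated as a feed-forward network:

$$h_t = g(U h_{t-1} + W x_t)$$
$$y_t = f(V h_t)$$

[Figure: $h_{t-1}$ and $x_t$ feed into $h_t$ through $U$ and $W$; $h_t$ feeds into $y_t$ through $V$.]

Generating output from a recurrent network

function FORWARDRNN(x, network) returns output sequence y
    h_0 ← 0
    for i ← 1 to LENGTH(x) do
        h_i ← g(U h_{i-1} + W x_i)
        y_i ← f(V h_i)
    return y

[Figure: the network unrolled over three steps, with inputs $x_1, x_2, x_3$, states $h_0, h_1, h_2, h_3$, and outputs $y_1, y_2, y_3$; the same $U$, $W$, and $V$ are used at every step.]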
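The FORWARDRNN procedure translates almost line for line into NumPy. In this sketch the choices $g = \tanh$ and $f = \text{softmax}$, the toy dimensions, and the random weights are assumptions; the slides leave $g$ and $f$ unspecified.

```python
import numpy as np


def softmax(z):
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()


def forward_rnn(xs, U, W, V, g=np.tanh, f=softmax):
    """Return the output sequence y for the input sequence xs."""
    h = np.zeros(U.shape[0])       # h_0 <- 0
    ys = []
    for x in xs:                   # for i <- 1 to LENGTH(x)
        h = g(U @ h + W @ x)       # h_i <- g(U h_{i-1} + W x_i)
        ys.append(f(V @ h))        # y_i <- f(V h_i)
    return np.array(ys)


rng = np.random.default_rng(0)
d_in, d_h, d_out = 3, 5, 4                     # toy sizes (assumed)
U = rng.normal(scale=0.5, size=(d_h, d_h))
W = rng.normal(scale=0.5, size=(d_h, d_in))
V = rng.normal(scale=0.5, size=(d_out, d_h))
xs = rng.normal(size=(6, d_in))                # a sequence of 6 input vectors

ys = forward_rnn(xs, U, W, V)                  # one output distribution per step
```

The same three matrices are reused at every step, which is what lets the network handle sequences of any length.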

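Training recurrent networks
If we "unroll" a recurrent network, we can treat it like a feed-forward network. This approach is called backpropagation through time.

[Figure: the network unrolled over time steps $t_1, t_2, t_3$, with inputs $x_1, x_2, x_3$, states $h_0, h_1, h_2, h_3$, and outputs $y_1, y_2, y_3$, sharing $U$, $W$, and $V$ across steps.]

Applications of RNNs
Part-of-speech tagging

[Figure: an RNN applied to the sentence "Janet will back the bill".]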
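To make backpropagation through time concrete, here is a minimal sketch for a small RNN. Several simplifications are assumed and do not come from the slides: $g = \tanh$, a linear output ($f$ is the identity), a squared-error loss summed over time steps, and the function name `loss_and_grads`. A finite-difference check on one weight is included as a sanity test.

```python
import numpy as np


def loss_and_grads(xs, targets, U, W, V):
    """Squared-error loss of a tanh RNN with linear outputs, plus BPTT gradients."""
    T, d_h = len(xs), U.shape[0]
    hs = np.zeros((T + 1, d_h))            # hs[0] is h_0 = 0; hs[t + 1] is the state after xs[t]
    ys = []
    for t in range(T):                     # forward pass over the unrolled network
        hs[t + 1] = np.tanh(U @ hs[t] + W @ xs[t])
        ys.append(V @ hs[t + 1])
    ys = np.array(ys)
    loss = 0.5 * np.sum((ys - targets) ** 2)

    dU, dW, dV = np.zeros_like(U), np.zeros_like(W), np.zeros_like(V)
    dh_next = np.zeros(d_h)                # gradient arriving from the following time step
    for t in reversed(range(T)):           # backward pass, last time step first
        dy = ys[t] - targets[t]
        dV += np.outer(dy, hs[t + 1])
        dh = V.T @ dy + dh_next            # from this step's output and from the future
        da = dh * (1.0 - hs[t + 1] ** 2)   # through the tanh nonlinearity
        dU += np.outer(da, hs[t])          # shared weights: gradients add up over time
        dW += np.outer(da, xs[t])
        dh_next = U.T @ da
    return loss, dU, dW, dV


rng = np.random.default_rng(0)
U = rng.normal(scale=0.5, size=(4, 4))
W = rng.normal(scale=0.5, size=(4, 3))
V = rng.normal(scale=0.5, size=(2, 4))
xs = rng.normal(size=(5, 3))
targets = rng.normal(size=(5, 2))
loss, dU, dW, dV = loss_and_grads(xs, targets, U, W, V)

# Finite-difference estimate of d(loss)/dU[0, 1] to compare against dU[0, 1].
eps = 1e-6
U_plus, U_minus = U.copy(), U.copy()
U_plus[0, 1] += eps
U_minus[0, 1] -= eps
numeric = (loss_and_grads(xs, targets, U_plus, W, V)[0]
           - loss_and_grads(xs, targets, U_minus, W, V)[0]) / (2 * eps)
```

Because $U$, $W$, and $V$ are shared across time steps, each gradient is the sum of the contributions from every copy of the weights in the unrolled network.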

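Labeling sequences with RNNs
You can classify a sequence using RNNs. Useful for sentiment analysis.

[Figure: an RNN reads $x_1, x_2, x_3, \dots, x_n$; its final hidden state $h_n$ is passed to a softmax layer.]

Multi-layer RNNs
You can stack multiple recurrent layers:

[Figure: three stacked layers, RNN 1 (bottom) to RNN 3 (top). The inputs $x_1, x_2, x_3, \dots, x_n$ enter RNN 1 and the outputs $y_1, y_2, y_3, \dots, y_n$ come from RNN 3.]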
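Both ideas, classifying from the final state and stacking layers, fit in one short sketch. The helper names (`rnn_layer`, `stacked_rnn`, `classify_sequence`), the `tanh` activation, the toy sizes, and the random weights are assumptions made for illustration.

```python
import numpy as np


def softmax(z):
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()


def rnn_layer(xs, U, W):
    """Run one recurrent layer and return its hidden state at every step."""
    h = np.zeros(U.shape[0])
    hs = []
    for x in xs:
        h = np.tanh(U @ h + W @ x)
        hs.append(h)
    return np.array(hs)


def stacked_rnn(xs, layers):
    """Feed the hidden states of each layer to the next layer as its inputs."""
    seq = xs
    for U, W in layers:
        seq = rnn_layer(seq, U, W)
    return seq


def classify_sequence(xs, layers, V):
    """Class probabilities computed from the final hidden state h_n of the top layer."""
    h_n = stacked_rnn(xs, layers)[-1]
    return softmax(V @ h_n)


rng = np.random.default_rng(0)
d_in, d_h, n_classes = 3, 5, 2                 # toy sizes (assumed)
layers = []
for in_dim in (d_in, d_h, d_h):                # three layers, as in the figure
    U = rng.normal(scale=0.5, size=(d_h, d_h))
    W = rng.normal(scale=0.5, size=(d_h, in_dim))
    layers.append((U, W))
V = rng.normal(scale=0.5, size=(n_classes, d_h))
xs = rng.normal(size=(7, d_in))

top_states = stacked_rnn(xs, layers)           # one top-layer state per input
class_probs = classify_sequence(xs, layers, V)
```

Passing a list with a single `(U, W)` pair gives the single-layer classifier from the first figure.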

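Bi-directional RNNs
A sequence is processed in both directions using a bi-directional RNN:

[Figure: RNN 1 reads $x_1, x_2, x_3, \dots, x_n$ left to right and RNN 2 reads it right to left. At each position the two states are combined (+) to produce $y_1, y_2, y_3, \dots, y_n$.]

Bi-directional RNNs
A bi-directional RNN for sequence classification:

[Figure: the final state of the left-to-right RNN 1 (hn_forw) and the final state of the right-to-left RNN 2 (h1_back) are combined (+) and passed to a softmax layer.]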
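A bi-directional RNN can be sketched by running the same recurrence twice, once on the reversed input. The figures mark the combination with "+"; this sketch assumes concatenation, which is one common choice, and element-wise addition would be another. The function names, `tanh` activation, and toy sizes are likewise assumptions.

```python
import numpy as np


def rnn_states(xs, U, W):
    """Hidden state of a simple tanh RNN at every step."""
    h = np.zeros(U.shape[0])
    hs = []
    for x in xs:
        h = np.tanh(U @ h + W @ x)
        hs.append(h)
    return np.array(hs)


def bidirectional_states(xs, fwd, bwd):
    """Per-position states: forward state joined with the backward state."""
    h_forw = rnn_states(xs, *fwd)                # left to right
    h_back = rnn_states(xs[::-1], *bwd)[::-1]    # right to left, re-aligned to input order
    return np.concatenate([h_forw, h_back], axis=1)


def sequence_summary(xs, fwd, bwd):
    """hn_forw joined with h1_back: each has seen the whole sequence."""
    d_h = fwd[0].shape[0]
    states = bidirectional_states(xs, fwd, bwd)
    return np.concatenate([states[-1, :d_h], states[0, d_h:]])


rng = np.random.default_rng(0)
d_in, d_h = 3, 5                                 # toy sizes (assumed)
fwd = (rng.normal(scale=0.5, size=(d_h, d_h)), rng.normal(scale=0.5, size=(d_h, d_in)))
bwd = (rng.normal(scale=0.5, size=(d_h, d_h)), rng.normal(scale=0.5, size=(d_h, d_in)))
xs = rng.normal(size=(6, d_in))

per_step = bidirectional_states(xs, fwd, bwd)    # input to a per-position output layer
summary = sequence_summary(xs, fwd, bwd)         # input to a softmax classifier
```

For classification, `summary` would be fed to a softmax layer in place of the single final state $h_n$ used by a one-directional RNN.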

Long-term memory in RNNs
Although in principle RNNs can retain memory of the inputs they have seen, they tend to focus on the most recent history. Several RNN architectures have been proposed to address this issue and maintain contextual information over time (LSTMs, GRUs).

Other applications of RNNs
• Machine translation
  Cho, K., van Merrienboer, B., Gulcehre, C., Bougares, F., Schwenk, H., & Bengio, Y. (2014). Learning phrase representations using RNN encoder-decoder for statistical machine translation. EMNLP 2014.
  Sutskever, I., Vinyals, O., & Le, Q. V. (2014). Sequence to sequence learning with neural networks. Advances in Neural Information Processing Systems 27, 3104–3112.
• Voice recognition
• Analysis of DNA sequences

Machine translation using encoder/decoder networks
Composed of two phases:
• Encoder: learns a representation of the meaning of the whole sentence
• Decoder: translates the encoded representation of the sentence into individual words.

https://arxiv.org/pdf/1406.1078.pdf

Deep learning for DNA sequences
RNNs and convolutional networks have been shown to be useful for predicting various properties of DNA sequences and for discovering biological signals that are relevant to a phenomenon of interest.

Ameni Trabelsi, Mohamed Chaabane, Asa Ben-Hur. Comprehensive evaluation of deep learning architectures for prediction of DNA/RNA sequence binding specificities. Bioinformatics, 35(14), i269–i277, 2019 (ISMB 2019 special issue).
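Returning to the encoder/decoder split described above for machine translation, the two phases can be sketched with two simple RNNs. This is a deliberately simplified illustration and not the architecture of the cited paper: the plain `tanh` recurrence, greedy decoding, toy vocabulary sizes, random untrained weights, and the `BOS`/`EOS` token ids are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
SRC_VOCAB, TGT_VOCAB, D, D_H = 10, 12, 4, 6   # toy sizes (assumed)
EOS, BOS = 0, 1                               # hypothetical end/start token ids

E_src = rng.normal(scale=0.5, size=(SRC_VOCAB, D))   # source word embeddings
E_tgt = rng.normal(scale=0.5, size=(TGT_VOCAB, D))   # target word embeddings
U_enc = rng.normal(scale=0.5, size=(D_H, D_H))
W_enc = rng.normal(scale=0.5, size=(D_H, D))
U_dec = rng.normal(scale=0.5, size=(D_H, D_H))
W_dec = rng.normal(scale=0.5, size=(D_H, D))
V_out = rng.normal(scale=0.5, size=(TGT_VOCAB, D_H))


def encode(src_ids):
    """Encoder: read the whole source sentence and return its final state."""
    h = np.zeros(D_H)
    for i in src_ids:
        h = np.tanh(U_enc @ h + W_enc @ E_src[i])
    return h


def decode(context, max_len=8):
    """Decoder: start from the encoded sentence and emit one word id at a time."""
    h, prev, out = context, BOS, []
    for _ in range(max_len):
        h = np.tanh(U_dec @ h + W_dec @ E_tgt[prev])
        prev = int(np.argmax(V_out @ h))      # greedy choice of the next word
        if prev == EOS:
            break
        out.append(prev)
    return out


translation = decode(encode([3, 7, 2, 5]))    # list of target word ids
```

With untrained weights the output ids are meaningless; the point is only the data flow, in which the decoder sees the source sentence solely through the encoder's final state.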

Deep learning for DNA sequences
Using word2vec helps improve accuracy!
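The slide does not say how word2vec is applied to DNA. One common approach, assumed here rather than taken from the slide, is to split each sequence into overlapping k-mers and treat them as the "words" of a sentence. The function name `kmer_tokens` and the parameter defaults are hypothetical.

```python
def kmer_tokens(sequence, k=3, stride=1):
    """Split a DNA sequence into k-mers that can serve as 'words' for word2vec."""
    sequence = sequence.upper()
    return [sequence[i:i + k] for i in range(0, len(sequence) - k + 1, stride)]


tokens = kmer_tokens("ACGTAC", k=3)
# tokens == ['ACG', 'CGT', 'GTA', 'TAC']

# Map each distinct k-mer to an integer id, e.g. for an embedding table lookup.
vocab = {kmer: idx for idx, kmer in enumerate(sorted(set(tokens)))}
ids = [vocab[t] for t in tokens]
```

The resulting token lists would then be passed to a word2vec trainer, and the learned k-mer vectors used as the input embeddings of an RNN or convolutional network.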
