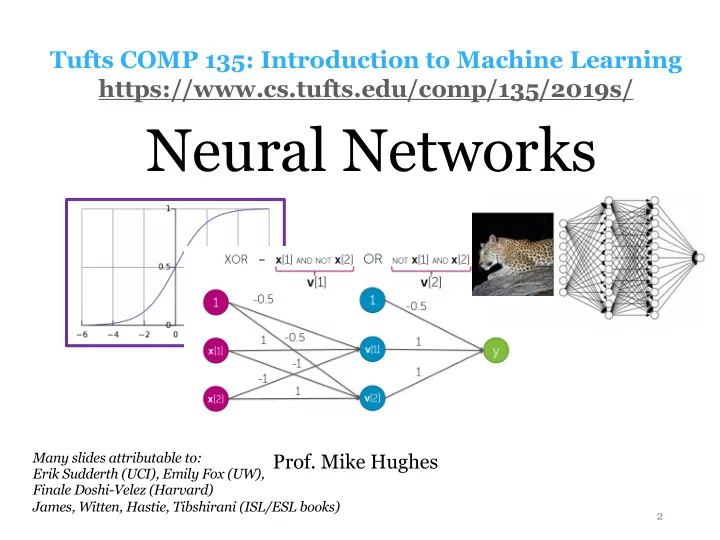

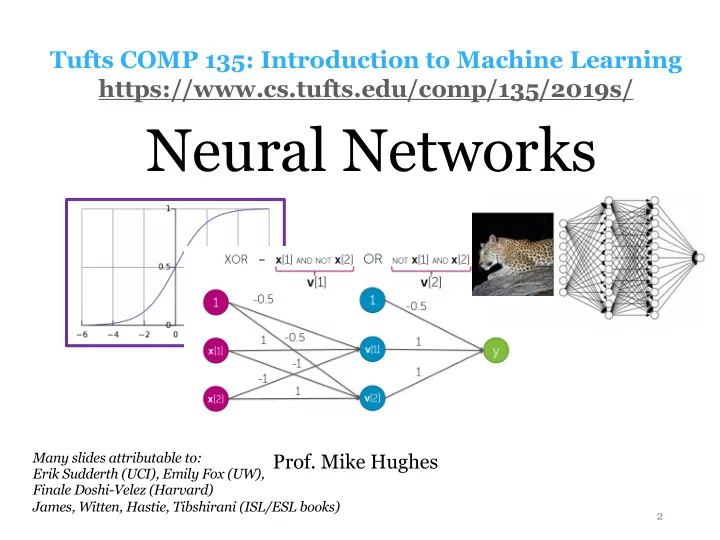

Tufts COMP 135: Introduction to Machine Learning
https://www.cs.tufts.edu/comp/135/2019s/

Neural Networks

Prof. Mike Hughes

Many slides attributable to: Erik Sudderth (UCI), Emily Fox (UW), Finale Doshi-Velez (Harvard), and James, Witten, Hastie, Tibshirani (ISL/ESL books)

Objectives Today: Neural Networks (day 10)
• How to learn feature representations
• Feed-forward neural nets
• Single neuron = linear function + activation
• Multi-layer perceptrons (MLPs)
• Universal approximation
• The Rise of Deep Learning:
• Success stories on Images and Language

Mike Hughes - Tufts COMP 135 - Fall 2020

What will we learn?
[Figure: overview of Supervised Learning, Unsupervised Learning, and Reinforcement Learning. In supervised learning, training uses data, label pairs $\{x_n, y_n\}_{n=1}^N$ for a task; prediction maps data $x$ to a label $y$; evaluation uses a performance measure.]

Task: Binary Classification
$y$ is a binary variable (red or blue)
[Figure: scatter plot of a binary classification dataset with axes $x_1$ and $x_2$. Sidebar: Supervised Learning (binary classification), Unsupervised Learning, Reinforcement Learning.]

Example: Hotdog or Not
https://www.theverge.com/tldr/2017/5/14/15639784/hbo-silicon-valley-not-hotdog-app-download

Text Sentiment Classification

Image Classification

Feature Transform Pipeline
[Figure: data, label pairs $\{x_n, y_n\}_{n=1}^N$ are transformed into feature, label pairs $\{\phi(x_n), y_n\}_{n=1}^N$ for the task and performance measure. At prediction time, data $x$ is mapped to features $\phi(x)$ and then to a label $y$.]

Predicted Probas vs Binary Labels

Decision Boundary is Linear

$$\{ x \in \mathbb{R}^2 : \sigma(w^T \tilde{x}) = 0.5 \} \longleftrightarrow \{ x \in \mathbb{R}^2 : w^T \tilde{x} = 0 \}$$
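The equivalence holds because the logistic sigmoid equals 0.5 exactly when its input is 0. The sketch below checks this numerically. It assumes the standard sigmoid 1 / (1 + exp(-z)) and that the augmented input is [1, x_1, x_2] (a bias feature first); the weight values and the helper name `predict_proba` are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(z):
    """Standard logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, x):
    """Probability of the positive class for a 2D input x.

    Assumes the augmented input is [1, x_1, x_2], so w[0] acts as the bias.
    """
    x_tilde = [1.0] + list(x)
    z = sum(w_i * x_i for w_i, x_i in zip(w, x_tilde))
    return sigmoid(z)

# Hypothetical weights: the boundary is the line -1 + 2*x_1 + x_2 = 0.
w = [-1.0, 2.0, 1.0]

# Three points chosen to lie exactly on that line.
on_boundary = [(0.0, 1.0), (0.5, 0.0), (1.0, -1.0)]
probas_on_boundary = [predict_proba(w, x) for x in on_boundary]

# One point on each side of the line.
proba_positive_side = predict_proba(w, (1.0, 1.0))
proba_negative_side = predict_proba(w, (0.0, 0.0))
```

Points on the line get probability 0.5, and the two sides of the line get probabilities above and below 0.5, so thresholding at 0.5 splits the plane with a straight line.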

Logistic Regr. Network Diagram
[Figure: network diagram of logistic regression; the output is 0 or 1.]
Credit: Emily Fox (UW) https://courses.cs.washington.edu/courses/cse416/18sp/slides/

A “Neuron” or “Perceptron” Unit
[Figure: a unit composed of a linear function with weights w followed by a non-linear activation function.]
Credit: Emily Fox (UW)
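A single unit can be sketched in a few lines. This is a minimal illustration, not the course's reference code: the function names (`linear`, `step`, `sigmoid`, `neuron`), the separate bias argument `b`, and the convention that the step outputs 1 only for strictly positive input are all assumptions made here.

```python
import math

def linear(w, b, x):
    """Linear function with weights w and bias b."""
    return b + sum(w_i * x_i for w_i, x_i in zip(w, x))

def step(z):
    """Hard step activation (assumed convention: 1 if z > 0, else 0)."""
    return 1 if z > 0 else 0

def sigmoid(z):
    """Smooth alternative to the hard step."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(w, b, x, activation):
    """One unit: a linear function followed by a non-linear activation."""
    return activation(linear(w, b, x))

# Hypothetical weights and inputs, for illustration only.
out_step_low = neuron([1.0, -1.0], 0.5, [1.0, 2.0], step)    # linear part is -0.5
out_step_high = neuron([2.0, 1.0], -1.0, [1.0, 1.0], step)   # linear part is 2.0
out_sigmoid = neuron([1.0, -1.0], 0.0, [1.0, 1.0], sigmoid)  # linear part is 0.0
```

With the sigmoid activation, this unit is the same computation as logistic regression.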

“Inspired” by brain biology
Slide Credit: Bhiksha Raj (CMU)

Challenge: Find w for these functions

| X_1 | X_2 | y | X_1 | X_2 | y |
|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | 0 |
| 0 | 1 | 0 | 0 | 1 | 1 |
| 1 | 0 | 0 | 1 | 0 | 1 |
| 1 | 1 | 1 | 1 | 1 | 1 |

Credit: Emily Fox (UW)
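The first truth table is logical AND and the second is logical OR. One possible answer to the challenge is sketched below. It assumes a hard step activation that outputs 1 when the linear part is strictly positive, and a separate bias term `b`; the specific weight values are one choice among many and are not taken from the slides.

```python
def step(z):
    """Hard step activation (assumed convention: 1 if z > 0, else 0)."""
    return 1 if z > 0 else 0

def unit(w, b, x):
    """Single neuron: step(w . x + b)."""
    return step(b + sum(w_i * x_i for w_i, x_i in zip(w, x)))

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Hypothetical weights: AND fires only when both inputs are on,
# OR fires when at least one input is on.
AND_W, AND_B = [1.0, 1.0], -1.5
OR_W, OR_B = [1.0, 1.0], -0.5

and_outputs = [unit(AND_W, AND_B, x) for x in INPUTS]
or_outputs = [unit(OR_W, OR_B, x) for x in INPUTS]
```

Both functions use the same weights and differ only in the bias, which shifts the linear decision boundary.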

What we can’t do with linear decision boundary classifiers

| X_1 | X_2 | y |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |
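A short derivation shows why no single unit can produce this table (XOR). It assumes the unit outputs 1 when $w_1 x_1 + w_2 x_2 + b > 0$ and 0 otherwise; the symbols $w_1, w_2, b$ are notation introduced here. The four rows require:

$$
\begin{aligned}
(0,0) \mapsto 0 &: \quad b \le 0 \\
(0,1) \mapsto 1 &: \quad w_2 + b > 0 \\
(1,0) \mapsto 1 &: \quad w_1 + b > 0 \\
(1,1) \mapsto 0 &: \quad w_1 + w_2 + b \le 0
\end{aligned}
$$

Adding the two middle inequalities gives $w_1 + w_2 + 2b > 0$, so $w_1 + w_2 + b > -b \ge 0$. This contradicts the last row, so no choice of weights works.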

Idea: Compose Neurons together!
[Figure: data $x$ is transformed into features $\phi(x)$, which are then classified to give a label $y$.]

Can you find w to solve XOR?
[Figure: network diagram with every weight marked “?”. Label: AND / OR.]
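One possible answer, sketched under assumptions: use two hidden units that compute OR and AND, then an output unit that fires when OR is on and AND is off. The weight values, the separate bias terms, and the step convention (1 if the input is strictly positive) are choices made here for illustration, not values taken from the slides.

```python
def step(z):
    """Hard step activation (assumed convention: 1 if z > 0, else 0)."""
    return 1 if z > 0 else 0

def unit(w, b, x):
    """Single neuron: step(w . x + b)."""
    return step(b + sum(w_i * x_i for w_i, x_i in zip(w, x)))

def xor_net(x):
    """Two-layer network: hidden layer [OR, AND], then one output unit."""
    h_or = unit([1.0, 1.0], -0.5, x)    # hidden unit 1 computes OR
    h_and = unit([1.0, 1.0], -1.5, x)   # hidden unit 2 computes AND
    # Output is on exactly when OR is on and AND is off.
    return unit([1.0, -1.0], -0.5, [h_or, h_and])

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_outputs = [xor_net(x) for x in INPUTS]
```

The hidden layer plays the role of the feature transform: in the (OR, AND) feature space the four points become linearly separable.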

1D Input + 3 hidden units
Example functions (before final threshold)
[Figure: two example plots of $f(x_1)$ against $x_1$.]
Intuition: Piece-wise step function, partitioning input space into regions
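The piece-wise step intuition can be sketched directly. This example assumes each hidden unit is a hard step that switches on at its own threshold, and that the output (before the final threshold) is a weighted sum of the hidden units. The thresholds and output weights are hypothetical values chosen for illustration.

```python
def step(z):
    """Hard step activation (assumed convention: 1 if z > 0, else 0)."""
    return 1 if z > 0 else 0

# Hypothetical parameters for 3 hidden units on a 1D input.
THRESHOLDS = [-1.0, 0.0, 2.0]       # hidden unit k turns on when x > THRESHOLDS[k]
OUTPUT_WEIGHTS = [1.0, -2.0, 1.5]   # contribution of each hidden unit to f

def f(x):
    """Network output before the final threshold: a piece-wise constant function."""
    hidden = [step(x - t) for t in THRESHOLDS]
    return sum(v * h for v, h in zip(OUTPUT_WEIGHTS, hidden))

# One sample point in each of the four regions cut out by the three thresholds.
values = [f(-2.0), f(-0.5), f(1.0), f(3.0)]
```

Three hidden units cut the real line into four regions, and the output weights set how much the function jumps when crossing each threshold.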

MLPs can approximate any function with enough hidden units!

Neuron Design
What’s wrong with a hard step activation function?
Not smooth! The gradient is zero almost everywhere, so it is hard to train the weights!
[Figure: a unit composed of a linear function with weights w followed by a non-linear activation function.]
Credit: Emily Fox (UW)
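The zero-gradient problem can be seen numerically. The sketch below compares a central finite-difference slope of the hard step with that of the sigmoid at a few points away from zero. The helper name `numerical_grad`, the step size `eps`, and the sample points are assumptions made for this illustration.

```python
import math

def step(z):
    """Hard step activation (assumed convention: 1 if z > 0, else 0)."""
    return 1.0 if z > 0 else 0.0

def sigmoid(z):
    """Smooth logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))

def numerical_grad(f, z, eps=1e-4):
    """Central finite-difference estimate of df/dz at z."""
    return (f(z + eps) - f(z - eps)) / (2 * eps)

POINTS = [-2.0, -0.5, 0.5, 2.0]  # sample inputs, none at the jump z = 0

step_grads = [numerical_grad(step, z) for z in POINTS]
sigmoid_grads = [numerical_grad(sigmoid, z) for z in POINTS]

# Analytic sigmoid derivative, sigma(z) * (1 - sigma(z)), for comparison.
sigmoid_grads_exact = [sigmoid(z) * (1.0 - sigmoid(z)) for z in POINTS]
```

The step gives no signal about which direction to move the weights, while the sigmoid gives a nonzero slope everywhere, which is what gradient-based training needs.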

Which Activation Function?
[Figure: a unit composed of a linear function with weights w followed by a non-linear activation function.]
Credit: Emily Fox (UW)

Activation Functions Advice
Credit: Emily Fox (UW)

Exciting Applications: Computer Vision

Object Recognition from Images

Deep Neural Networks for Object Recognition
Scores for each possible object category. Decision: “leopard”

Deep Neural Networks for Object Recognition
Scores for each possible object category. Decision: “mushroom”

Each Layer Extracts “Higher Level” Features

More layers = less error!
ImageNet challenge: 1000 categories, 1.2 million images in training set
[Figure: top-5 classification error (%) by year, 2010 to 2015, with a “shallow” label on part of the chart.]
Credit: KDD Tutorial by Sun, Xiao, & Choi: http://dl4health.org/
Figure idea originally from He et al., CVPR 2016

2012 ImageNet Challenge Winner

State of the art Results

Semantic Segmentation

Object Detection

Exciting Applications: Natural Language (Spoken and Written)

Reaching Human Performance in Speech-to-Text
https://arxiv.org/pdf/1610.05256.pdf

Gains in Translation Quality
https://ai.googleblog.com/2016/09/a-neural-network-for-machine.html

Any Disadvantages?

Deep Neural Networks can overfit!
[Figure: spectrum from underfitting (1 layer, few units / layer) to overfitting (many layers, many units / layer).]

Ways to avoid overfitting
• More training data!
• L2 / L1 penalties on weights
• More tricks (next week) …
  • Early stopping
  • Dropout
  • Data augmentation
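As an illustration of the L2 / L1 bullet, a penalized training objective can be sketched as the data loss plus a penalty strength times a norm of the weights. This is a minimal sketch, assuming a binary log loss as the data term; the function names and the strength parameter `alpha` are hypothetical and not taken from the slides.

```python
import math

def log_loss(y_true, p_pred):
    """Mean binary cross-entropy between labels in {0, 1} and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def l2_penalty(weights):
    """Sum of squared weights."""
    return sum(w * w for w in weights)

def l1_penalty(weights):
    """Sum of absolute weights."""
    return sum(abs(w) for w in weights)

def penalized_loss(y_true, p_pred, weights, alpha, penalty=l2_penalty):
    """Data loss plus alpha times the chosen weight penalty (alpha is a hypothetical name)."""
    return log_loss(y_true, p_pred) + alpha * penalty(weights)

# Tiny made-up example, for illustration only.
y = [1, 0, 1]
p = [0.9, 0.2, 0.6]
w = [3.0, -4.0]

plain = penalized_loss(y, p, w, alpha=0.0)
with_l2 = penalized_loss(y, p, w, alpha=0.01, penalty=l2_penalty)
with_l1 = penalized_loss(y, p, w, alpha=0.01, penalty=l1_penalty)
```

Larger `alpha` pushes the optimizer toward smaller weights, trading some training fit for a smoother function.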

Objectives Today: Neural Networks (Unit 1/2)
• How to learn feature representations
• Feed-forward neural nets
• Single neuron = linear function + activation
• Multi-layer perceptrons (MLPs)
• Universal approximation
• The Rise of Deep Learning:
• Success stories on Images and Language
