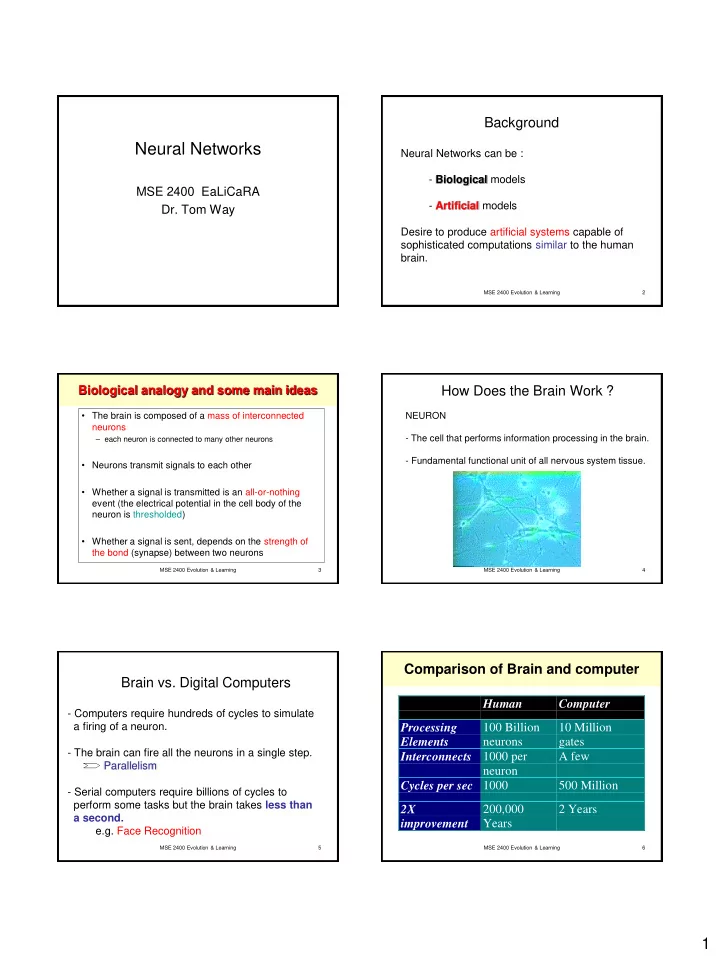

Neural Networks

MSE 2400 EaLiCaRA, Dr. Tom Way

MSE 2400 Evolution & Learning

Background
- Neural networks can be:
  - biological models
  - artificial models
- Goal: to produce artificial systems capable of sophisticated computations similar to those of the human brain.

Biological analogy and some main ideas

How Does the Brain Work?
- NEURON: the cell that performs information processing in the brain, and the fundamental functional unit of all nervous system tissue.
- The brain is composed of a mass of interconnected neurons; each neuron is connected to many other neurons.
- Neurons transmit signals to each other.
- Whether a signal is transmitted is an all-or-nothing event (the electrical potential in the cell body of the neuron is thresholded).
- Whether a signal is sent depends on the strength of the bond (synapse) between the two neurons.

Comparison of Brain and Computer

| | Human | Computer |
|---|---|---|
| Processing elements | 100 billion neurons | 10 million gates |
| Interconnects | 1,000 per neuron | A few |
| Cycles per second | 1,000 | 500 million |
| Time for a 2× improvement | 200,000 years | 2 years |

Brain vs. Digital Computers
- Computers require hundreds of cycles to simulate the firing of a neuron.
- Parallelism: the brain can fire all of its neurons in a single step.
- Serial computers require billions of cycles for some tasks that the brain completes in less than a second, e.g., face recognition.
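The all-or-nothing firing rule described under How Does the Brain Work? can be sketched as a threshold unit. This is a minimal sketch, assuming the neuron sums its inputs weighted by synapse strength and fires only when that sum exceeds a fixed threshold; the function name `fires` and all numeric values are hypothetical.

```python
def fires(inputs, weights, threshold):
    """Return 1 if the weighted input sum exceeds the threshold, else 0.

    Each weight stands in for a synapse strength (hypothetical values):
    positive for an excitatory synapse, negative for an inhibitory one.
    """
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

# Two excitatory synapses and one inhibitory synapse (illustrative values).
weights = [0.6, 0.6, -1.0]
print(fires([1, 1, 0], weights, threshold=1.0))  # 1: sum 1.2 exceeds 1.0
print(fires([1, 1, 1], weights, threshold=1.0))  # 0: the inhibitory input lowers the sum to about 0.2
```

The output is either 0 or 1 and nothing in between, which is the "all-or-nothing" behavior; the synapse strengths decide whether the threshold is crossed.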

Biological neuron

(Figure: a biological neuron with its synapse, axon, nucleus, cell body and dendrites labeled.)
- A neuron has:
  - a branching input (the dendrites)
  - a branching output (the axon)
- Information flows from the dendrites to the axon via the cell body.
- The axon connects to the dendrites of other neurons via synapses.
  - Synapses vary in strength.
  - Synapses may be excitatory or inhibitory.

What is an artificial neuron?
- Definition: a non-linear, parameterized function with a restricted output range:

$$y = f\left(w_0 + \sum_{i=1}^{n-1} w_i x_i\right)$$

where $x_1, \dots, x_{n-1}$ are the inputs, $w_1, \dots, w_{n-1}$ their weights, $w_0$ a bias weight, and $f$ the non-linear function.

(Figure: inputs x1, x2, x3 combined by a single neuron into the output y.)

Learning in Neural Networks
- Learning is the procedure of estimating the parameters of the neurons so that the whole network can perform a specific task.
- Two types of learning:
  - supervised learning
  - unsupervised learning
- The (supervised) learning process:
  - Present the network with a number of inputs and their corresponding outputs.
  - See how closely the actual outputs match the desired ones.
  - Modify the parameters to better approximate the desired outputs.

Supervised learning
- The desired response of the neural network to particular inputs is well known.
- A "professor" (teacher) may provide examples and teach the neural network how to fulfill a certain task.

Unsupervised learning
- Idea: group typical input data according to similarity criteria that are not known a priori.
- Data clustering.
- No need for a professor:
  - The network finds the correlations in the data by itself.
  - Example of such networks: Kohonen feature maps.

Zipcode Example

(Figure: examples of handwritten postal codes drawn from a database available from the US Postal Service.)
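The artificial-neuron definition can be written as a short Python function. This sketch assumes the logistic sigmoid as the non-linear function f, since it has the restricted output range (0, 1) that the definition asks for; the slides do not fix a particular f, and the names `sigmoid` and `neuron` and the example weights are illustrative.

```python
import math

def sigmoid(z):
    """Logistic function: non-linear, with output restricted to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, w0, f=sigmoid):
    """Compute y = f(w0 + sum_i w_i * x_i) for one artificial neuron.

    x  -- the inputs x_1 ... x_{n-1}
    w  -- one weight per input
    w0 -- the bias weight
    """
    if len(x) != len(w):
        raise ValueError("need exactly one weight per input")
    return f(w0 + sum(wi * xi for wi, xi in zip(w, x)))

# Weighted sum: 0.2 + 0.4*1.0 - 0.3*0.5 + 0.1*(-2.0) = 0.25
y = neuron([1.0, 0.5, -2.0], [0.4, -0.3, 0.1], w0=0.2)
print(round(y, 4))  # about 0.5622, i.e. sigmoid(0.25)
```

Passing a different `f`, such as a step function, turns the same code into a threshold unit; only the choice of f changes, not the weighted sum.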

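The three steps of the supervised learning process (present the inputs, compare actual and desired outputs, modify the parameters) can be illustrated with a single threshold neuron. As an assumption, this sketch uses the classic perceptron learning rule and the logical AND function as the teacher's examples; neither is specified on the slides, and `train_perceptron` and `predict` are hypothetical names.

```python
def step(z):
    """Threshold (all-or-nothing) activation."""
    return 1 if z > 0 else 0

def predict(w0, w, x):
    """Output of one threshold neuron with bias w0 and weights w."""
    return step(w0 + sum(wi * xi for wi, xi in zip(w, x)))

def train_perceptron(examples, n_inputs, rate=1.0, max_epochs=20):
    """Supervised training of one threshold neuron with the perceptron rule.

    examples: (inputs, desired_output) pairs supplied by the teacher.
    """
    w0, w = 0.0, [0.0] * n_inputs
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in examples:
            # 1. Present the inputs and compute the actual output.
            y = predict(w0, w, x)
            # 2. Compare the actual output with the desired output.
            err = target - y
            # 3. Modify the parameters to move the output toward the target.
            if err != 0:
                mistakes += 1
                w0 += rate * err
                w = [wi + rate * err * xi for wi, xi in zip(w, x)]
        if mistakes == 0:  # every example is now reproduced correctly
            break
    return w0, w

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w0, w = train_perceptron(AND, n_inputs=2)
print(w0, w, [predict(w0, w, x) for x, _ in AND])  # -2.0 [2.0, 1.0] [0, 0, 0, 1]
```

Training stops as soon as a full pass over the examples produces no mistakes, so the loop ends once the desired outputs are matched.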
Neural Networks
- A mathematical model to solve engineering problems:
  - a group of highly connected neurons that realize compositions of non-linear functions.
- Tasks:
  - Classification
  - Discrimination
  - Estimation
- Two types of networks:
  - Feed-forward neural networks
  - Recurrent neural networks

Character recognition example
- Image of 256×256 pixels
- 8-bit pixel values (grey level)
- $2^{256 \times 256 \times 8} \approx 10^{158000}$ different images
- It is therefore necessary to extract features.

Feed-forward Networks
- Information is propagated from the inputs to the outputs.
- It can pass through one or more hidden layers.

(Figure: inputs x1, x2, …, xn feeding a 1st hidden layer, a 2nd hidden layer, and an output layer.)

Feed Forward Neural Networks
- Arranged in layers.
- Each unit is linked only to units in the next layer.
- No units are linked within the same layer, back to a previous layer, or across a skipped layer.
- Computations can proceed uniformly from the input units to the output units.
- No internal state exists.

Feed-Forward Example

(Figure: inputs I1, I2; hidden units H3, H4, H5, H6; output O7. Weights: W13 = -1, W24 = -1, W35 = 1, W25 = 1, W16 = 1, W46 = 1, W57 = 1, W67 = 1. Thresholds: t = -0.5 at H3 and H4, t = 1.5 at H5 and H6, t = 0.5 at O7.)

Recurrent Network (1)
- The brain is not, and cannot be, a feed-forward network.
- Allows activation to be fed back to previous units.
- Internal state is stored in the units' activation levels.
- Can become unstable.
- Can oscillate.
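Reading the weights and thresholds off the Feed-Forward Example figure, and assuming each unit outputs 1 when its weighted input sum exceeds its threshold t (and 0 otherwise), the network can be evaluated directly. This is a sketch under that reading of the figure: W25 is taken to come from input I2, and the helper names `unit` and `network` are hypothetical.

```python
def unit(weighted_inputs, t):
    """Threshold unit: output 1 if the sum of weight * activation exceeds t."""
    return 1 if sum(w * a for w, a in weighted_inputs) > t else 0

def network(i1, i2):
    """Evaluate the Feed-Forward Example for binary inputs I1 and I2."""
    h3 = unit([(-1, i1)], t=-0.5)          # W13 = -1
    h4 = unit([(-1, i2)], t=-0.5)          # W24 = -1
    h5 = unit([(1, h3), (1, i2)], t=1.5)   # W35 = 1, W25 = 1
    h6 = unit([(1, i1), (1, h4)], t=1.5)   # W16 = 1, W46 = 1
    return unit([(1, h5), (1, h6)], t=0.5) # W57 = 1, W67 = 1 (output O7)

for i1 in (0, 1):
    for i2 in (0, 1):
        print(i1, i2, network(i1, i2))
```

Under these assumptions the output is 0 for inputs (0, 0) and (1, 1) and 1 for the mixed inputs, i.e. the network computes exclusive-or (XOR). Because every weighted sum is an integer and every threshold ends in .5, using `>` or `>=` in `unit` gives the same result.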

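The image count in the character-recognition example follows from counting bits: each image has 256 × 256 × 8 bits, so there are 2 raised to that many distinct images. The sketch below (variable names are illustrative) works with the base-10 exponent rather than building the enormous integer itself.

```python
import math

width, height, bits_per_pixel = 256, 256, 8
total_bits = width * height * bits_per_pixel  # bits needed to store one image

# The number of distinct images is 2 ** total_bits. Its base-10 exponent is
# total_bits * log10(2), which avoids materializing a 500,000-bit integer.
exponent = total_bits * math.log10(2)
print(total_bits, round(exponent))  # 524288 157826
```

So there are about $10^{157826}$ possible images, which the slide rounds to $10^{158000}$; either way, the raw pixel space is far too large to handle image by image.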
Recurrent Neural Networks
- Can have arbitrary topologies.
- Can model systems with internal (dynamic) states.
- Delays are associated with specific weights.
- Training is more difficult.
- Performance may be problematic:
  - Stable outputs may be more difficult to evaluate.
  - Unexpected behavior (oscillation, chaos, …).

(Figure: a recurrent network over inputs x1, x2, with a delay of 0 or 1 marked on each connection.)

Recurrent Network (2)
- May take a long time to compute a stable output.
- The learning process is much more difficult.
- Can implement more complex designs.
- Can model certain systems with internal states.

Multi-layer Networks and Perceptrons
- Have one or more layers of hidden units.
- With two, possibly very large, hidden layers it is possible to implement any function.
- Networks without a hidden layer are called perceptrons.
- Perceptrons are very limited in what they can represent, but this makes their learning problem much simpler.

Perceptrons
- First studied in the late 1950s.
- Also known as layered feed-forward networks.
- The only efficient learning element at that time was for single-layered networks.
- Today, "perceptron" is used as a synonym for a single-layer, feed-forward network.

Perceptrons

(Figures only.)
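The claims that a recurrent network stores internal state in its activation level and can oscillate can be seen with a single threshold unit whose output is fed back to itself through a one-step delay. This is a minimal sketch: the update rule a(t+1) = step(w·a(t) + x(t) + b), the helper name `run`, and the weight and bias values are assumptions chosen for illustration.

```python
def step(z):
    """Threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0

def run(self_weight, bias, inputs, a0=0):
    """Simulate one threshold unit with a delayed self-connection.

    At each time step: a(t+1) = step(self_weight * a(t) + x(t) + bias).
    Returns the sequence of activations, one per input.
    """
    a, history = a0, []
    for x in inputs:
        a = step(self_weight * a + x + bias)
        history.append(a)
    return history

# Positive feedback: a single input pulse is remembered (internal state).
print(run(self_weight=1, bias=-0.5, inputs=[0, 1, 0, 0, 0]))  # [0, 1, 1, 1, 1]
# Negative feedback and no input: the unit oscillates.
print(run(self_weight=-1, bias=0.5, inputs=[0] * 6))          # [1, 0, 1, 0, 1, 0]
```

In the first run the activation level itself is the memory; in the second, feedback prevents the output from ever settling on a stable value.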

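Why perceptrons are so limited can be made concrete with exclusive-or (XOR). Assume a single threshold unit with weights $w_1, w_2$ that outputs 1 exactly when $w_1 x_1 + w_2 x_2 > t$ (equivalently, bias $w_0 = -t$ in the artificial-neuron formula). Each row of the XOR truth table then imposes one constraint:

$$
\begin{aligned}
(x_1, x_2) = (0, 0) \mapsto 0 &:\quad 0 \le t \\
(1, 0) \mapsto 1 &:\quad w_1 > t \\
(0, 1) \mapsto 1 &:\quad w_2 > t \\
(1, 1) \mapsto 0 &:\quad w_1 + w_2 \le t
\end{aligned}
$$

Adding the second and third constraints gives $w_1 + w_2 > 2t \ge t$, because the first constraint says $t \ge 0$. This contradicts the fourth constraint, so no single threshold unit computes XOR: XOR is not linearly separable, and a network with hidden units is required.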
Need for hidden units
- If there is one layer of enough hidden units, the input can be recoded (perhaps just memorized).
- This recoding allows any mapping to be represented.
- Problem: how can the weights of the hidden units be trained?

What can Perceptrons Represent?
- Some complex Boolean functions can be represented, for example the majority function (covered in this lecture).
- However, perceptrons are limited in the Boolean functions they can represent.

Backpropagation
- In 1969 a method for learning in multi-layer networks, backpropagation, was invented by Bryson and Ho.
- The backpropagation algorithm is a sensible approach to dividing the output error among the weights according to each weight's contribution.
- It works basically the same way as perceptron learning.

Backpropagation flow

(Figure only.)

Backpropagation Network training
1. Initialize the network with random weights.
2. For all training cases (called examples):
   - a. Present the training inputs to the network and calculate the output.
   - b. For all layers (starting with the output layer, back to the input layer):
     - i. Compare the network output with the correct output, i.e. what you want the network to produce (error function).
     - ii. Adapt the weights in the current layer.

Backpropagation Algorithm – Main Idea – error in hidden layers

The ideas of the algorithm can be summarized as follows:
1. Compute the error term for the output units using the observed error.
2. Starting from the output layer, repeat
   - propagating the error term back to the previous layer, and
   - updating the weights between the two layers

   until the earliest hidden layer is reached.
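The majority function mentioned above is a good example of a Boolean function that a single perceptron can represent. Give every input weight 1 and set the threshold to n/2, so the unit fires exactly when more than half of its n binary inputs are 1. The sketch below (the function name is hypothetical) checks this exhaustively for n = 5.

```python
from itertools import product

def majority_perceptron(x):
    """Single threshold unit with every weight 1 and threshold n/2."""
    n = len(x)
    weights = [1] * n
    total = sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > n / 2 else 0

# Compare with the definition of majority on every 5-bit input.
n = 5
assert all(
    majority_perceptron(x) == (1 if x.count(1) > n // 2 else 0)
    for x in product((0, 1), repeat=n)
)
```

For even n, a tie (exactly n/2 inputs on) yields 0, which matches a strict-majority definition.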

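The Backpropagation Network training steps and the main idea of the algorithm can be sketched for a network with one hidden layer. This is a minimal sketch, not the course's code: it assumes sigmoid units, a squared-error function, online (per-example) weight updates, XOR as training data, and a 2-3-1 layout; `init_net`, `forward`, `train_step`, and `total_error` are hypothetical names.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_net(n_in, n_hidden, seed=0):
    """1. Random initial weights; the last entry of each row is a bias weight."""
    rng = random.Random(seed)
    hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    output = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    return hidden, output

def forward(net, x):
    """Return the hidden activations and the single output for input list x."""
    hidden, output = net
    h = [sigmoid(sum(w * v for w, v in zip(row, x + [1.0]))) for row in hidden]
    y = sigmoid(sum(w * v for w, v in zip(output, h + [1.0])))
    return h, y

def train_step(net, x, target, rate):
    hidden, output = net
    # a. Present the inputs and calculate the output.
    h, y = forward(net, x)
    # Error term for the output unit, from the observed error (target - y).
    delta_out = (target - y) * y * (1 - y)
    # Propagate the error term back to the hidden layer, using the output
    # weights as they were when the output was computed.
    delta_hidden = [hj * (1 - hj) * output[j] * delta_out for j, hj in enumerate(h)]
    # Update the weights between the hidden and output layers...
    for j, hj in enumerate(h + [1.0]):
        output[j] += rate * delta_out * hj
    # ...then between the input and hidden layers.
    for j, row in enumerate(hidden):
        for i, xi in enumerate(x + [1.0]):
            row[i] += rate * delta_hidden[j] * xi

def total_error(net, examples):
    """Squared error summed over the examples, halved."""
    return sum((t - forward(net, x)[1]) ** 2 for x, t in examples) / 2

XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
net = init_net(n_in=2, n_hidden=3)
before = total_error(net, XOR)
for epoch in range(5000):
    for x, t in XOR:
        train_step(net, x, t, rate=0.5)
after = total_error(net, XOR)
print(round(before, 3), round(after, 3))
```

Depending on the random initial weights, plain backpropagation on XOR can settle in a local minimum, so the final error is not guaranteed to reach zero; the sketch only shows the mechanics of propagating error terms backward and updating weights layer by layer.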
How many hidden layers?
- Usually just one (i.e., a 2-layer net).
- How many hidden units in the layer?
  - Too few ==> can't learn
  - Too many ==> poor generalization

How big a training set?
- Determine your target error rate, e.
- The success rate is then 1 - e.
- A typical training set has approximately n/e examples, where n is the number of weights in the net.
- Example:
  - e = 0.1, n = 80 weights
  - training set size: 80 / 0.1 = 800
  - a net trained until it classifies 95% of the training set correctly should produce about 90% correct classification on the test set (typical).

Summary
- A neural network is a computational model that simulates some properties of the human brain.
- The connections and the nature of the units determine the behavior of a neural network.
- Perceptrons are feed-forward networks that can only represent linearly separable (very simple) functions.

Summary (cont'd)
- Given enough units, any function can be represented by multi-layer feed-forward networks.
- Backpropagation learning works on multi-layer feed-forward networks.
- Neural networks are widely used in developing artificial learning systems.
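The n/e rule of thumb is simple arithmetic once the number of weights is known. In this sketch, `count_weights` and `training_set_size` are hypothetical helpers, and the assumption is a fully connected feed-forward net, with or without one bias weight per non-input unit; the 8-8-2 layout is an invented network that happens to have 80 weights.

```python
def count_weights(layer_sizes, bias=True):
    """Number of weights in a fully connected feed-forward net.

    layer_sizes lists the units per layer, inputs first, e.g. [8, 8, 2].
    With bias=True each non-input unit gets one extra (bias) weight.
    """
    extra = 1 if bias else 0
    return sum((a + extra) * b for a, b in zip(layer_sizes, layer_sizes[1:]))

def training_set_size(n_weights, target_error):
    """Rule of thumb from the slide: roughly n / e training examples."""
    return round(n_weights / target_error)

n = count_weights([8, 8, 2], bias=False)  # 8*8 + 8*2 = 80 weights
e = 0.1
print(n, training_set_size(n, e), 1 - e)  # 80 800 0.9
```

Counting bias weights changes n (the same layout has 90 weights with biases), so the suggested training set size depends on how the weights are counted.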
