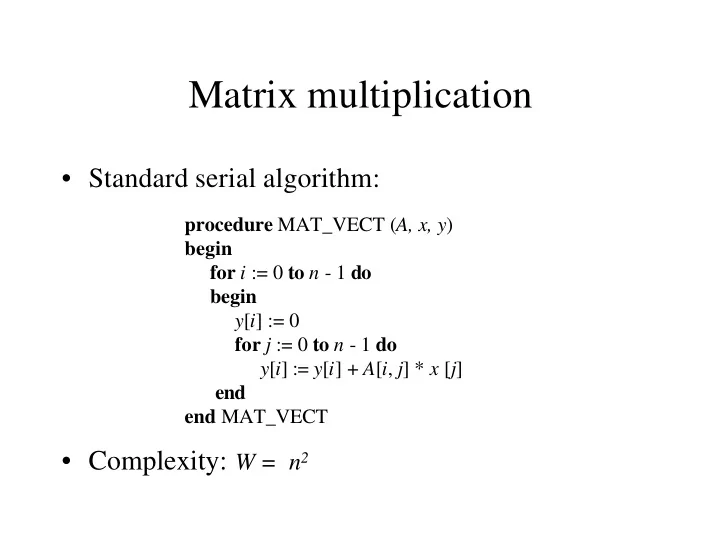

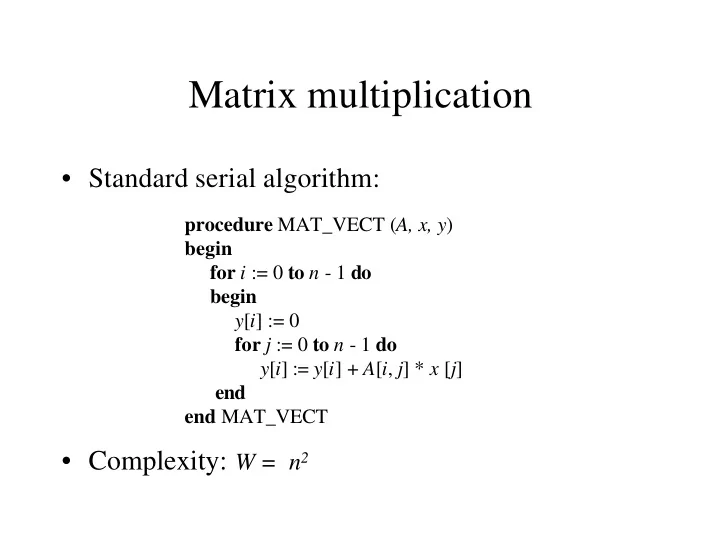

Matrix multiplication • Standard serial algorithm: procedure MAT_VECT ( A, x, y ) begin for i := 0 to n - 1 do begin y [ i ] := 0 for j := 0 to n - 1 do y [ i ] := y [ i ] + A [ i , j ] * x [ j ] end end MAT_VECT • Complexity: W = n 2

Matrix-Vector Multiplication (rowwise striping) Sequential run time: W = n 2 • • Simple case: p = n – A2A broadcast of vector elements: Θ ( n) – single row multiplication: Θ ( n) – Total time: Θ ( n) – processor-time product: Θ ( n 2 ) (cost-opt.) • General case: p < n – A2A broadcast of vector elements: • t s log p + t w n (hypercube) • 2 t s √ p + t w n (mesh) – row multiplication: Θ ( n 2 /p) – total time: • n 2 /p + t s log p + t w n (hypercube) • n 2 /p + 2 t s √ p + t w n (mesh)

Matrix-Vector Multiplication (checkerboard partitioning) Simple case: p = n 2 • – one-to-one comm. + one-2-all broadcast + single node accumul. per row + multipl. = = Θ ( n) + Θ ( n) + Θ ( n) + Θ (1) = Θ ( n) (mesh) = Θ (log n ) + Θ (log n ) + … = Θ (log n ) (hyper) – processor-time product: • Θ ( n 3 ) (mesh) • Θ ( n 2 log n ) (hypercube) General case: p < n 2 • – each processor stores a ( n √ p × n √ p ) block – Run time on mesh with CT: Θ ( n/ √ p ) + Θ (( n/ √ p ) log p ) + Θ (( n/ √ p ) log p ) – + Θ ( n 2 /p ) – Cost optimal as long as p = O ( n 2 / log 2 n )

Matrix multiplication • Standard serial algorithm: procedure MAT_MULT ( A, B, C ) begin for i := 0 to n - 1 do for j := 0 to n - 1 do begin C [ i, j ] := 0 for k := 0 to n - 1 do C [ i, j ] := C [ i, j ] + A [ i , k ] * B [ k , j ] end end MAT_MULT • Complexity: W = n 3

Matrix multiplication: a block matrix implementation • A parallelizable algorithm is derived from the serial one by replacing scalar operations with block matrix operations : procedure BLOCK_MAT_MULT( A, B, C ) begin for i := 0 to q - 1 do for j := 0 to q - 1 do begin Initialize all elements of C i,j to zero for k := 0 to q - 1 do C i,j := C i, j + A i , k * B k , j end end BLOCK_MAT_MULT • Number of scalar addition/moltiplications: n 3 – q 3 matrix multiplications each involving n/q × n/q elements and requiring ( n/q ) 3 multiplications and additions

Matrix multiplication: simple parallel implementation • The block matrix algorithm lends itself to implementation on a √ p × √ p mesh, with one block per processor: – processor P i, j initially holds A i, j and B i, j , and computes block C i,j , P i, j needs all blocks A i, k and B k, j for 0 ≤ k ≤ √ p , thus an A2A – broadcast is performed on each row, and one on each column – computation requires √ p multiplications of n/ √ p × n/ √ p matrices – Assuming a hypercube topology: • Tp = n 3 /p + t s log p + 2 t w n 2 / √ p – cost • n 3 + t s p log p + 2 t w n 2 √ p • cost-optimal for p = O( n 2 ) • A drawback of this algorithm is the excessive memory requirements - every processor has 2 √ p blocks of size n 2 /p

Matrix Multiplication: efficient algorithms • The simple parallel algorithm we saw last time is not memory efficient – every processor holds 2 √ p blocks of size n 2 /p -> total memory required is n 2 √ p which is √ p times the sequential alg. • More memory efficient algorithms exist – Cannon’s algorithm • rowwise (columnwise) rotation of submatrices instead of a2a broadcast • interleaving of communication and submatrix multiplication – Fox’s algorithm • rowwise broadcasts of each A i,j in turn, upward shifts of B i,

Systems of linear equations a 0,0 x 0 + a 0,1 x 1 + … + a 0,n-1 x n-1 = b 0 x 0 + u 0,1 x 1 + … + u 0,n-1 x n-1 = y 0 a 1,0 x 0 + a 1,1 x 1 + … + a 1,n-1 x n-1 = b 1 x 1 + … + u 1,n-1 x n-1 = y 1 . . . . . . . . . . . . . . . . . . Gaussian a n-1,0 x 0 + a n-1,1 x 1 + … + a n-1,n-1 x n-1 = b n-1 x n-1 = y n-1 elimination • Gaussian elimination is the classical serial algorithm for solving systems of linear equations: – First step: Ax = b transformed into Ux = y, where U is upper triangular and U[ i, i ] = 1 – Second step: system is solved for the x variables from x [n-1] to x [0] by back-substitution

Steps of the Gaussian elimination

Parallel Gaussian elimination: striped partitioning • Simple case: one row/processor – k th computation step: 3( n-k- 1) – Total computation: 3 n ( n -1)/2 – k th comm. step: ( t s + t w ( n-k -1))log n (on hypercube) – Tot. comm. : ( t s n + t w n(n- 1)/2)log n – Cost: Θ ( n 3 log n ) • not cost-optimal ( W = 2 n 3 /3) • A possible optimization; pipelining of the n iterations – row k +1 can start computation as soon as it gets data from row k – this optimized version of the alg. is cost- optimal

Parallel Gaussian elimination: striped partitioning • General case: p < n – block-striped partitioning • k th computation step: 3( n-k- 1) n/p (on proc. with max load) • k th comm. step: ( t s + t w ( n-k -1))log n (on hypercube) • => computation time dominates • total time: Θ ( n 3 p ) • cost: Θ ( n 3 ) • not very efficient because some processors are idle – cyclic-striped partitioning • better load balancing => less idle time

Parallel Gaussian elimination: blocked-checkerboard partitioning • Simple case: one element/processor – two communication steps required • rowwise broadcast • columnwise broadcast – Not cost-optimal • Usual optimization; pipelining of communication and computation – two communication steps => two opportunities for pipelining – this optimized version of the alg. is cost-optimal

Parallel Gaussian elimination: cyclic-checkerboard partitioning • Like for striped partitioning, an additional optimization is achieved by using cyclic- instead of block- partitioning due to improved load balancing among processors

Example of discretized physical domain • Physical system: temperature distribution on a sheet of metal held at temperature U 0 and U 1 on two sides – system is discretized by superimposing grid – temperature at each point is computed based on neighbor values

Recommend

More recommend