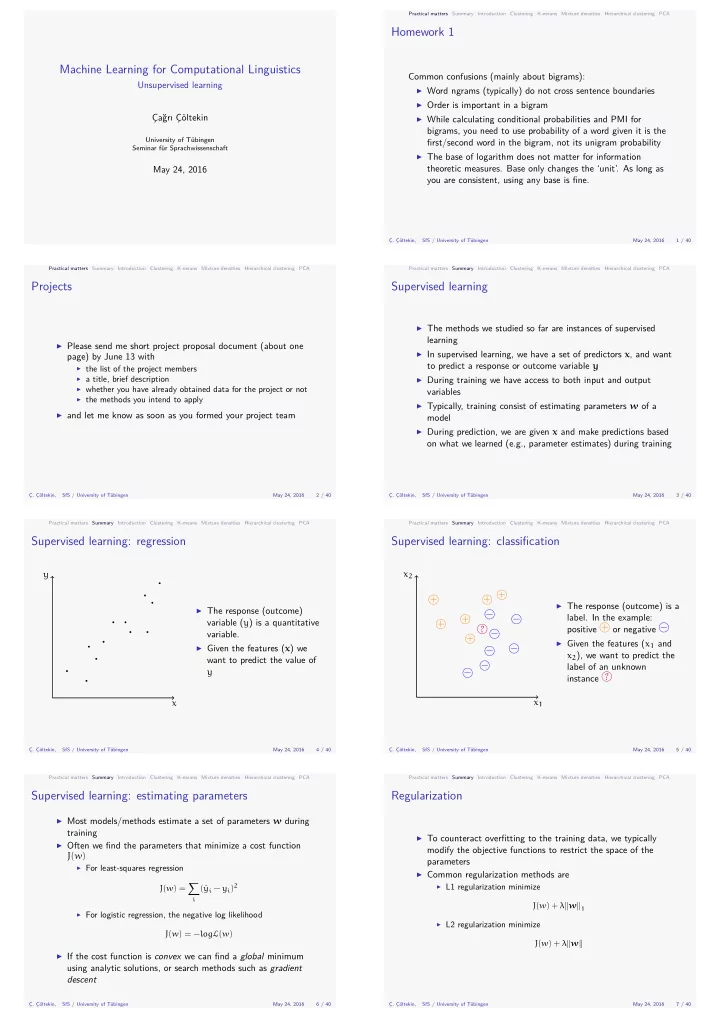

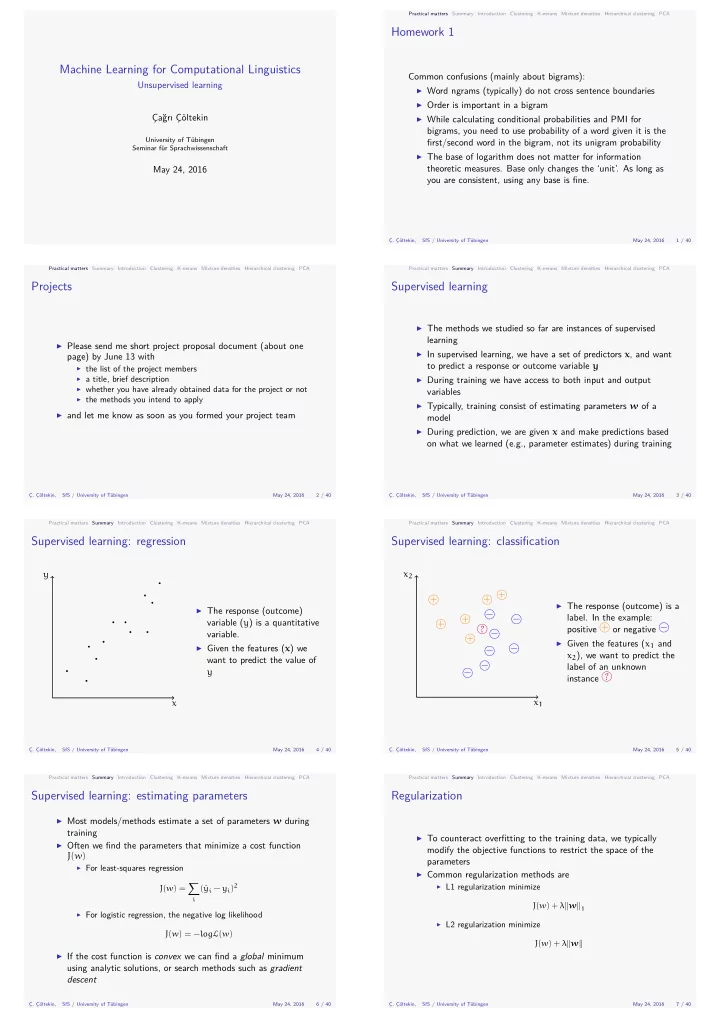

Machine Learning for Computational Linguistics
Unsupervised learning
Çağrı Çöltekin
University of Tübingen, Seminar für Sprachwissenschaft
May 24, 2016

Sections: Practical matters · Introduction · Clustering · K-means · Mixture densities · Hierarchical clustering · PCA · Summary

Homework 1

Common confusions (mainly about bigrams):
▶ Word n-grams (typically) do not cross sentence boundaries
▶ Order is important in a bigram
▶ While calculating conditional probabilities and PMI for bigrams, you need to use the probability of a word given that it is the first/second word in the bigram, not its unigram probability
▶ The base of the logarithm does not matter for information-theoretic measures. The base only changes the 'unit'. As long as you are consistent, using any base is fine.

Projects

▶ Please send me a short project proposal document (about one page) by June 13 with
  ▶ the list of the project members
  ▶ a title, brief description
  ▶ whether you have already obtained data for the project or not
  ▶ the methods you intend to apply
▶ and let me know as soon as you have formed your project team

Supervised learning

▶ The methods we studied so far are instances of supervised learning
▶ In supervised learning, we have a set of predictors $x$, and want to predict a response or outcome variable $y$
▶ During training we have access to both input and output variables
▶ Typically, training consists of estimating parameters $w$ of a model
▶ During prediction, we are given $x$ and make predictions based on what we learned (e.g., parameter estimates) during training

Supervised learning: regression

▶ The response (outcome) variable ($y$) is a quantitative variable
▶ Given the features ($x$) we want to predict the value of $y$

[Figure: scatter plot of $y$ against $x$]

Supervised learning: classification

▶ The response (outcome) is a label. In the example: positive $+$ or negative $-$
▶ Given the features ($x_1$ and $x_2$), we want to predict the label of an unknown instance $?$

[Figure: instances labeled $+$ and $-$ in the $x_1$, $x_2$ plane, with one unlabeled instance marked $?$]

Supervised learning: estimating parameters

▶ Most models/methods estimate a set of parameters $w$ during training
▶ Often we find the parameters that minimize a cost function $J(w)$
  ▶ For least-squares regression: $J(w) = \sum_i (\hat{y}_i - y_i)^2$
  ▶ For logistic regression, the negative log likelihood: $J(w) = -\log L(w)$
▶ If the cost function is convex we can find a global minimum using analytic solutions, or search methods such as gradient descent

Regularization

▶ To counteract overfitting to the training data, we typically modify the objective functions to restrict the space of the parameters
▶ Common regularization methods are
  ▶ L1 regularization: minimize $J(w) + \lambda \lVert w \rVert_1$
  ▶ L2 regularization: minimize $J(w) + \lambda \lVert w \rVert$
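The least-squares cost and the L2 penalty from the slides above can be combined and minimized with gradient descent. The following is only a minimal sketch under stated assumptions: the model is a line with an intercept, the penalty is the squared norm $\lambda \lVert w \rVert^2$ (chosen here because it is differentiable everywhere), the intercept is penalized too for simplicity, and the function names, learning rate, step count, and toy data are all hypothetical.

```python
def cost(w, xs, ys, lam):
    """Regularized least-squares cost for the model y_hat = w[0] + w[1] * x.

    Assumption: the penalty is the squared L2 norm, lam * ||w||^2.
    """
    sse = sum((w[0] + w[1] * x - y) ** 2 for x, y in zip(xs, ys))
    return sse + lam * (w[0] ** 2 + w[1] ** 2)


def gradient(w, xs, ys, lam):
    """Partial derivatives of the regularized cost with respect to w[0], w[1]."""
    residuals = [w[0] + w[1] * x - y for x, y in zip(xs, ys)]
    g0 = 2 * sum(residuals) + 2 * lam * w[0]
    g1 = 2 * sum(r * x for r, x in zip(residuals, xs)) + 2 * lam * w[1]
    return [g0, g1]


def gradient_descent(xs, ys, lam=0.0, rate=0.01, steps=5000):
    """Plain gradient descent from w = (0, 0); rate and steps are illustrative."""
    w = [0.0, 0.0]
    for _ in range(steps):
        g = gradient(w, xs, ys, lam)
        w = [w[0] - rate * g[0], w[1] - rate * g[1]]
    return w


# Toy data generated from y = 1 + 2x (no noise).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w_plain = gradient_descent(xs, ys, lam=0.0)  # unregularized fit
w_ridge = gradient_descent(xs, ys, lam=1.0)  # L2-regularized fit
```

Because the cost is convex, gradient descent with a small enough learning rate approaches the global minimum. With `lam=0.0` the estimate recovers the generating line on this toy data, while `lam=1.0` shrinks the parameter vector towards zero, which is the restriction of the parameter space that regularization is meant to provide.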

Unsupervised learning

▶ In unsupervised learning, we do not have labels
▶ Our aim is to find useful patterns/structure in the data
▶ Typical unsupervised methods include
  ▶ Clustering: find related groups of instances
  ▶ Density estimation: find a probability distribution that explains the data
  ▶ Dimensionality reduction: find an accurate/useful lower dimensional representation of the data
▶ Evaluation is difficult: we do not have 'true' labels/values
▶ Sometimes unsupervised methods can be used in conjunction with the supervised methods

Clustering

▶ Our aim is to find groups of instances/items that are similar to each other
▶ Clustering similar languages, dialects, documents, users/authors …
▶ The distance measure is important (but also application specific)
▶ Clustering can be hierarchical or non-hierarchical
▶ Clustering can be bottom-up (agglomerative) or top-down (divisive)
▶ For most (useful) problems we cannot find globally optimum solutions; we often rely on greedy algorithms finding local optima

Clustering example in two dimensions

▶ Unlike classification we do not have labels
▶ We want to find 'natural' groups in the data
▶ Intuitively, similar or closer data points are grouped together

[Figure: unlabeled data points in the $x_1$, $x_2$ plane]

Similarity and distance

▶ The notion of distance (similarity) is very important in clustering. A distance measure $D$
  ▶ is symmetric: $D(a, b) = D(b, a)$
  ▶ is non-negative: $D(a, b) \geq 0$ for all $a, b$, and $D(a, b) = 0$ iff $a = b$
  ▶ obeys the triangle inequality: $D(a, b) + D(b, c) \geq D(a, c)$
▶ The choice of distance is application specific
▶ A few common choices:
  ▶ Euclidean distance: $\lVert a - b \rVert = \sqrt{\sum_{j=1}^{k} (a_j - b_j)^2}$
  ▶ Manhattan distance: $\lVert a - b \rVert_1 = \sum_{j=1}^{k} |a_j - b_j|$
▶ We will often be faced with defining distance measures between linguistic units (letters, words, sentences, documents, …)

How to do clustering

Most clustering algorithms try to minimize the scatter within each cluster,

$$\frac{1}{2} \sum_{k=1}^{K} \sum_{C(a)=k} \sum_{C(b)=k} d(a, b)$$

which is equivalent to maximizing the scatter between clusters:

$$\frac{1}{2} \sum_{k=1}^{K} \sum_{C(a)=k} \sum_{C(b) \neq k} d(a, b)$$

Exact solution (finding the global optimum) is not possible for realistic data. We use methods that find a local minimum.

K-means clustering

K-means is a popular method for clustering.

1. Randomly choose centroids, $m_1, \ldots, m_K$, representing $K$ clusters
2. Repeat until convergence
  ▶ Assign each data point to the cluster of the nearest centroid
  ▶ Re-calculate the centroid locations based on the assignments

Effectively, we are finding a local minimum of the sum of squared Euclidean distances within each cluster:

$$\frac{1}{2} \sum_{k=1}^{K} \sum_{C(a)=k} \sum_{C(b)=k} \lVert a - b \rVert^2$$

K-means clustering: visualization

▶ The data
▶ Set cluster centroids randomly
▶ Assign data points to closest centroid
▶ Recalculate the centroids

[Figure: two-dimensional data points with cluster centroids, both axes from 0 to 5]
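The two K-means steps above (assign each point to the nearest centroid, then recalculate the centroids) can be sketched in a few lines. This is an illustrative sketch, not the lecture's own code: it assumes the initial centroids are drawn from the data points themselves, uses squared Euclidean distance, and keeps the old centroid if a cluster becomes empty; the function names, the `seed` parameter, and the toy data are hypothetical.

```python
import random


def squared_euclidean(a, b):
    """Squared Euclidean distance between two equal-length points."""
    return sum((aj - bj) ** 2 for aj, bj in zip(a, b))


def kmeans(points, k, max_iter=100, seed=0):
    """Return (centroids, assignment) after running K-means on a list of tuples."""
    rng = random.Random(seed)
    # Step 1: choose K distinct data points at random as initial centroids
    # (an assumption; other initialization schemes exist).
    centroids = rng.sample(points, k)
    assignment = None
    for _ in range(max_iter):
        # Step 2a: assign each point to the cluster of the nearest centroid.
        new_assignment = [
            min(range(k), key=lambda c: squared_euclidean(p, centroids[c]))
            for p in points
        ]
        if new_assignment == assignment:
            break  # converged: no point changed cluster
        assignment = new_assignment
        # Step 2b: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(
                    sum(column) / len(members) for column in zip(*members)
                )
    return centroids, assignment


# Toy data: two well-separated groups in two dimensions.
data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(data, k=2)
```

On this toy data the two groups are far enough apart that the procedure separates them regardless of which points are drawn as initial centroids. On realistic data the result is only a local minimum and depends on the initialization, so a common practice is to run the algorithm several times with different seeds and keep the solution with the smallest within-cluster sum of squared distances.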
