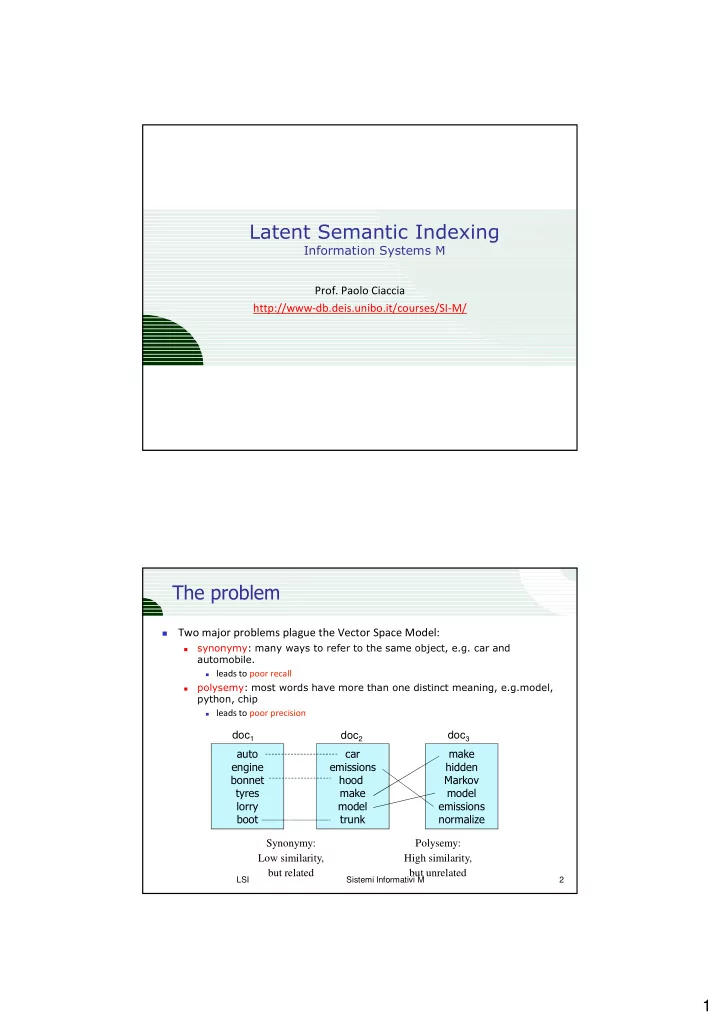

Latent Semantic Indexing Information Systems M Prof. Paolo Ciaccia http://www-db.deis.unibo.it/courses/SI-M/ ����������� Two major problems plague the Vector Space Model: � synonymy: many ways to refer to the same object, e.g. car and � automobile. � leads to poor recall polysemy: most words have more than one distinct meaning, e.g.model, � python, chip � leads to poor precision doc 1 doc 2 doc 3 ���� ��� ���� ������ ��������� ������ ������ ����� ������ ����� ���� ����� ����� ����� ��������� ���� ����� ��������� Synonymy: Polysemy: Low similarity, High similarity, but related but unrelated LSI Sistemi Informativi M 2 1

������������������������ Latent Semantic Indexing (LSI), also known as Latent Semantic Analysis (LSA) � when not applied to IR, was proposed at the end of 80’s as a way to solve such problem, http://lsi.argreenhouse.com/lsi/LSI.html The basic observation is that terms are an unreliable means to assess the � relevance of a document wrt to a query Because of synonymy and polysemy � Thus, one would like to represent document using a more semantically � accurate way, i.e., in terms of “concepts” LSI achieves this by analyzing the whole term-document matrrix W , � and by projecting it in a lower-dimensional “latent” space spanned by relevant “concepts” More precisely, LSI uses a linear algebra technique, called Singular Value � Decomposition (SVD), before performing dimensionality reduction LSI Sistemi Informativi M 3 ������������������������������������ Given a square m × m matrix S , a non-null vector v is an eigenvector of S if � there exists a scalar λ, called the eigenvalue of v , such that: ������� Sv = λ v The linear transformation associated to S does not change the directions of � eigenvectors, which are just stretched/shrinked by an amount given by the corresponding eigenvalue There are at most m distinct eigenvalues, which are solutions of the � characteristic equation Det( S - λ I ) = 0 For each eigenvalue, there are infinite corresponding eigenvectors � If � is an eigenvector, so it is k � , k ≠ 0 � Thus, we can consider normalized eigenvectors, ∥v v v v∥=1 � LSI Sistemi Informativi M 4 2

�������������������������������� If S is a real and symmetric matrix, then � All its eigenvalues are real � All the (normalized) eigenvectors of distinct eigenvalues are mutually � orthogonal (thus, linearly independent) 1 / 2 λ v = ; = 3 2 1 1 1 1 / 2 λ I λ 2 − = − − = S = | S | ( 2 ) 1 0 . 1 2 1 / 2 λ = = v ; 1 2 2 − 1 / 2 If S has m linearly independent eigenvectors, then it can be written as � m ∑ λ s = u × × u Λ U T S = U Λ Λ Λ i, j i, c c j, c where: c = 1 Λ is a diagonal matrix, Λ Λ = diag(λ 1 , λ 2 ,…, λ m ), with λ 1 ≥ λ 2 ≥ … ≥ λ m Λ Λ Λ Λ Λ � The columns of � are the corresponding eigenvectors of � � � U is a column-orthonormal matrix LSI Sistemi Informativi M 5 ������� 2 1 3 0 1 / 2 1 / 2 1 / 2 1 / 2 = = S 1 2 − 0 1 − 1 / 2 1 / 2 1 / 2 1 / 2 Λ Λ Λ Λ U T U m ∑ λ s = u × × u ; i, j i, c c j, c c = 1 m ∑ λ λ λ s = u × × u = u × × u + u × × u 1,2 1, c c 2, c 1,1 1 2,1 1,2 2 2,2 c = 1 = × × + × × − = − = 1 / 2 3 1 / 2 1 / 2 1 1 / 2 3 / 2 1 / 2 1 LSI Sistemi Informativi M 6 3

��������� �����!������������ Consider the M × N term-document weight matrix W � If W has rank r ≤ min{M,N}, then W can be factorized as: � r ∑ λ = × × Λ D T w t d W = T Λ Λ Λ i, j i, c c j, c = where: c 1 � is an M × r column.orthonormal matrix ( � T � = � ), � Λ is an r × r diagonal matrix Λ Λ Λ � � is an N × r column.orthonormal matrix ( � T � = � ) � Λ Λ Λ Λ is also called the “concept matrix” � T is the “term-concept similarity matrix” � D is the “document-concept similarity matrix” � LSI Sistemi Informativi M 7 ������������� SVD represents both terms and documents using a set of latent concepts � r ∑ λ w = t × × d i, j i, c c j, c = c 1 This just says that the weight of t i in doc j is expressed as a � “linear combination of term-concept and doc-concept weights” LSI Sistemi Informativi M 8 4

��������"#$ Consider the 12 × 9 weight matrix below, whose rank is r = 9, in which two � “groups” of documents are present W C1 C2 C3 C4 C5 G1 G2 G3 G4 Human 1 0 0 1 0 0 0 0 0 Interface 1 0 1 0 0 0 0 0 0 Computer 1 1 0 0 0 0 0 0 0 User 0 1 1 0 1 0 0 0 0 System 0 1 1 2 0 0 0 0 0 Response 0 1 0 0 1 0 0 0 0 Time 0 1 0 0 1 0 0 0 0 EPS 0 0 1 1 0 0 0 0 0 Survey 0 1 0 0 0 0 0 0 1 Tree 0 0 0 0 0 1 1 1 0 Graph 0 0 0 0 0 0 1 1 1 Minors 0 0 0 0 0 0 0 1 1 LSI Sistemi Informativi M 9 ��������"%$ It is Λ Λ = diag(3.34,2.54,2.35,1.64,1.50,1.31,0.85,0.56,0.35) Λ Λ � T D 0.22 -0.11 0.29 -0.41 -0.11 -0.34 0.52 -0.06 -0.41 0.20 -0.06 0.11 -0.95 0.05 0.08 0.18 -0.01 -0.06 0.20 -0.07 0.14 -0.55 0.28 0.50 -0.07 -0.01 -0.11 0.61 0.17 -0.50 -0.03 -0.21 -0.26 -0.43 0.05 0.24 0.24 0.04 -0.16 -0.59 -0.11 -0.25 -0.30 0.06 0.49 0.46 -0.13 0.21 0.04 0.38 0.72 -0.24 0.01 0.02 0.54 -0.23 0.57 0.27 -0.21 -0.37 0.26 -0.02 -0.08 0.40 0.06 -0.34 0.10 0.33 0.38 0.00 0.00 0.01 0.28 0.11 -0.51 0.15 0.33 0.03 0.67 -0.06 -0.26 0.64 -0.17 0.36 0.33 -0.16 -0.21 -0.17 0.03 0.27 0.00 0.19 0.10 0.02 0.39 -0.30 -0.34 0.45 -0.62 0.27 0.11 -0.43 0.07 0.08 -0.17 0.28 -0.02 -0.05 0.01 0.44 0.19 0.02 0.35 -0.21 -0.15 -0.76 0.02 0.27 0.11 -0.43 0.07 0.08 -0.17 0.28 -0.02 -0.05 0.02 0.62 0.25 0.01 0.15 0.00 0.25 0.45 0.52 0.30 -0.14 0.33 0.19 0.11 0.27 0.03 -0.02 -0.17 0.08 0.53 0.08 -0.03 -0.60 0.36 0.04 -0.07 -0.45 0.21 0.27 -0.18 -0.03 -0.54 0.08 -0.47 -0.04 -0.58 0.01 0.49 0.23 0.03 0.59 -0.39 -0.29 0.25 -0.23 0.04 0.62 0.22 0.00 -0.07 0.11 0.16 -0.68 0.23 0.03 0.45 0.14 -0.01 -0.30 0.28 0.34 0.68 0.18 LSI Sistemi Informativi M 10 5

� !����������������"#$ Since both T and D are column-orthonormal matrices, it is � W W T = ( T Λ Λ D T ) T = ( T Λ Λ D T )( T Λ Λ D T )( D Λ Λ T T T ) Λ Λ Λ Λ Λ Λ Λ Λ = T Λ Λ 2 T T Λ Λ W T W = ( D Λ Λ T T T )( T Λ Λ D T ) Λ Λ Λ Λ Λ 2 D T = D Λ Λ Λ ��� T is the (real and symmetric) M × M term.term similarity matrix, and the � columns of � are the eigenvectors of such matrix � T �� is the N × N document.document similarity matrix, and the columns of � � are the eigenvectors of such matrix Λ 2 is a matrix with the eigenvalues of ��� T (and � T � ) Λ Λ Λ � LSI Sistemi Informativi M 11 � !����������������"%$ T T W = Λ Λ D T Λ Λ Since it is � we can view this as a “projection” of documents (columns of W ) in the r-dimensional “concept space” spanned by T columns (i.e., T T rows) Λ D T In this space, documents are represented by the columns of Λ Λ Λ � (i.e., rows of D Λ Λ Λ ) Λ It follows that W T W = ( D Λ Λ D T ) amounts to compute the similarity Λ Λ Λ )( Λ Λ Λ � between documents as the inner product in this r-dimensional latent semantic space Similarly, in this space terms are represented by by the columns of Λ Λ T T Λ Λ � LSI Sistemi Informativi M 12 6

Recommend

More recommend