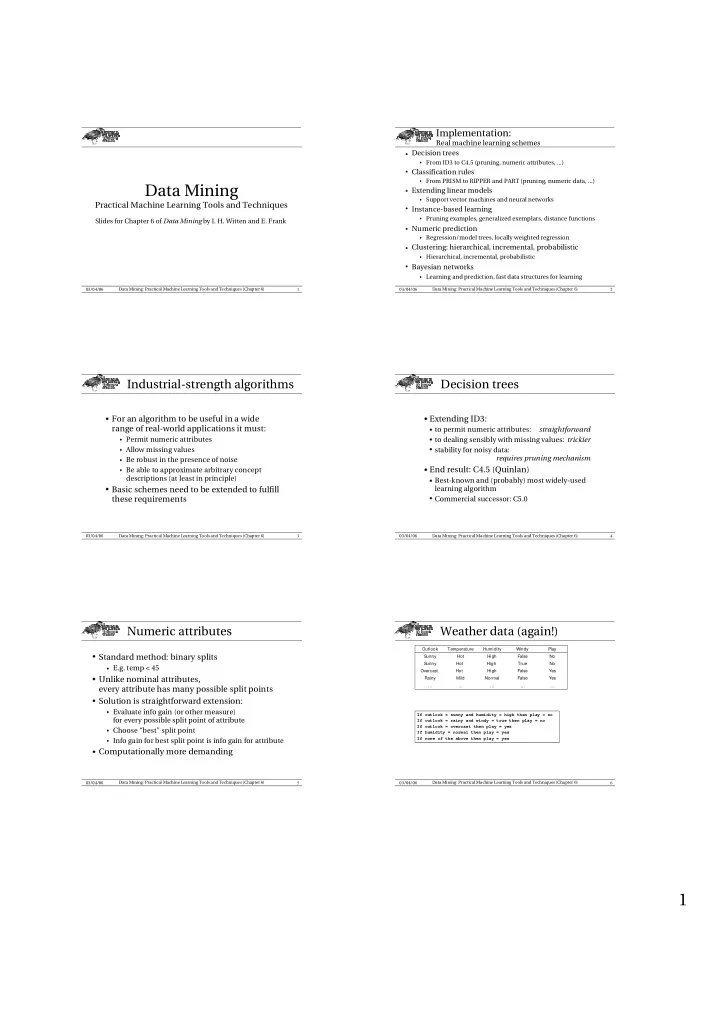

Data Mining: Practical Machine Learning Tools and Techniques

Slides for Chapter 6 of Data Mining by I. H. Witten and E. Frank

03/04/06 Data Mining: Practical Machine Learning Tools and Techniques (Chapter 6)

Implementation: Real machine learning schemes

- Decision trees
  - From ID3 to C4.5 (pruning, numeric attributes, ...)
- Classification rules
  - From PRISM to RIPPER and PART (pruning, numeric data, ...)
- Extending linear models
  - Support vector machines and neural networks
- Instance-based learning
  - Pruning examples, generalized exemplars, distance functions
- Numeric prediction
  - Regression/model trees, locally weighted regression
- Clustering
  - Hierarchical, incremental, probabilistic
- Bayesian networks
  - Learning and prediction, fast data structures for learning

Industrial-strength algorithms

- For an algorithm to be useful in a wide range of real-world applications it must:
  - Permit numeric attributes
  - Allow missing values
  - Be robust in the presence of noise
  - Be able to approximate arbitrary concept descriptions (at least in principle)
- Basic schemes need to be extended to fulfill these requirements

Decision trees

- Extending ID3:
  - to permit numeric attributes: straightforward
  - to deal sensibly with missing values: trickier
  - stability for noisy data: requires a pruning mechanism
- End result: C4.5 (Quinlan)
  - Best-known and (probably) most widely used learning algorithm
  - Commercial successor: C5.0

Numeric attributes

- Standard method: binary splits
  - E.g. temp < 45
- Unlike nominal attributes, every numeric attribute has many possible split points
- Solution is a straightforward extension:
  - Evaluate info gain (or another measure) for every possible split point of the attribute
  - Choose the “best” split point
  - Info gain for the best split point is the info gain for the attribute
- Computationally more demanding

Weather data (again!)

| Outlook | Temperature | Humidity | Windy | Play |
|---|---|---|---|---|
| Sunny | Hot | High | False | No |
| Sunny | Hot | High | True | No |
| Overcast | Hot | High | False | Yes |
| Rainy | Mild | Normal | False | Yes |
| … | … | … | … | … |

- If outlook = sunny and humidity = high then play = no
- If outlook = rainy and windy = true then play = no
- If outlook = overcast then play = yes
- If humidity = normal then play = yes
- If none of the above then play = yes
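
The split-point search described above can be sketched in Python as follows. This is a minimal illustration, not C4.5's code: it assumes entropy (information in bits) as the measure, and the helper names `entropy`, `split_info` and `best_binary_split` are hypothetical. After one sort, a single left-to-right scan updates the class counts on each side, so every candidate threshold (halfway between adjacent distinct values) is evaluated in one pass. The example values are the numeric temperature column and class labels of the weather data used in the worked example of these slides.

```python
import math
from collections import Counter


def entropy(counts):
    """Information (entropy) in bits of a class distribution given as counts."""
    total = sum(counts)
    return -sum(c / total * math.log2(c / total) for c in counts if c > 0)


def split_info(left, right):
    """Weighted information of a binary split, e.g. info([4,2],[5,3])."""
    n = sum(left) + sum(right)
    return sum(left) / n * entropy(left) + sum(right) / n * entropy(right)


def best_binary_split(values, labels):
    """Scan all thresholds of one numeric attribute; return (threshold, info).

    The threshold with the lowest weighted information has the highest gain.
    """
    pairs = sorted(zip(values, labels))   # O(n log n) sort, done once
    classes = sorted(set(labels))
    total = Counter(labels)
    left = Counter()                      # class counts below the threshold
    best = (None, math.inf)
    for i in range(len(pairs) - 1):
        value, label = pairs[i]
        left[label] += 1                  # move instance i to the left side
        next_value = pairs[i + 1][0]
        if value == next_value:
            continue                      # no split point between equal values
        left_counts = [left[c] for c in classes]
        right_counts = [total[c] - left[c] for c in classes]
        info = split_info(left_counts, right_counts)
        if info < best[1]:
            best = ((value + next_value) / 2, info)  # halfway between values
    return best


temperature = [64, 65, 68, 69, 70, 71, 72, 72, 75, 75, 80, 81, 83, 85]
play = ["yes", "no", "yes", "yes", "yes", "no", "no",
        "yes", "yes", "yes", "no", "yes", "yes", "no"]
print(round(split_info([4, 2], [5, 3]), 3))  # 0.939
print(best_binary_split(temperature, play))
```

Minimizing the weighted information of the split is equivalent to maximizing info gain, because the information of the unsplit node is the same for every candidate threshold.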

Weather data (again!)

Numeric version of the same data (the nominal table and rules are as on the previous slide):

| Outlook | Temperature | Humidity | Windy | Play |
|---|---|---|---|---|
| Sunny | 85 | 85 | False | No |
| Sunny | 80 | 90 | True | No |
| Overcast | 83 | 86 | False | Yes |
| Rainy | 75 | 80 | False | Yes |
| … | … | … | … | … |

- If outlook = sunny and humidity > 83 then play = no
- If outlook = rainy and windy = true then play = no
- If outlook = overcast then play = yes
- If humidity < 85 then play = yes
- If none of the above then play = yes

Example

Split on the temperature attribute:

| Temperature | 64 | 65 | 68 | 69 | 70 | 71 | 72 | 72 | 75 | 75 | 80 | 81 | 83 | 85 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Play | Yes | No | Yes | Yes | Yes | No | No | Yes | Yes | Yes | No | Yes | Yes | No |

- E.g. temperature < 71.5: yes/4, no/2; temperature ≥ 71.5: yes/5, no/3
- $\mathrm{info}([4,2],[5,3]) = \frac{6}{14}\,\mathrm{info}([4,2]) + \frac{8}{14}\,\mathrm{info}([5,3]) = 0.939$ bits
- Place split points halfway between values
- Can evaluate all split points in one pass!

Can avoid repeated sorting

- Sort instances by the values of the numeric attribute
  - Time complexity for sorting: $O(n \log n)$
- Does this have to be repeated at each node of the tree?
- No! Sort order for children can be derived from sort order for parent
  - Time complexity of derivation: $O(n)$
  - Drawback: need to create and store an array of sorted indices for each numeric attribute

Binary vs multiway splits

- Splitting (multi-way) on a nominal attribute exhausts all information in that attribute
  - Nominal attribute is tested (at most) once on any path in the tree
- Not so for binary splits on numeric attributes!
  - Numeric attribute may be tested several times along a path in the tree
- Disadvantage: tree is hard to read
- Remedy:
  - pre-discretize numeric attributes, or
  - use multi-way splits instead of binary ones

Computing multi-way splits

- Simple and efficient way of generating multi-way splits: greedy algorithm
- Dynamic programming can find the optimum multi-way split in $O(n^2)$ time
  - $\mathrm{imp}(k, i, j)$ is the impurity of the best split of values $x_i \ldots x_j$ into $k$ sub-intervals
  - $\mathrm{imp}(k, 1, i) = \min_{0<j<i} \left[ \mathrm{imp}(k-1, 1, j) + \mathrm{imp}(1, j+1, i) \right]$
  - $\mathrm{imp}(k, 1, N)$ gives us the best $k$-way split
- In practice, the greedy algorithm works as well

Missing values

- Split instances with missing values into pieces
  - A piece going down a branch receives a weight proportional to the popularity of the branch
  - Weights sum to 1
- Info gain works with fractional instances
  - Use sums of weights instead of counts
- During classification, split the instance into pieces in the same way
  - Merge probability distributions using weights

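The dynamic program for multi-way splits can be sketched as follows, under stated assumptions: impurity is taken to be count-weighted entropy in bits (so the impurities of adjacent intervals simply add), indices are 0-based rather than the slides' 1-based $x_1 \ldots x_N$, and cuts are allowed only between distinct attribute values. The function names are hypothetical. Precomputing all single-interval impurities costs O(n²), and filling the table costs O(k·n²), which is O(n²) for a fixed k.

```python
import math
from collections import Counter


def interval_impurities(labels):
    """single[a][b] = imp(1, a, b): count-weighted entropy (bits) of labels[a..b].

    Built in O(n^2) by extending each interval one value at a time.
    """
    n = len(labels)
    single = [[0.0] * n for _ in range(n)]
    for a in range(n):
        counts = Counter()
        for b in range(a, n):
            counts[labels[b]] += 1
            size = b - a + 1
            single[a][b] = -sum(c * math.log2(c / size) for c in counts.values())
    return single


def best_k_way_split(labels, values, k):
    """Optimal k-way split of instances already sorted by the numeric attribute.

    imp[m][i] is the impurity of the best split of labels[0..i] into m intervals:
    imp[m][i] = min_j imp[m-1][j] + imp(1, j+1, i).
    Assumes at least k distinct values. Returns (impurity, interval start indices).
    """
    n = len(labels)
    single = interval_impurities(labels)
    imp = [[math.inf] * n for _ in range(k + 1)]
    back = [[None] * n for _ in range(k + 1)]
    imp[1] = single[0][:]                 # one interval covering labels[0..i]
    for m in range(2, k + 1):
        for i in range(m - 1, n):
            for j in range(m - 2, i):     # last interval is labels[j+1..i]
                if values[j] == values[j + 1]:
                    continue              # cannot cut between equal values
                cand = imp[m - 1][j] + single[j + 1][i]
                if cand < imp[m][i]:
                    imp[m][i], back[m][i] = cand, j
    # Walk the back-pointers to recover where each new interval starts.
    cuts, i = [], n - 1
    for m in range(k, 1, -1):
        j = back[m][i]
        cuts.append(j + 1)
        i = j
    return imp[k][n - 1], sorted(cuts)


print(best_k_way_split(list("aabbaa"), [1, 2, 3, 4, 5, 6], 3))
```

For the toy class sequence a a b b a a, the only zero-impurity 3-way split starts new intervals at indices 2 and 4, which is what the back-pointers recover.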
Pruning

- Prevent overfitting to noise in the data
- “Prune” the decision tree
- Two strategies:
  - Postpruning: take a fully-grown decision tree and discard unreliable parts
  - Prepruning: stop growing a branch when information becomes unreliable
- Postpruning is preferred in practice because prepruning can “stop early”

Prepruning

- Based on a statistical significance test
  - Stop growing the tree when there is no statistically significant association between any attribute and the class at a particular node
- Most popular test: chi-squared test
- ID3 used the chi-squared test in addition to information gain
  - Only statistically significant attributes were allowed to be selected by the information gain procedure

Early stopping

|   | a | b | class |
|---|---|---|---|
| 1 | 0 | 0 | 0 |
| 2 | 0 | 1 | 1 |
| 3 | 1 | 0 | 1 |
| 4 | 1 | 1 | 0 |

- Prepruning may stop the growth process prematurely: early stopping
- Classic example: XOR/parity problem
  - No individual attribute exhibits any significant association with the class
  - Structure is only visible in the fully expanded tree
  - Prepruning won’t expand the root node
- But: XOR-type problems are rare in practice
- And: prepruning is faster than postpruning

Postpruning

- First, build the full tree
- Then, prune it
  - Fully-grown tree shows all attribute interactions
- Problem: some subtrees might be due to chance effects
- Two pruning operations:
  - Subtree replacement
  - Subtree raising
- Possible strategies:
  - error estimation
  - significance testing
  - MDL principle

Subtree replacement

- Bottom-up
- Consider replacing a tree only after considering all its subtrees

Subtree raising

- Delete node
- Redistribute instances
- Slower than subtree replacement (worthwhile?)
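
The prepruning test and the XOR example can be combined in a short sketch: compute Pearson's chi-squared statistic for each attribute against the class, and refuse to split if none exceeds the critical value. The function name `chi_squared` is hypothetical; 3.841 is the standard chi-squared critical value for one degree of freedom at the 5% level, which applies here because each attribute and the class are binary.

```python
from collections import Counter


def chi_squared(xs, ys):
    """Pearson's chi-squared statistic for the contingency table of xs vs ys."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    row, col = Counter(xs), Counter(ys)
    stat = 0.0
    for x in row:
        for y in col:
            expected = row[x] * col[y] / n
            stat += (joint[(x, y)] - expected) ** 2 / expected
    return stat


CRITICAL_5PCT_DF1 = 3.841  # chi-squared critical value, 1 degree of freedom, 5% level

# XOR data from the early-stopping slide: class = a XOR b
a = [0, 0, 1, 1]
b = [0, 1, 0, 1]
cls = [0, 1, 1, 0]

print(chi_squared(a, cls), chi_squared(b, cls))  # 0.0 0.0
significant = [name for name, xs in (("a", a), ("b", b))
               if chi_squared(xs, cls) > CRITICAL_5PCT_DF1]
print(significant)  # [] -> prepruning would not expand the root node
print(chi_squared(list(zip(a, b)), cls))  # 4.0: the pair (a, b) determines the class
```

Both attributes score exactly 0 because each value of a (or b) occurs once with each class, so observed and expected counts coincide. Only the combination of a and b carries the structure, and a test on individual attributes at the root cannot see it.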
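
Bottom-up subtree replacement can be sketched as a post-order traversal. The slides leave the error estimate open (error estimation, significance testing, MDL); this sketch assumes each node already stores the number of errors it would make as a leaf on a separate pruning set, which corresponds to reduced-error pruning rather than C4.5's own estimate. The `Node` class, `prune` function and example tree are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    leaf_errors: int                      # pruning-set errors if this node were a leaf
    children: list = field(default_factory=list)

    def is_leaf(self):
        return not self.children


def prune(node):
    """Bottom-up subtree replacement; returns the subtree's errors after pruning."""
    if node.is_leaf():
        return node.leaf_errors
    # Children are pruned first, so all subtrees are considered before this node.
    subtree_errors = sum(prune(child) for child in node.children)
    if node.leaf_errors <= subtree_errors:
        node.children = []                # replace the whole subtree by a leaf
        return node.leaf_errors
    return subtree_errors


tree = Node(5, [Node(2, [Node(0), Node(1)]), Node(3, [Node(2), Node(2)])])
errors = prune(tree)
print(errors)  # 4: the second subtree is replaced, the first and the root are kept
```

Ties are resolved in favour of the leaf, which prefers the smaller tree when the estimated errors are equal.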
