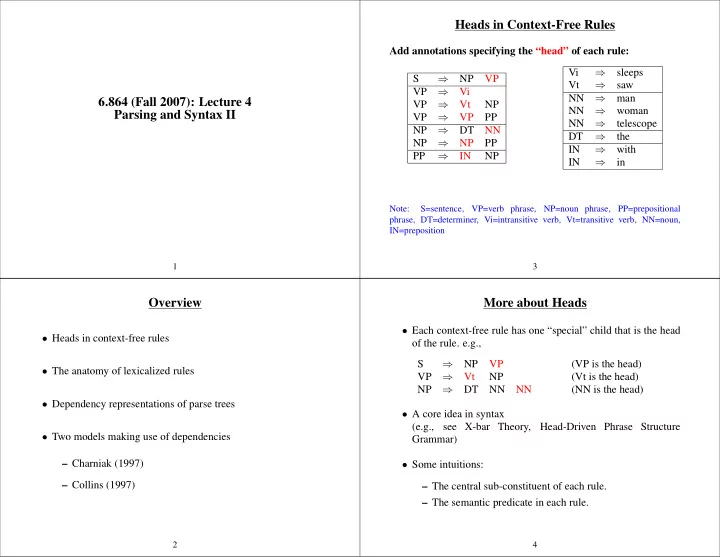

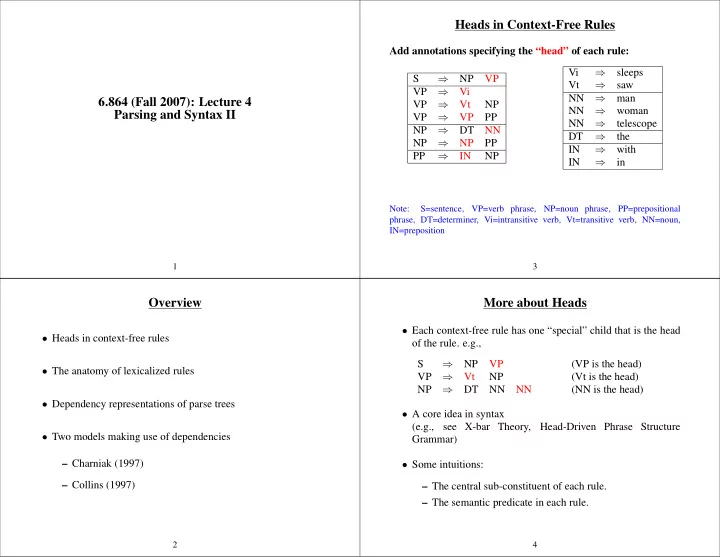

6.864 (Fall 2007): Lecture 4
Parsing and Syntax II

Overview

• Heads in context-free rules
• The anatomy of lexicalized rules
• Dependency representations of parse trees
• Two models making use of dependencies
  – Charniak (1997)
  – Collins (1997)

Heads in Context-Free Rules

Add annotations specifying the “head” of each rule:

S ⇒ NP VP (VP is the head)
VP ⇒ Vi (Vi is the head)
VP ⇒ Vt NP (Vt is the head)
VP ⇒ VP PP (VP is the head)
NP ⇒ DT NN (NN is the head)
NP ⇒ NP PP (NP is the head)
PP ⇒ IN NP (IN is the head)

Vi ⇒ sleeps
Vt ⇒ saw
NN ⇒ man
NN ⇒ woman
NN ⇒ telescope
DT ⇒ the
IN ⇒ with
IN ⇒ in

Note: S=sentence, VP=verb phrase, NP=noun phrase, PP=prepositional phrase, DT=determiner, Vi=intransitive verb, Vt=transitive verb, NN=noun, IN=preposition

More about Heads

• Each context-free rule has one “special” child that is the head of the rule. e.g.,
  S ⇒ NP VP (VP is the head)
  VP ⇒ Vt NP (Vt is the head)
  NP ⇒ DT NN NN (NN is the head)
• A core idea in syntax (e.g., see X-bar Theory, Head-Driven Phrase Structure Grammar)
• Some intuitions:
  – The central sub-constituent of each rule.
  – The semantic predicate in each rule.

Rules which Recover Heads: An Example of rules for NPs

If the rule contains NN, NNS, or NNP: Choose the rightmost NN, NNS, or NNP
Else If the rule contains an NP: Choose the leftmost NP
Else If the rule contains a JJ: Choose the rightmost JJ
Else If the rule contains a CD: Choose the rightmost CD
Else Choose the rightmost child

e.g.,
NP ⇒ DT NNP NN
NP ⇒ DT NN NNP
NP ⇒ NP PP
NP ⇒ DT JJ
NP ⇒ DT

Rules which Recover Heads: An Example of rules for VPs

If the rule contains Vi or Vt: Choose the leftmost Vi or Vt
Else If the rule contains a VP: Choose the leftmost VP
Else Choose the leftmost child

e.g.,
VP ⇒ Vt NP
VP ⇒ VP PP

Adding Headwords to Trees

(S (NP (DT the) (NN lawyer)) (VP (Vt questioned) (NP (DT the) (NN witness))))

⇓

(S(questioned) (NP(lawyer) (DT(the) the) (NN(lawyer) lawyer)) (VP(questioned) (Vt(questioned) questioned) (NP(witness) (DT(the) the) (NN(witness) witness))))

• A constituent receives its headword from its head child.
  S ⇒ NP VP (S receives headword from VP)
  VP ⇒ Vt NP (VP receives headword from Vt)
  NP ⇒ DT NN (NP receives headword from NN)
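The head rules and the headword propagation above can be sketched in a few lines of Python. This is a minimal illustration, not the lecture's implementation: the function names, the tuple encoding of trees, and the fallback for labels other than NP, VP and S are assumptions made for the example. Only the NP and VP priority lists and the statement that VP heads S ⇒ NP VP come from the slides.

```python
def np_head_index(children):
    """Head position for an NP rule, following the NP priority list."""
    # (candidate labels, take_rightmost) in priority order
    priorities = [
        ({"NN", "NNS", "NNP"}, True),
        ({"NP"}, False),
        ({"JJ"}, True),
        ({"CD"}, True),
    ]
    for labels, rightmost in priorities:
        matches = [i for i, c in enumerate(children) if c in labels]
        if matches:
            return matches[-1] if rightmost else matches[0]
    return len(children) - 1  # default: rightmost child


def vp_head_index(children):
    """Head position for a VP rule, following the VP priority list."""
    for labels in ({"Vi", "Vt"}, {"VP"}):
        matches = [i for i, c in enumerate(children) if c in labels]
        if matches:
            return matches[0]
    return 0  # default: leftmost child


def head_index(parent, children):
    if parent == "NP":
        return np_head_index(children)
    if parent == "VP":
        return vp_head_index(children)
    if parent == "S" and "VP" in children:
        return children.index("VP")  # S => NP VP: VP is the head
    return 0  # ASSUMPTION: leftmost child for labels the slides do not cover


def lexicalize(tree):
    """Turn (label, children-or-word) into (label, headword, children-or-word)."""
    label, body = tree
    if isinstance(body, str):  # preterminal: the word is its own headword
        return (label, body, body)
    kids = [lexicalize(k) for k in body]
    h = head_index(label, [k[0] for k in kids])
    return (label, kids[h][1], kids)  # headword comes from the head child


tree = ("S", [
    ("NP", [("DT", "the"), ("NN", "lawyer")]),
    ("VP", [("Vt", "questioned"),
            ("NP", [("DT", "the"), ("NN", "witness")])]),
])
lexicalized = lexicalize(tree)
```

Here `lexicalized[1]` is the headword of the whole sentence, and each nested tuple carries the headword of its own constituent.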

Chomsky Normal Form

A context-free grammar $G = (N, \Sigma, R, S)$ in Chomsky Normal Form is as follows:

• $N$ is a set of non-terminal symbols
• $\Sigma$ is a set of terminal symbols
• $R$ is a set of rules which take one of two forms:
  – $X \to Y_1 Y_2$ for $X \in N$, and $Y_1, Y_2 \in N$
  – $X \to Y$ for $X \in N$, and $Y \in \Sigma$
• $S \in N$ is a distinguished start symbol

We can find the highest scoring parse under a PCFG in this form in $O(n^3 |R|)$ time, where $n$ is the length of the string being parsed and $|R|$ is the number of rules in the grammar (see the dynamic programming algorithm in the previous notes).

A New Form of Grammar

We define the following type of “lexicalized” grammar (we’ll call this a lexicalized Chomsky normal form grammar):

• $N$ is a set of non-terminal symbols
• $\Sigma$ is a set of terminal symbols
• $R$ is a set of rules which take one of three forms:
  – $X(h) \to Y_1(h)\, Y_2(w)$ for $X \in N$, and $Y_1, Y_2 \in N$, and $h, w \in \Sigma$
  – $X(h) \to Y_1(w)\, Y_2(h)$ for $X \in N$, and $Y_1, Y_2 \in N$, and $h, w \in \Sigma$
  – $X(h) \to h$ for $X \in N$, and $h \in \Sigma$
• $S \in N$ is a distinguished start symbol

A New Form of Grammar

• The new form of grammar looks just like a Chomsky normal form CFG, but with potentially $O(|\Sigma|^2 \times |N|^3)$ possible rules.
• Naively, parsing an $n$ word sentence using the dynamic programming algorithm will take $O(n^3 |\Sigma|^2 |N|^3)$ time. But $|\Sigma|$ can be huge!!
• Crucial observation: at most $O(n^2 \times |N|^3)$ rules can be applicable to a given sentence $w_1, w_2, \ldots w_n$ of length $n$. This is because any rules which contain a lexical item that is not one of $w_1 \ldots w_n$ can be safely discarded.
• The result: we can parse in $O(n^5 |N|^3)$ time.

Adding Headtags to Trees

(S(questioned, Vt) (NP(lawyer, NN) (DT the) (NN lawyer)) (VP(questioned, Vt) (Vt questioned) (NP(witness, NN) (DT the) (NN witness))))

• Also propagate part-of-speech tags up the trees (We’ll see soon why this is useful!)
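The crucial observation can be checked on a toy grammar. The sketch below enumerates every binary lexicalized rule for a small vocabulary and then discards those that mention a word outside the sentence. The function names and the pair-of-pairs rule encoding are assumptions for illustration. Multiplying the surviving $O(n^2 |N|^3)$ rules by the $O(n^3)$ factor of the dynamic program gives the $O(n^5 |N|^3)$ bound.

```python
from itertools import product


def lexicalized_binary_rules(nonterminals, vocab):
    """All rules X(h) -> Y1(h) Y2(w) and X(h) -> Y1(w) Y2(h).

    A rule is encoded as ((X, h), (Y1, word1), (Y2, word2)).
    """
    rules = set()
    for x, y1, y2 in product(nonterminals, repeat=3):
        for h, w in product(vocab, repeat=2):
            rules.add(((x, h), (y1, h), (y2, w)))  # head is the left child
            rules.add(((x, h), (y1, w), (y2, h)))  # head is the right child
    return rules


def applicable_rules(rules, sentence):
    """Keep only rules whose lexical items all occur in the sentence."""
    words = set(sentence)
    return {r for r in rules if all(word in words for _, word in r)}


nonterminals = ["NP", "VP"]
vocab = ["the", "man", "saw", "telescope"]
sentence = ["the", "man"]

all_rules = lexicalized_binary_rules(nonterminals, vocab)
usable = applicable_rules(all_rules, sentence)
```

The full rule set grows with the square of the vocabulary size, while `usable` depends only on the number of distinct words in the sentence.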

Non-terminals in Lexicalized rules

An example lexicalized rule:

VP(told,V) ⇒ V(told,V) NP(Clinton,NNP) SBAR(that,COMP)

• Each non-terminal is a triple consisting of:
  1. A label
  2. A word
  3. A tag (i.e., a part-of-speech tag)
• E.g., for VP(told,V): label = VP, word = told, tag = V
  E.g., for V(told,V): label = V, word = told, tag = V

The Parent of a Lexicalized Rule

An example lexicalized rule:

VP(told,V) ⇒ V(told,V) NP(Clinton,NNP) SBAR(that,COMP)

• The parent of the rule is the non-terminal on the left-hand-side (LHS) of the rule
• e.g., VP(told,V) in the above example
• We will also refer to the parent label, parent word, and parent tag. In this case:
  1. Parent label is VP
  2. Parent word is told
  3. Parent tag is V

The Head of a Lexicalized Rule

An example lexicalized rule:

VP(told,V) ⇒ V(told,V) NP(Clinton,NNP) SBAR(that,COMP)

• The head of the rule is a single non-terminal on the right-hand-side (RHS) of the rule
• e.g., V(told,V) is the head in the above example.
• We will also refer to the head label, head word, and head tag. In this case:
  1. Head label is V
  2. Head word is told
  3. Head tag is V
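As a small illustration of the label/word/tag triple, the following sketch reads a rule written in the slides' notation into Python tuples. The `NonTerminal` type and `parse_rule` function are hypothetical names introduced for this example, and the regular expression assumes labels, words and tags contain only word characters.

```python
import re
from typing import List, NamedTuple, Tuple


class NonTerminal(NamedTuple):
    label: str
    word: str
    tag: str


# Matches one non-terminal written as LABEL(word,TAG)
_NT = re.compile(r"(\w+)\(\s*(\w+)\s*,\s*(\w+)\s*\)")


def parse_rule(text: str) -> Tuple[NonTerminal, List[NonTerminal]]:
    """Split 'X(h,t) ⇒ A(..) B(..)' into its parent and its children."""
    lhs, rhs = text.split("⇒")
    match = _NT.fullmatch(lhs.strip())
    if match is None:
        raise ValueError(f"cannot read parent non-terminal: {lhs!r}")
    parent = NonTerminal(*match.groups())
    children = [NonTerminal(*groups) for groups in _NT.findall(rhs)]
    return parent, children


parent, children = parse_rule(
    "VP(told,V) ⇒ V(told,V) NP(Clinton,NNP) SBAR(that,COMP)"
)
```

With this encoding, `parent.label`, `parent.word` and `parent.tag` correspond to the parent label, parent word and parent tag described above.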

• Note: we always have
  – parent word = head word
  – parent tag = head tag

The Left-Modifiers of a Lexicalized Rule

An example lexicalized rule:

VP(told,V) ⇒ V(told,V) NP(Clinton,NNP) SBAR(that,COMP)

• The left-modifiers of the rule are any non-terminals appearing to the left of the head
• In this example there are no left-modifiers
• In general there can be any number (0 or greater) of left-modifiers

The Left-Modifiers of a Lexicalized Rule

Another example lexicalized rule:

S(told,V) ⇒ NP(yesterday,NN) NP(Hillary,NNP) VP(told,V)

• The left-modifiers of the rule are any non-terminals appearing to the left of the head
• In this example there are two left-modifiers:
  – NP(yesterday,NN)
  – NP(Hillary,NNP)

The Right-Modifiers of a Lexicalized Rule

An example lexicalized rule:

VP(told,V) ⇒ V(told,V) NP(Clinton,NNP) SBAR(that,COMP)

• The right-modifiers of the rule are any non-terminals appearing to the right of the head
• In this example there are two right-modifiers:
  – NP(Clinton,NNP)
  – SBAR(that,COMP)
• In general there can be any number (0 or greater) of right-modifiers
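The split of a rule's right-hand side into left-modifiers, head and right-modifiers can be sketched as follows. The `NonTerminal` type and `split_rule` function are illustrative names, and the position of the head is passed in explicitly, which is an assumption of this sketch rather than something the slides prescribe.

```python
from typing import List, NamedTuple, Tuple


class NonTerminal(NamedTuple):
    label: str
    word: str
    tag: str


def split_rule(
    parent: NonTerminal, children: List[NonTerminal], head_index: int
) -> Tuple[List[NonTerminal], NonTerminal, List[NonTerminal]]:
    """Return (left_modifiers, head, right_modifiers) in surface order."""
    head = children[head_index]
    # The parent always shares its word and tag with the head.
    if (parent.word, parent.tag) != (head.word, head.tag):
        raise ValueError("parent word/tag must equal head word/tag")
    return children[:head_index], head, children[head_index + 1:]


# S(told,V) ⇒ NP(yesterday,NN) NP(Hillary,NNP) VP(told,V), head = VP
s_left, s_head, s_right = split_rule(
    NonTerminal("S", "told", "V"),
    [NonTerminal("NP", "yesterday", "NN"),
     NonTerminal("NP", "Hillary", "NNP"),
     NonTerminal("VP", "told", "V")],
    head_index=2,
)

# VP(told,V) ⇒ V(told,V) NP(Clinton,NNP) SBAR(that,COMP), head = V
vp_left, vp_head, vp_right = split_rule(
    NonTerminal("VP", "told", "V"),
    [NonTerminal("V", "told", "V"),
     NonTerminal("NP", "Clinton", "NNP"),
     NonTerminal("SBAR", "that", "COMP")],
    head_index=0,
)
```

Either list may be empty, matching the statement that a rule can have zero or more modifiers on each side.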

The General Form of a Lexicalized Rule

• The general form of a lexicalized rule is as follows:

  $X(h, t) \Rightarrow L_n(lw_n, lt_n) \ldots L_1(lw_1, lt_1) \; H(h, t) \; R_1(rw_1, rt_1) \ldots R_m(rw_m, rt_m)$

• $X(h, t)$ is the parent of the rule
• $H(h, t)$ is the head of the rule
• There are $n$ left-modifiers, $L_i(lw_i, lt_i)$ for $i = 1 \ldots n$
• There are $m$ right-modifiers, $R_i(rw_i, rt_i)$ for $i = 1 \ldots m$
• There can be zero or more left or right modifiers: i.e., $n \geq 0$ and $m \geq 0$
• $X$, $H$, $L_i$ for $i = 1 \ldots n$ and $R_i$ for $i = 1 \ldots m$ are labels
• $h$, $lw_i$ for $i = 1 \ldots n$ and $rw_i$ for $i = 1 \ldots m$ are words
• $t$, $lt_i$ for $i = 1 \ldots n$ and $rt_i$ for $i = 1 \ldots m$ are tags

Headwords and Dependencies

• A new representation: a tree is represented as a set of dependencies, not a set of context-free rules
• A dependency is an 8-tuple:
  (head-word, head-tag, modifier-word, modifier-tag, parent-label, head-label, modifier-label, direction)
• Each rule with $n$ children contributes $(n - 1)$ dependencies. There is one dependency for each left or right modifier.

VP(questioned,Vt) ⇒ Vt(questioned,Vt) NP(lawyer,NN)

⇓

(questioned, Vt, lawyer, NN, VP, Vt, NP, RIGHT)
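The mapping from one lexicalized rule to its dependencies can be sketched as below. The names `NonTerminal` and `rule_dependencies` are hypothetical, and the head position is supplied by the caller. The field order of each tuple follows the 8-tuple definition above.

```python
from typing import List, NamedTuple, Tuple


class NonTerminal(NamedTuple):
    label: str
    word: str
    tag: str


Dependency = Tuple[str, str, str, str, str, str, str, str]


def rule_dependencies(
    parent: NonTerminal, children: List[NonTerminal], head_index: int
) -> List[Dependency]:
    """One dependency per modifier, so a rule with n children yields n - 1."""
    head = children[head_index]
    deps = []
    for i, mod in enumerate(children):
        if i == head_index:
            continue  # the head itself is not a modifier
        direction = "LEFT" if i < head_index else "RIGHT"
        deps.append((head.word, head.tag, mod.word, mod.tag,
                     parent.label, head.label, mod.label, direction))
    return deps


# VP(questioned,Vt) ⇒ Vt(questioned,Vt) NP(lawyer,NN), head = Vt
deps = rule_dependencies(
    NonTerminal("VP", "questioned", "Vt"),
    [NonTerminal("Vt", "questioned", "Vt"), NonTerminal("NP", "lawyer", "NN")],
    head_index=0,
)
```

A rule with a single child, such as a preterminal rewriting to a word, produces an empty list.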

Headwords and Dependencies

An example rule:

VP(told,V) ⇒ V(told,V) NP(Clinton,NNP) SBAR(that,COMP)

This rule contributes two dependencies:

| head-word | head-tag | mod-word | mod-tag | parent-label | head-label | mod-label | direction |
|---|---|---|---|---|---|---|---|
| told | V | Clinton | NNP | VP | V | NP | RIGHT |
| told | V | that | COMP | VP | V | SBAR | RIGHT |

A Special Case: the Top of the Tree

TOP ⇒ S(told,V)

⇓

( , , told, V, TOP, S, , SPECIAL)

A complete example:

(S(told,V)
  (NP(Hillary,NNP) (NNP Hillary))
  (VP(told,V)
    (V(told,V) (V told))
    (NP(Clinton,NNP) (NNP Clinton))
    (SBAR(that,COMP)
      (COMP that)
      (S
        (NP(she,PRP) (PRP she))
        (VP(was,Vt)
          (Vt was)
          (NP(president,NN) (NN president)))))))

( , , told, V, TOP, S, , SPECIAL)
(told, V, Hillary, NNP, S, VP, NP, LEFT)
(told, V, Clinton, NNP, VP, V, NP, RIGHT)
(told, V, that, COMP, VP, V, SBAR, RIGHT)
(that, COMP, was, Vt, SBAR, COMP, S, RIGHT)
(was, Vt, she, PRP, S, VP, NP, LEFT)
(was, Vt, president, NN, VP, Vt, NP, RIGHT)
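Reading the dependencies off a whole lexicalized tree can be sketched as a pre-order walk. The dictionary encoding, the `node` helper and the use of `None` for the blank fields of the TOP entry are assumptions of this example. The embedded S is given the headword and tag (was, Vt), which is inferred from the dependency listed for SBAR, and the tag of president is read from the node NP(president,NN).

```python
def node(label, word, tag, head=None, children=()):
    """A lexicalized tree node; `head` is the index of the head child."""
    return {"label": label, "word": word, "tag": tag,
            "head": head, "children": list(children)}


def tree_dependencies(root):
    # Special case for the top of the tree; blank fields become None.
    deps = [(None, None, root["word"], root["tag"],
             "TOP", root["label"], None, "SPECIAL")]

    def visit(n):
        kids = n["children"]
        if not kids:
            return
        head = kids[n["head"]]
        for i, mod in enumerate(kids):
            if i == n["head"]:
                continue
            direction = "LEFT" if i < n["head"] else "RIGHT"
            deps.append((head["word"], head["tag"], mod["word"], mod["tag"],
                         n["label"], head["label"], mod["label"], direction))
        for kid in kids:
            visit(kid)

    visit(root)
    return deps


tree = node("S", "told", "V", head=1, children=[
    node("NP", "Hillary", "NNP", head=0,
         children=[node("NNP", "Hillary", "NNP")]),
    node("VP", "told", "V", head=0, children=[
        node("V", "told", "V"),
        node("NP", "Clinton", "NNP", head=0,
             children=[node("NNP", "Clinton", "NNP")]),
        node("SBAR", "that", "COMP", head=0, children=[
            node("COMP", "that", "COMP"),
            node("S", "was", "Vt", head=1, children=[  # (was, Vt) is inferred
                node("NP", "she", "PRP", head=0,
                     children=[node("PRP", "she", "PRP")]),
                node("VP", "was", "Vt", head=0, children=[
                    node("Vt", "was", "Vt"),
                    node("NP", "president", "NN", head=0,
                         children=[node("NN", "president", "NN")]),
                ]),
            ]),
        ]),
    ]),
])

all_deps = tree_dependencies(tree)
```

Rules with a single child contribute nothing, so only the four branching rules and the TOP entry produce tuples.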
