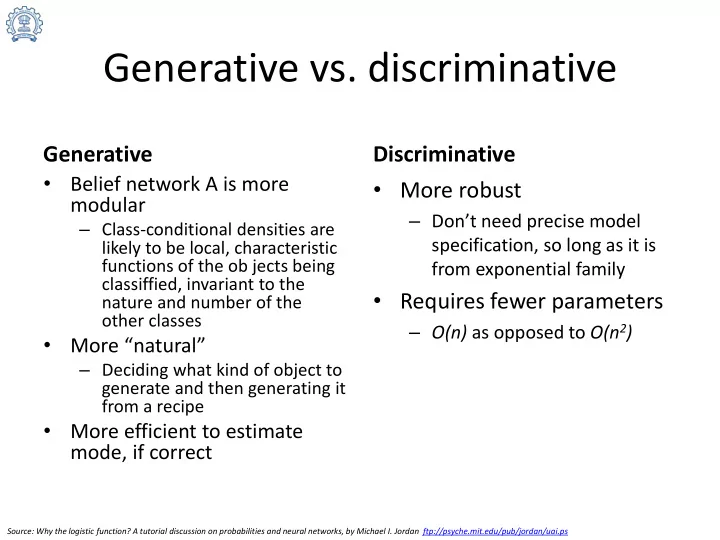

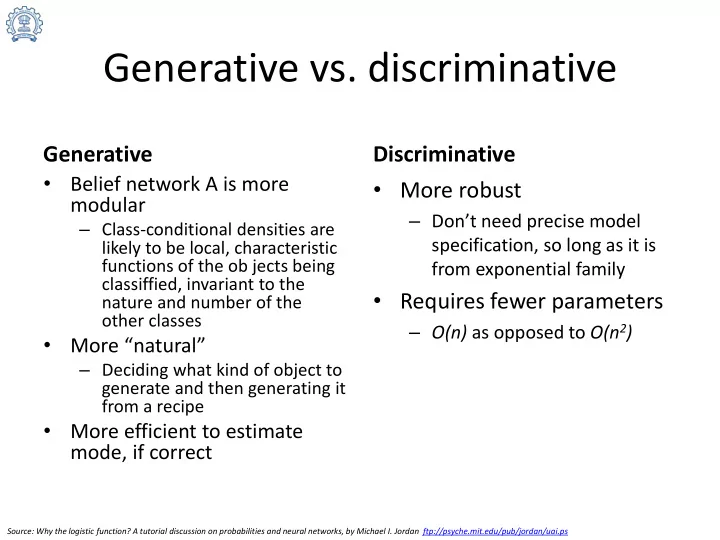

Generative vs. discriminative

Generative:
• Belief network A is more modular
  – Class-conditional densities are likely to be local, characteristic functions of the objects being classified, invariant to the nature and number of the other classes
• More "natural"
  – Deciding what kind of object to generate and then generating it from a recipe
• More efficient to estimate the model, if it is correct

Discriminative:
• More robust
  – Don't need a precise model specification, so long as it is from the exponential family
• Requires fewer parameters
  – $O(n)$ as opposed to $O(n^2)$

Source: Why the logistic function? A tutorial discussion on probabilities and neural networks, by Michael I. Jordan ftp://psyche.mit.edu/pub/jordan/uai.ps
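One standard way to see where the $O(n)$ vs. $O(n^2)$ counts come from is sketched below. It assumes two classes in 2-D whose Gaussian class-conditional densities share one full covariance matrix (an illustrative assumption). Under that assumption Bayes' rule yields exactly a logistic posterior, so a discriminative model only needs the weights and bias of the logistic function, while the generative model needs both means, the covariance and the prior. All function names are hypothetical.

```python
import math

def _inverse_2x2(m):
    # Returns (inverse, determinant) of a 2x2 matrix [[a, b], [c, d]]
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]], det

def _matvec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

def _dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def gaussian_logpdf(x, mu, cov):
    # log N(x; mu, cov) for a 2-D Gaussian with full covariance
    inv, det = _inverse_2x2(cov)
    dx = [x[0] - mu[0], x[1] - mu[1]]
    return -0.5 * _dot(dx, _matvec(inv, dx)) - 0.5 * math.log((2 * math.pi) ** 2 * det)

def generative_posterior(x, mu0, mu1, cov, prior1):
    # Bayes' rule with class-conditional Gaussians that share one covariance
    log1 = gaussian_logpdf(x, mu1, cov) + math.log(prior1)
    log0 = gaussian_logpdf(x, mu0, cov) + math.log(1.0 - prior1)
    return 1.0 / (1.0 + math.exp(log0 - log1))

def logistic_weights(mu0, mu1, cov, prior1):
    # The shared quadratic term x' inv(cov) x cancels, so the log-odds is linear in x
    inv, _ = _inverse_2x2(cov)
    w = _matvec(inv, [mu1[0] - mu0[0], mu1[1] - mu0[1]])
    b = (-0.5 * _dot(mu1, _matvec(inv, mu1)) + 0.5 * _dot(mu0, _matvec(inv, mu0))
         + math.log(prior1 / (1.0 - prior1)))
    return w, b

def discriminative_posterior(x, w, b):
    # Logistic function of a linear score: n weights plus one bias
    return 1.0 / (1.0 + math.exp(-(_dot(w, x) + b)))

def parameter_counts(n):
    # Generative: two means, one symmetric covariance, one prior; discriminative: w and b
    generative = 2 * n + n * (n + 1) // 2 + 1
    discriminative = n + 1
    return generative, discriminative
```

For n = 100 inputs, `parameter_counts(100)` returns `(5251, 101)`: the symmetric covariance is what makes the generative count grow quadratically.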

Losses for ranking and metric learning
• Margin loss
• Cosine similarity
• Ranking
  – Point-wise
  – Pair-wise: $\phi(z) = (1 - z)_+$, $e^{-z}$, or $\log(1 + e^{-z})$
  – List-wise
Source: "Ranking Measures and Loss Functions in Learning to Rank", Chen et al., NIPS 2009
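A minimal sketch of the pair-wise formulation, assuming the loss sums $\phi(s_i - s_j)$ over every pair in which item i has a higher relevance label than item j, where the scores s come from some ranking function that is not shown. The helper names are illustrative only.

```python
import math

def hinge(z):
    # (1 - z)_+
    return max(0.0, 1.0 - z)

def exponential(z):
    # e^{-z}
    return math.exp(-z)

def logistic(z):
    # log(1 + e^{-z}); log1p is more accurate when e^{-z} is small
    return math.log1p(math.exp(-z))

def pairwise_loss(scores, labels, phi):
    # Sum phi(s_i - s_j) over every ordered pair where item i is more relevant than item j
    total = 0.0
    for i in range(len(scores)):
        for j in range(len(scores)):
            if labels[i] > labels[j]:
                total += phi(scores[i] - scores[j])
    return total
```

All three surrogates penalize a pair more as the score difference $s_i - s_j$ becomes more negative, i.e. as the ranking of that pair gets worse; the hinge is the only one that is exactly zero once the margin of 1 is met.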

Dropout: Drop a unit out to prevent co-adaptation Source: “Dropout: A Simple Way to Prevent Neural Networks from Overfitting”, by Srivastava, Hinton, et al. in JMLR 2014.

Why dropout?
• Make other features unreliable to break co-adaptation
• Equivalent to adding noise
• Train many thinned (dropped-out) architectures within one architecture ($2^n$ possible thinned networks for n droppable units)
• Average the architectures at run time
  – Is this a good method for averaging?
  – How about Bayesian averaging?
  – In practice, this works well too
Source: “Dropout: A Simple Way to Prevent Neural Networks from Overfitting”, by Srivastava, Hinton, et al. in JMLR 2014.
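For a single linear unit, the $2^n$ thinned networks can be enumerated and averaged exactly. The sketch below (a toy linear unit with hypothetical helper names, not the networks from the paper) checks that this probability-weighted average equals one pass with every weight multiplied by the retention probability p. With nonlinear units, the same weight-scaling rule is only an approximation to the ensemble average.

```python
import itertools

def thinned_output(weights, inputs, mask):
    # Linear unit with some inputs dropped (mask entry 0 = dropped, 1 = kept)
    return sum(w * m * x for w, m, x in zip(weights, mask, inputs))

def exact_ensemble_average(weights, inputs, p):
    # Enumerate all 2^n masks and weight each thinned network by its probability
    total = 0.0
    for mask in itertools.product((0, 1), repeat=len(weights)):
        prob = 1.0
        for m in mask:
            prob *= p if m else (1.0 - p)
        total += prob * thinned_output(weights, inputs, mask)
    return total

def weight_scaled_output(weights, inputs, p):
    # Single deterministic pass with every weight multiplied by p
    return sum(p * w * x for w, x in zip(weights, inputs))
```

The enumeration costs $2^n$ forward passes, which is why it is only feasible for toy sizes; the weight-scaled pass costs one.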

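Model averaging
• The average (expected) output during training should equal the output at test time
  – The paper's default: train with weights w and use pw at test time, where p is the probability of retaining a unit
• Alternatively,
  – w/p at training time
  – w at testing time
Source: “Dropout: A Simple Way to Prevent Neural Networks from Overfitting”, by Srivastava, Hinton, et al. in JMLR 2014.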
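A minimal sketch of both scaling conventions on a vector of activations, assuming p is the probability of keeping a unit. Scaling a unit's activation is equivalent to scaling its outgoing weights, so the "w/p at training time" variant (often called inverted dropout) is written here as dividing the kept activations by p. The function names are hypothetical, and the Monte Carlo helper only exists to check that the training-time average matches the test-time output.

```python
import random

def dropout_train(activations, p, rng, inverted=True):
    # Keep each unit with probability p; inverted dropout divides kept values by p
    scale = 1.0 / p if inverted else 1.0
    return [a * scale if rng.random() < p else 0.0 for a in activations]

def dropout_test(activations, p, inverted=True):
    # Inverted dropout leaves test time unchanged; the default rule multiplies by p
    scale = 1.0 if inverted else p
    return [a * scale for a in activations]

def mean_train_output(activations, p, inverted, samples=100_000, seed=0):
    # Monte Carlo estimate of the expected training-time output
    rng = random.Random(seed)
    totals = [0.0] * len(activations)
    for _ in range(samples):
        for i, a in enumerate(dropout_train(activations, p, rng, inverted)):
            totals[i] += a
    return [t / samples for t in totals]
```

Both conventions satisfy the same constraint; inverted dropout is convenient because the test-time network needs no modification at all.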

Difference between features learned without and with dropout (DO) Source: “Dropout: A Simple Way to Prevent Neural Networks from Overfitting”, by Srivastava, Hinton, et al. in JMLR 2014.

Indeed, DO leads to sparse activation Source: “Dropout: A Simple Way to Prevent Neural Networks from Overfitting”, by Srivastava, Hinton, et al. in JMLR 2014.

There is a sweet spot with DO, even if you increase the number of neurons Source: “Dropout: A Simple Way to Prevent Neural Networks from Overfitting”, by Srivastava, Hinton, et al. in JMLR 2014.

Advanced Machine Learning: Gradient Descent for Non-Convex Functions
Amit Sethi, Electrical Engineering, IIT Bombay

Learning outcomes for the lecture
• Characterize non-convex loss surfaces with the Hessian
• List issues with non-convex surfaces
• Explain how certain optimization techniques help solve these issues

Contents
• Characterizing non-convex loss surfaces
• Issues with gradient descent
• Issues with Newton’s method
• Stochastic gradient descent to the rescue
• Momentum and its variants
• Saddle-free Newton

Why do we not get stuck in bad local minima?
• Local minima are close to global minima in terms of error
• In a high-dimensional weight space, critical points in the higher-error portions of the surface are much more likely to be saddle points than local minima
• SGD (and other techniques) allow you to escape the saddle points
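A toy illustration of the last point, under deliberately simplified assumptions: the loss is the 2-D saddle $f(x, y) = x^2 - y^2$, and SGD's minibatch noise is modeled as Gaussian noise added to the exact gradient (our assumption; real minibatch noise is not Gaussian in general). Started exactly on the line $y = 0$, plain gradient descent converges to the saddle at the origin, while the noisy version is pushed off along $y$. This toy loss has no minimum, so "escaping" here simply means the loss keeps dropping. The function names are hypothetical.

```python
import random

def loss(x, y):
    # Saddle at the origin: curves up along x, down along y
    return x * x - y * y

def grad(x, y):
    return 2.0 * x, -2.0 * y

def descend(x, y, lr=0.1, steps=100, noise_std=0.0, seed=0):
    # Gradient descent; noise_std > 0 adds Gaussian noise as a stand-in for minibatch noise
    rng = random.Random(seed)
    for _ in range(steps):
        gx, gy = grad(x, y)
        if noise_std > 0.0:
            gx += rng.gauss(0.0, noise_std)
            gy += rng.gauss(0.0, noise_std)
        x, y = x - lr * gx, y - lr * gy
    return x, y
```

Without noise the y-gradient is exactly zero on $y = 0$, so the iterate never leaves that line; any small perturbation along $y$ is amplified by a factor of $1 + 2\,\text{lr}$ per step because that direction has negative curvature.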

Error surfaces and saddle points http://math.etsu.edu/multicalc/prealpha/Chap2/Chap2-8/10-6-53.gif http://pundit.pratt.duke.edu/piki/images/thumb/0/0a/SurfExp04.png/400px-SurfExp04.png

Eigenvalues of the Hessian at critical points (figure panels: local minima, long furrow, plateau, saddle point). Image source: http://i.stack.imgur.com/NsI2J.png
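A minimal sketch of classifying a critical point of a 2-D function from the signs of its Hessian eigenvalues. Mapping the two degenerate cases to "long furrow" (one positive, one near-zero eigenvalue) and "plateau" (all near zero) is our reading of the figure's panel labels, an assumption; the tolerance `tol` and the function names are likewise hypothetical.

```python
import math

def eigenvalues_2x2(h):
    # Closed-form eigenvalues of a symmetric 2x2 matrix [[a, b], [b, d]]
    (a, b), (_, d) = h
    mean = (a + d) / 2.0
    radius = math.sqrt(((a - d) / 2.0) ** 2 + b * b)
    return mean - radius, mean + radius

def classify_critical_point(h, tol=1e-8):
    # Count clearly positive and clearly negative curvature directions
    eigs = eigenvalues_2x2(h)
    pos = sum(1 for e in eigs if e > tol)
    neg = sum(1 for e in eigs if e < -tol)
    if pos == 2:
        return "local minimum"
    if neg == 2:
        return "local maximum"
    if pos and neg:
        return "saddle point"
    if pos == 1:
        # Curved upward in one direction, flat in the other
        return "long furrow"
    if pos == 0 and neg == 0:
        # Flat in every direction
        return "plateau"
    # One negative and one near-zero eigenvalue: not one of the figure's four cases
    return "degenerate"
```

For example, the saddle $x^2 - y^2$ has Hessian `[[2, 0], [0, -2]]`, whose eigenvalues have mixed signs. In a real network the Hessian is far too large to diagonalize like this, but the same sign-counting logic is what "characterizing the loss surface with the Hessian" refers to.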

A realistic picture (figure labels: global minima, local minima, local maxima, saddle point). Image source: https://www.cs.umd.edu/~tomg/projects/landscapes/
