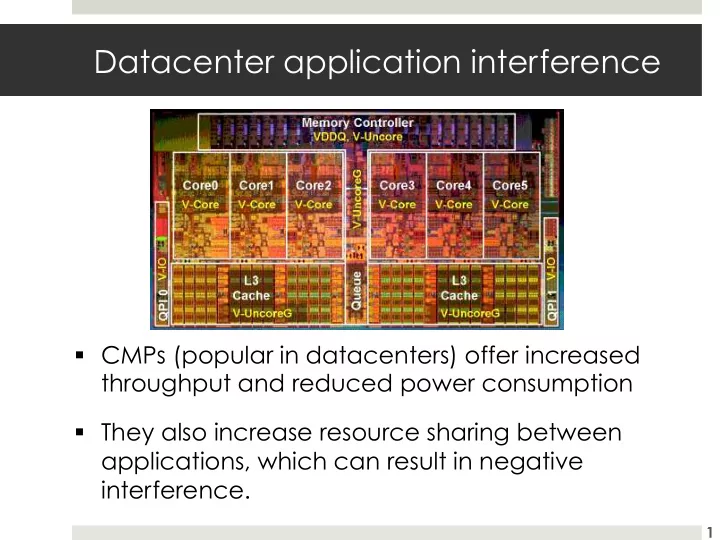

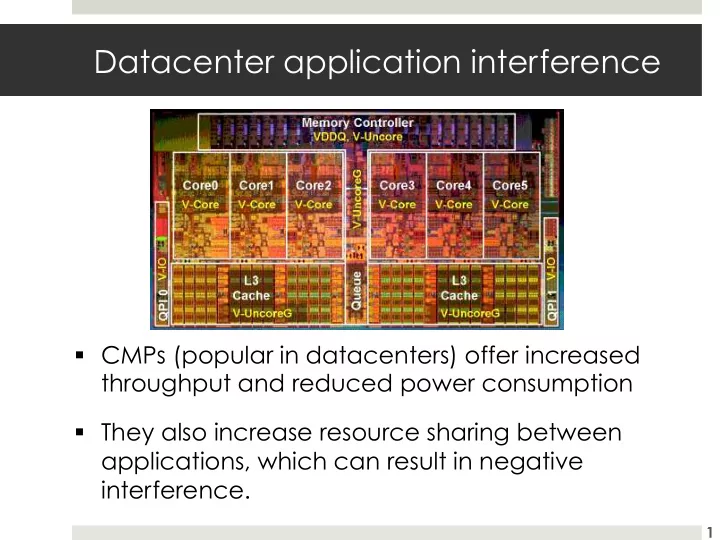

Datacenter application interference: Chip multiprocessors (CMPs), popular in datacenters, offer increased throughput and reduced power consumption. They also increase resource sharing between applications, which can result in negative interference. 1

Resource contention is well studied … at least on single machines. 3 main methods: (1) Gladiator-style match-ups; (2) static analysis to predict application resource usage; (3) measuring benchmark resource usage and applying it to live applications. 2

A new methodology for understanding datacenter interference is needed, one that can handle the complexities of a datacenter: (10s of) thousands of applications; real user inputs; production hardware; financially feasible; low overhead. Hardware counter measurements of live applications. 3

Our contributions: (1) Identify complexities in datacenters. (2) New measurement methodology. (3) First large-scale study of measured interference on live datacenter applications. 4

Complexities of understanding application interference in a datacenter 5

Large chips and high core utilizations: Profiling 1000 12-core, 24-hyperthread Google servers running production workloads revealed that the average machine had more than 14 of its 24 HW threads in use. 6

Heterogeneous application mixes: Often applications have more than one co-runner on a machine. Observed max of 19 unique co-runner threads (out of 24 HW threads). [Chart legend: 0-1 co-runners, 2-3 co-runners, 4+ co-runners.] 7

Application complexities: fuzzy definitions; varying and sometimes unpredictable inputs; unknown optimal performance. 8

Hardware & Economic Complexities: varying micro-arch platforms; necessity for low overhead = limited measurement capabilities; corporate policies. 9

Measurement methodology 10

Measurement Methodology The goal: A generic methodology to collect application interference data on live production datacenter servers 11

Measurement Methodology [Figure: App. A and App. B shown along a time axis.] 12

Measurement Methodology 1. Use sample-based monitoring to collect per-machine, per-core event (HW counter) sample data. 13

Measurement Methodology [Figure: timelines of App. A and App. B divided into samples of 2 M instrs each; App. A samples are numbered 1-6 and App. B samples are numbered 1-4.] 14

Measurement Methodology 2. Identify sample-sized co-runner relationships … 15

Measurement Methodology Samples A:1-A:6 are co-runners with App. B. Samples B:1-B:4 are co-runners with App. A. [Figure: App. A and App. B timelines.] 16

Measurement Methodology Say that a new App. C starts running on CPU 1 … B:4 no longer has a co-runner. [Figure: App. A, App. C, and App. B timelines.] 17
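Step 2 can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the `Sample` record, its field names, the CPU numbers, and the time units are all hypothetical. It also assumes one reading of the App. C example, namely that an application counts as a co-runner of a sample only if it occupied the other CPU for that sample's entire duration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One per-core sample (all field names are hypothetical)."""
    app: str      # application the sample belongs to
    cpu: int      # logical CPU the sample was taken on
    start: float  # sample start time, arbitrary units
    end: float    # sample end time, arbitrary units


def co_runner_apps(sample, all_samples, eps=1e-9):
    """Return apps on other CPUs that ran for the whole duration of `sample`."""
    # Collect the time spans each (cpu, app) pair occupied on other CPUs.
    spans_by_app = {}
    for other in all_samples:
        if other.cpu != sample.cpu:
            key = (other.cpu, other.app)
            spans_by_app.setdefault(key, []).append((other.start, other.end))

    found = set()
    for (_cpu, app), spans in spans_by_app.items():
        covered_to = sample.start
        for start, end in sorted(spans):
            if start > covered_to + eps:
                break  # gap: the app was not running at this point
            covered_to = max(covered_to, end)
        if covered_to >= sample.end - eps:
            found.add(app)
    return sorted(found)


# Hypothetical timeline: App. A has six samples, App. B has four.
a_samples = [Sample("A", 0, t, t + 2) for t in range(0, 12, 2)]
b_samples = [Sample("B", 1, t, t + 3) for t in range(0, 12, 3)]

# Variant where App. C replaces App. A for the last part of the timeline.
with_c = a_samples[:5] + [Sample("C", 0, 10, 12)] + b_samples
```

With these made-up timelines, every B sample has App. A as a co-runner in the first scenario, while in the `with_c` scenario the last B sample has none, because neither App. A nor App. C covers it completely.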

Measurement Methodology 3. Filter relationships by arch.-independent interference classes … 18

Measurement Methodology Co-runners can be on opposite sockets. 19

Measurement Methodology Share only I/O 20
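A minimal sketch of the classification behind step 3, using the three classes that appear in the Google results (shared core, shared socket, opposite socket). The function names and the CPU numbering scheme are assumptions made for illustration; real machines often enumerate logical CPUs differently, so a real topology map would have to come from the operating system.

```python
def make_topology(sockets=2, cores_per_socket=6, threads_per_core=2):
    """Map logical CPU id -> (socket, core) for a hypothetical numbering.

    Defaults mirror a 2-socket machine with 12 cores and 24 hyperthreads.
    """
    topology = {}
    cpu = 0
    for socket in range(sockets):
        for core in range(cores_per_socket):
            for _ in range(threads_per_core):
                topology[cpu] = (socket, core)
                cpu += 1
    return topology


def interference_class(cpu_a, cpu_b, topology):
    """Classify how two distinct logical CPUs relate to each other."""
    if cpu_a == cpu_b:
        raise ValueError("co-runners must be on different logical CPUs")
    socket_a, core_a = topology[cpu_a]
    socket_b, core_b = topology[cpu_b]
    if socket_a != socket_b:
        return "opposite_socket"
    if core_a == core_b:
        return "shared_core"
    return "shared_socket"


topology = make_topology()
```

Keeping the classes in terms of core and socket, rather than specific caches or buses, is what lets the same filter apply across micro-architectures.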

Measurement Methodology 4. Aggregate equivalent co-schedules. 21

Measurement Methodology For example: • Aggregate all samples of App. A that have App. B as a shared-core co-runner. • Aggregate all samples of App. A that have App. B as a shared-core co-runner and App. C as a shared-socket co-runner. 22
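The two aggregation examples above can be sketched as a grouping step. The input layout, a tuple of base application, list of (interference class, co-runner) pairs, and IPC, is an assumption chosen for illustration, and the IPC values are made up.

```python
from collections import defaultdict


def aggregate_co_schedules(samples):
    """Group IPC values by (base app, set of (class, co-runner app) pairs).

    Two samples are treated as equivalent when the base application and the
    full set of classified co-runners match, regardless of ordering.
    """
    groups = defaultdict(list)
    for app, co_runners, ipc in samples:
        key = (app, frozenset(co_runners))
        groups[key].append(ipc)
    return dict(groups)


# Made-up samples of App. A under two different co-schedules.
samples = [
    ("A", [("shared_core", "B")], 1.0),
    ("A", [("shared_core", "B")], 1.2),
    ("A", [("shared_socket", "C"), ("shared_core", "B")], 0.9),
    ("A", [("shared_core", "B"), ("shared_socket", "C")], 0.8),
]
groups = aggregate_co_schedules(samples)
```

Using a `frozenset` as part of the key makes the co-schedule order-independent, so the last two samples land in the same group.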

Measurement Methodology 5. Finally, calculate statistical indicators (means, medians) to get a midpoint performance for app. interference comparisons. 23

Measurement Methodology [Figure: App. A and App. B timelines annotated with Avg. IPC = 2.0 and Avg. IPC = 1.5.] 24
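Step 5 amounts to summarizing each aggregated co-schedule. The sketch below uses Python's standard `statistics` module; the group names and IPC values are hypothetical, chosen only so that the means come out to round numbers.

```python
import statistics


def summarize(groups):
    """Compute sample count, mean IPC, and median IPC for each co-schedule."""
    return {
        key: {
            "n": len(ipcs),
            "mean": statistics.mean(ipcs),
            "median": statistics.median(ipcs),
        }
        for key, ipcs in groups.items()
        if ipcs  # skip empty groups; mean() raises on empty input
    }


# Hypothetical IPC samples for two co-schedules.
ipc_groups = {
    "schedule_1": [1.5, 2.0, 2.5],
    "schedule_2": [1.0, 1.5, 2.0, 1.5],
}
summary = summarize(ipc_groups)
```

Reporting the median alongside the mean is a cheap guard against a few outlier samples dominating a comparison between co-schedules.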

Applying the measurement methodology at Google. 25

Applying the Methodology @ Google Method: 1. Collect samples. Experiment details: Event: Instrs, IPC; Sampling period: 2.5 Million; Number of machines*: 1000. * All had Intel Westmere chips (24 hyperthreads, 12 cores), matching clock speed, RAM, O/S. 26

Applying the Methodology @ Google Method: 2. ID sample-size relationships. 3. Filter by interference classes. Collection results: Unique binary apps: 1102. Co-runner relationships (top 8 apps): avg. shared core rel'ns 1M (min 2K); avg. shared socket 9.5M (min 12K); avg. opposite socket 11M (min 14K). 27

Applying the Methodology @ Google Method: 4. Aggregate equiv. schedules 5. Calculate statistical indicators 28

Analyze Interference: streetview's IPC changes with top co-runners. Overall median IPC across 1102 applications. 29

Beyond noisy interferers (shared core) [Figure: grid of base applications against co-running applications; legend: less or positive interference, noisy data, negative interference.] 30

Beyond noisy interferers (shared core) [Figure: grid of base applications against co-running applications; legend: less or positive interference, noisy data, negative interference.] * Recall minimum pair has 2K samples; medians across full grid of 1102 apps. 31

Performance Strategies: Restrict negative beyond-noisy interferers (or encourage positive interferers as co-runners). Isolate sensitive or antagonistic applications. 32

Takeaways: 1. New datacenter application interference studies can use our identified complexities as a checklist. 2. Our measurement methodology (verified at Google in the first large-scale measurements of live datacenter interference) is generally applicable and shows promising initial performance opportunities. 33

Questions? melanie@cs.columbia.edu http://arcade.cs.columbia.edu/ 34
