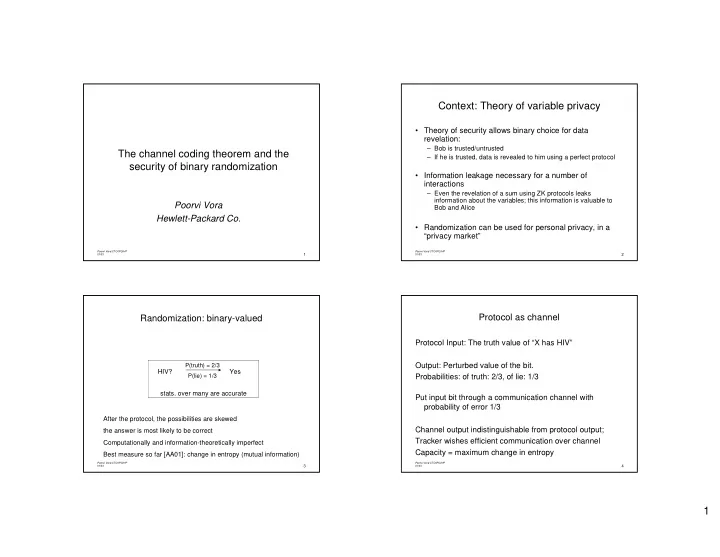

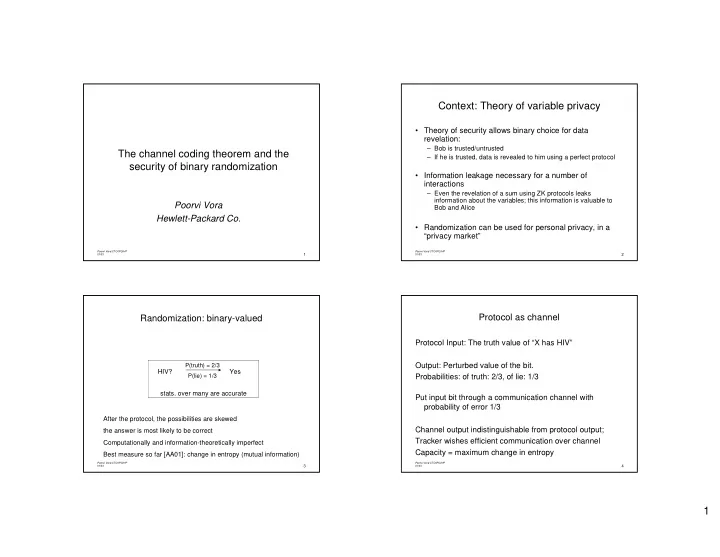

The channel coding theorem and the security of binary randomization
Poorvi Vora, Hewlett-Packard Co.
Poorvi Vora/CTO/IPG/HP, 01/03

Context: Theory of variable privacy
• The theory of security allows only a binary choice for data revelation:
  – Bob is either trusted or untrusted
  – If he is trusted, data is revealed to him using a perfect protocol
• Information leakage is necessary for a number of interactions
  – Even the revelation of a sum using ZK protocols leaks information about the variables; this information is valuable to Bob and Alice
• Randomization can be used for personal privacy, in a "privacy market"

Randomization: binary-valued protocol
• Input: the truth value of "X has HIV"
• Output: a perturbed value of the bit
  [Figure: "HIV? Yes" is reported truthfully with P(truth) = 2/3 and falsely with P(lie) = 1/3]
• Probabilities: of truth, 2/3; of lie, 1/3; statistics over many respondents are accurate
• After the protocol, the possibilities are skewed: the answer is most likely to be correct
• The protocol is computationally and information-theoretically imperfect
• Best measure so far [AA01]: change in entropy (mutual information)

Protocol as channel
• Put the input bit through a communication channel with probability of error 1/3
• The channel output is indistinguishable from the protocol output
• The tracker wishes to communicate efficiently over the channel
• Capacity = maximum change in entropy
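A minimal Python sketch of the binary randomization protocol, assuming each respondent independently reports the true bit with probability 2/3 and its complement with probability 1/3. The helper names (`randomize`, `estimate_fraction`, `mutual_information`) are hypothetical illustrations, not part of the original work. It shows why statistics over many answers stay accurate (the observed fraction can be de-biased) and computes the change in entropy $I(X;Y)$ for a given prior; maximizing it over priors gives the channel capacity.

```python
import math
import random

P_TRUTH = 2 / 3  # probability the protocol reports the true bit (from the slides)


def randomize(bit: int, rng: random.Random, p_truth: float = P_TRUTH) -> int:
    """Binary randomization: report the true bit with prob. p_truth, else flip it."""
    return bit if rng.random() < p_truth else 1 - bit


def estimate_fraction(reports: list[int], p_truth: float = P_TRUTH) -> float:
    """Unbiased estimate of the true fraction of 1s from randomized reports.

    E[report] = (1 - p_truth) + pi * (2 * p_truth - 1), solved for pi.
    """
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth)) / (2 * p_truth - 1)


def h2(x: float) -> float:
    """Binary entropy in bits; 0 at the endpoints."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log2(x) - (1 - x) * math.log2(1 - x)


def mutual_information(prior: float, p_truth: float = P_TRUTH) -> float:
    """Change in entropy I(X;Y) = H(Y) - H(Y|X) when P(X = 1) = prior."""
    p_y1 = prior * p_truth + (1 - prior) * (1 - p_truth)
    return h2(p_y1) - h2(1 - p_truth)


if __name__ == "__main__":
    rng = random.Random(0)
    true_bits = [1 if rng.random() < 0.1 else 0 for _ in range(100_000)]
    reports = [randomize(b, rng) for b in true_bits]
    print("true fraction:", sum(true_bits) / len(true_bits))
    print("estimated    :", round(estimate_fraction(reports), 3))
    # Maximizing the change in entropy over a grid of priors approximates capacity.
    best = max(mutual_information(q / 1000) for q in range(1001))
    print("max change in entropy (bits):", round(best, 4))
```

Because this channel is symmetric, the maximum over priors is reached at a prior of 1/2, where the change in entropy equals $1 - h(1/3)$, about 0.0817 bits.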

Attacks on randomization: repeating the question
• An attack: asking the same question many times
• Can be thwarted by
  – never answering the same question twice, or
  – always answering it the same way

Error
• Known that:
  – the tracker can reduce the estimation error indefinitely by increasing the number of repeated queries indefinitely: $n \to \infty \Rightarrow \varepsilon_n \to 0$
  – this is the best he can do with repeated queries
  – cost per data point = $n/1 \to \infty$
• Query repetition:
  – corresponds to an error-correcting code word: a a a a a a a
  – the probability of error decreases monotonically with $n$ for $n$-symbol code words
  – rate of code = $1/n$

Is the following an attack?
• Requested Bit 1: "location = North"
• Requested Bit 2: "virus X test = positive"
• Requested Bit 3: "gender = male" AND "multiple sclerosis = present"
If $(\text{location} = \text{North}) \oplus (\text{virus X test} = \text{positive}) \Leftrightarrow (\text{gender} = \text{male}) \wedge (\text{multiple sclerosis} = \text{present})$,
then $A_3 = A_1 \oplus A_2$: a check-sum bit.
• $M$ = number of possible strings of interest; $n$ = number of queries
• Here $M = 4$ (since $A_3$ is fixed by $A_1$ and $A_2$) and $n = 3$, so rate = $(\log_2 M)/n = 2/3$, i.e., $k/n$
• The tracker does not know the bits, but forces a pattern among them through the queries: a deterministically-related query sequence (DRQS)

Theorem: Channel codes are DRQS attacks, and vice versa
• A code is
  – a (binary) function from $M$ message words to $n$ code bits
  – an estimation function from $n$ bits to one of $M$ message words
• The only difference between codes and DRQS attacks is that the coding entity in the communication channel knows the bits
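The repetition attack and the check-sum example can be checked numerically. This is a hedged Python sketch, assuming the tracker decodes repeated answers by majority vote (a decoding rule the slides do not specify) and that each answer lies independently with probability 1/3. It computes the exact error of the $n$-fold repetition code word, showing the error falling as $n$ grows while the rate $1/n$ falls with it, and encodes the check-sum DRQS to confirm its rate $\log_2 4 / 3 = 2/3$. All function names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import comb, log2

# Per-answer probability of a lie, taken from the 2/3 vs 1/3 protocol.
P_LIE = Fraction(1, 3)


def repetition_error(n: int, p: Fraction = P_LIE) -> Fraction:
    """Exact probability that a majority vote over n repeated answers is wrong.

    Restricted to odd n so that the vote can never tie.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd integer")
    # The vote is wrong exactly when a strict majority of the n answers are lies.
    return sum(
        comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(n // 2 + 1, n + 1)
    )


# Check-sum DRQS: two target bits plus a third query whose true answer
# is A3 = A1 XOR A2.
CODEWORDS = [(a1, a2, a1 ^ a2) for a1, a2 in product((0, 1), repeat=2)]


def code_rate(codewords: list[tuple[int, ...]]) -> float:
    """rate = log2(M) / n for M codewords, each n bits (queries) long."""
    return log2(len(codewords)) / len(codewords[0])


def checksum_consistent(answers: tuple[int, int, int]) -> bool:
    """True if the three answers satisfy A3 = A1 XOR A2.

    Any odd number of lies breaks the check, so a single lie is always
    detected (though not located); two lies go unnoticed.
    """
    a1, a2, a3 = answers
    return a3 == a1 ^ a2


if __name__ == "__main__":
    for n in (1, 3, 5, 7, 9):
        err = repetition_error(n)
        print(f"repetition n={n}: rate=1/{n}, P(error)={float(err):.4f}")
    print(f"check-sum DRQS: M={len(CODEWORDS)}, n=3, rate={code_rate(CODEWORDS):.4f}")
```

Exact fractions are used so that the monotone decrease of the repetition error is not blurred by floating-point rounding.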

What kind of information transfer do DRQS attacks provide?
• Recall: the repetition attack sacrificed rate ($1/n$) for accuracy
• Does looking at more than one target bit at a time help the tracker?
• Can he maintain the rate of a DRQS attack while decreasing the estimation error?
  – i.e., can he maintain $k/n$ while $\varepsilon_n \to 0$?

Shannon (1948) Channel Coding ("second") Theorem
• Existence result; tight upper bound on transmission efficiency (really only the weak law of large numbers)
• Codes exist for reliable transmission at all rates below capacity
• A channel cannot transmit reliably at rates above capacity
• Reliable transmission is defined as decreasing the error arbitrarily while maintaining the rate

Existence of reliable DRQS attacks
• Shannon (1948): codes exist for reliable transmission at all rates below capacity
• Forney (1966): existence of polynomial-time encodable and decodable Shannon codes
• Spielman (1995): construction of linear-time encodable and decodable codes approaching Shannon codes
⇒ Corollary: construction methods for linear-time DRQS attacks with $k/n$ approaching $C$ while $\varepsilon_n \to 0$

Types of attacks
• PRQS (probabilistically-related query sequence) attacks: queries are probabilistically related to the required bits. Most general
• DRQS (deterministically-related query sequence) attacks: queries are deterministically related to the required bits. Among the most efficient
• Reliable attacks: maintain rate while increasing accuracy
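As a worked instance of Shannon's theorem for this protocol, assume the 2/3 vs 1/3 randomization is modeled as a binary symmetric channel with crossover probability $p = 1/3$; the standard capacity formula for that channel, $C = 1 - h(p)$, is an assumption brought in here and is not stated in the slides.

```latex
\begin{aligned}
h(p) &= -p\log_2 p - (1-p)\log_2(1-p) \\
h\!\left(\tfrac{1}{3}\right) &= \tfrac{1}{3}\log_2 3 + \tfrac{2}{3}\log_2\tfrac{3}{2} = \log_2 3 - \tfrac{2}{3} \approx 0.9183 \\
C &= 1 - h\!\left(\tfrac{1}{3}\right) = \tfrac{5}{3} - \log_2 3 \approx 0.0817 \text{ bits per query}
\end{aligned}
```

Under this model, reliable DRQS attacks exist at every rate $k/n < C \approx 0.0817$, that is, at any fixed number of queries per target bit above $1/C \approx 12.24$, and no reliable attack runs at a rate above $C$.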

Theorem: The rate of a reliable PRQS attack is tightly bounded above by the protocol capacity
• A channel cannot transmit reliably at rates above capacity
⇒ Corollary: The capacity of a protocol is the (tight) upper bound on the rate of a reliable DRQS attack, i.e. $\varepsilon_n \to 0 \Rightarrow \lim k/n \le C$
⇒ While the protocol is not perfect, there is a cost to exploiting its non-perfectness

Theorem: Lower bound on total queries for arbitrarily small error
• If the requested bits are all independent, the number of queries is minimized when the rate is maximal:
  – minimum no. of queries on average = target entropy / maximum rate = target entropy / channel capacity, so $n \ge k/C - \delta$
• The bound also holds for $\varepsilon_n \to 0$; for adaptive, non-deterministic attacks; and for many other protocols
• Shown using the source-channel coding theorem, which enables entropy to be addressed separately from the query pattern

We have shown that
• The tracker can do better than repetition by
  – increasing the number of points combined in a single query,
  – i.e. there exist attacks for which $n \to \infty \Rightarrow \varepsilon_n \to 0$ without incurring the repetition cost $\varepsilon_n \to 0 \Rightarrow n/k \to \infty$
• There is a tight lower bound on the limit of $n/k$ for attacks with $n \to \infty \Rightarrow \varepsilon_n \to 0$:
  – i.e. $(n \to \infty \Rightarrow \varepsilon_n \to 0) \Rightarrow \lim n/k \ge 1/C$
• Privacy measure: recall that $C$ is the maximum value of the change in entropy

Why our approach is so powerful
• All other information-theoretic approaches stop with the notion of entropy, and do not approach the notion of a channel
• All they provide access to is Shannon's first (source coding) theorem: "entropy is the minimum number of bits required, on average, to represent a random variable"
• Communication channels provide access to other work on both information theory and coding
• Clearly just a beginning
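The lower bound on total queries can be evaluated directly. This is a minimal Python sketch, assuming the protocol behaves as a binary symmetric channel with lie probability 1/3 and that the $k$ target bits are independent with a common prior; `min_queries` and the other names are hypothetical. It divides the target entropy by the capacity, so for uniformly distributed bits (target entropy $k$) it reduces to $n \ge k/C$ up to the slack $\delta$.

```python
from math import log2


def h2(x: float) -> float:
    """Binary entropy in bits; 0 at the endpoints."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * log2(x) - (1 - x) * log2(1 - x)


def bsc_capacity(p_lie: float) -> float:
    """Capacity of a binary symmetric channel with crossover probability p_lie."""
    return 1.0 - h2(p_lie)


def min_queries(k: int, prior: float, p_lie: float) -> float:
    """Asymptotic lower bound on the number of queries for vanishing error.

    Hypothetical helper: assumes k independent target bits, each equal to 1
    with probability `prior`, and the protocol modelled as a BSC.
    Bound = target entropy / channel capacity.
    """
    capacity = bsc_capacity(p_lie)
    if capacity <= 0.0:
        raise ValueError("zero capacity: answers carry no information")
    return k * h2(prior) / capacity


if __name__ == "__main__":
    for prior in (0.5, 0.1, 0.01):
        n = min_queries(100, prior, 1 / 3)
        print(f"k=100 target bits, prior={prior}: at least ~{n:.0f} queries")
```

Skewed priors lower the bound because the tracker has less entropy to learn; the bound itself says nothing about how to construct an attack that reaches it.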

This work was influenced by conversations with Umesh Vazirani, Gadiel Seroussi, and Cormac Herley.
