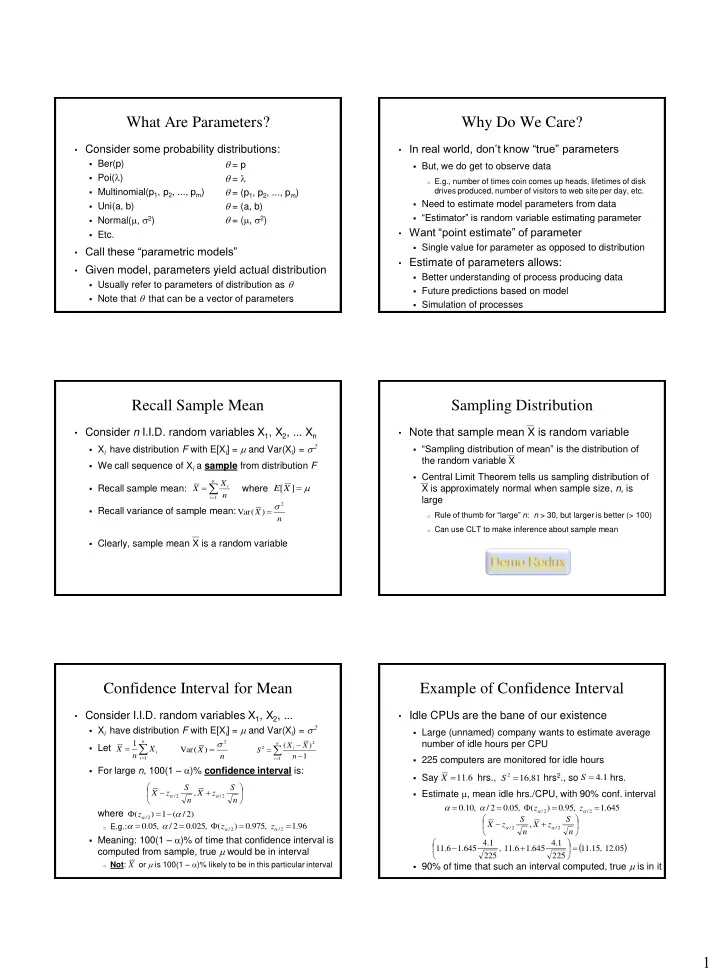

What Are Parameters? Why Do We Care? • In real world, don’t know “true” parameters • Consider some probability distributions: = p Ber(p) But, we do get to observe data Poi( l ) = l o E.g., number of times coin comes up heads, lifetimes of disk = (p 1 , p 2 , ..., p m ) Multinomial(p 1 , p 2 , ..., p m ) drives produced, number of visitors to web site per day, etc. = (a, b) Need to estimate model parameters from data Uni(a, b) “Estimator” is random variable estimating parameter Normal( m , 2 ) = ( m , 2 ) • Want “point estimate” of parameter Etc. Single value for parameter as opposed to distribution • Call these “parametric models” • Estimate of parameters allows: • Given model, parameters yield actual distribution Better understanding of process producing data Usually refer to parameters of distribution as Future predictions based on model Note that that can be a vector of parameters Simulation of processes Recall Sample Mean Sampling Distribution • Consider n I.I.D. random variables X 1 , X 2 , ... X n • Note that sample mean X is random variable X i have distribution F with E[X i ] = m and Var(X i ) = 2 “Sampling distribution of mean” is the distribution of the random variable X We call sequence of X i a sample from distribution F Central Limit Theorem tells us sampling distribution of n X m Recall sample mean: where X i E [ X ] X is approximately normal when sample size, n , is n i 1 large 2 Recall variance of sample mean: Var ( X ) o Rule of thumb for “large” n : n > 30, but larger is better (> 100) n o Can use CLT to make inference about sample mean Clearly, sample mean X is a random variable Confidence Interval for Mean Example of Confidence Interval • Consider I.I.D. random variables X 1 , X 2 , ... • Idle CPUs are the bane of our existence X i have distribution F with E[X i ] = m and Var(X i ) = 2 Large (unnamed) company wants to estimate average 1 n 2 number of idle hours per CPU 2 n ( X X ) Let X X Var ( X ) 2 i S i n n n 1 1 225 computers are monitored for idle hours i i 1 For large n , 100(1 – a )% confidence interval is: 2 Say hrs., hrs 2 ., so hrs. 4 . 1 X 11 . 6 S 16 . 81 S S S Estimate m , mean idle hrs./CPU, with 90% conf. interval , X z X z a a / 2 / 2 n n a a 0 . 10 , / 2 0 . 05 , ( z ) 0 . 95 , z 1 . 645 a a z a / 2 / 2 where ( ) 1 ( / 2 ) a / 2 S S a a X z , X z 0 . 05 , / 2 0 . 025 , ( ) 0 . 975 , 1 . 96 o E.g.: z z a a a a / 2 / 2 / 2 / 2 n n Meaning: 100(1 – a )% of time that confidence interval is 4 . 1 4 . 1 computed from sample, true m would be in interval 11 . 6 1 . 645 , 11 . 6 1 . 645 11 . 15 , 12 . 05 225 225 o Not : or m is 100(1 – a )% likely to be in this particular interval 90% of time that such an interval computed, true m is in it X 1

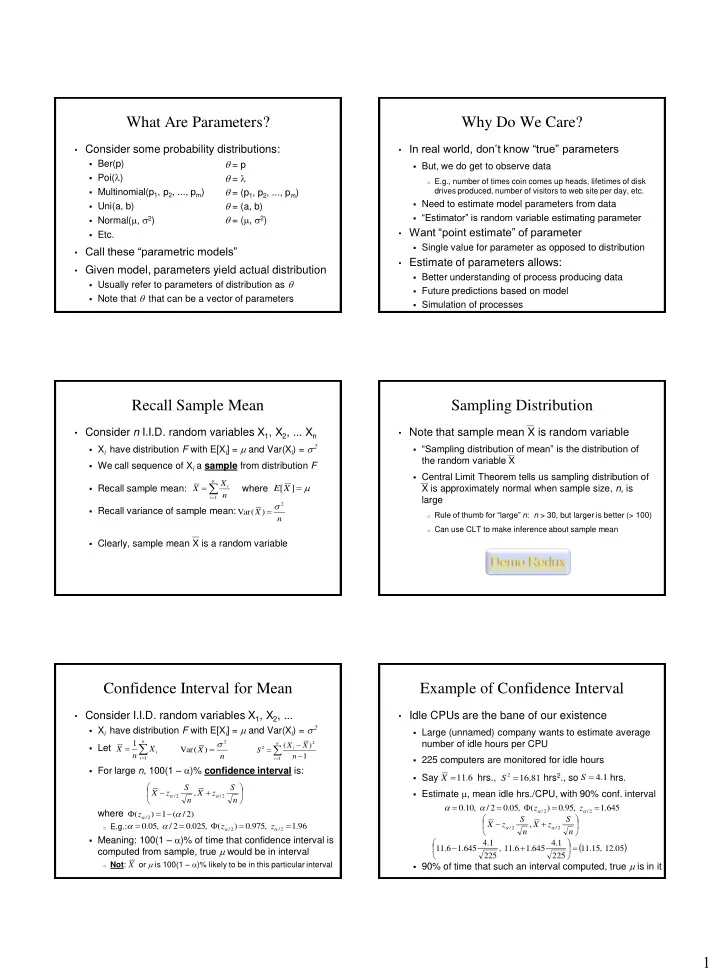

Method of Moments Examples of Method of Moments 1 n • Recall: n- th moment of distribution for variable X: • Recall the sample mean: ˆ X X m E [ X ] i 1 n i 1 m n [ ] E X This is method of moments estimator for E[X] n • Consider I.I.D. random variables X 1 , X 2 , ..., X n • Method of moments estimator for variance 1 n X i have distribution F ˆ 2 Estimate second moment: m X 2 i n 1 n 1 n 1 n 1 i 2 2 ˆ ˆ 2 ˆ k Var ( ) [ ] ( [ ]) Let X E X E X m X m X ... m X 1 i 2 i k i n n n i 1 i 1 i 1 ˆ ˆ 2 Estimate: Var ( ) ( ) X m m ˆ m are called the “sample moments” 2 1 i n 2 2 ( X X ) 1 n 1 n 1 n 2 2 2 2 1 i o Estimates of the moments of distribution based on data X X X X i i i n n n n i 1 i 1 i 1 • Method of moments estimators Recall sample variance: Estimate model parameters by equating “true” n 2 2 2 2 2 ( X X ) n ( X X ) n ( X 2 X X X ) n i ˆ ˆ ˆ 2 i i i i 1 ( ( ) 2 ) S m m moments to sample moments: m m 2 1 n 1 n 1 n 1 n 1 i i i 1 i 1 Small Samples = Problems Estimator Bias ˆ • What is difference between sample variance and • Bias of estimator: [ ] E MOM estimate for variance? When bias = 0, we call the estimator “unbiased” Imagine you have a sample of size n = 1 A biased estimator is not necessarily a bad thing 1 n What is sample variance? Sample mean is unbiased estimator X X i n 2 n ( X X ) i 1 2 i undefined S 2 n ( X X ) n 1 Sample variance is unbiased estimator 2 i S i 1 n 1 I.e., don’t really know variability of data i 1 n 1 S MOM estimator of variance = is biased 2 What is MOM estimate of variance? n 1 n 2 2 2 2 o Asymptotically less biased as n ( X X ) ( X X ) 1 i 1 i i i i 0 1 n For large n , either sample variance or MOM estimate I.e., have complete certainty about distribution! of variance is fine. o There is no variance Estimator Consistency Method of Moments with Bernoulli ˆ • Estimator “consistent”: for e > 0 e • Consider I.I.D. random variables X 1 , X 2 , ..., X n lim P (| | ) 1 n X i ~ Ber( p ) As we get more data, estimate should deviate from true value by at most a small amount • Estimate p This is actually known as “weak” consistency 1 n ˆ ˆ p E [ X ] m X X p Note similarity to weak law of large numbers: i 1 i n i 1 m e lim (| | ) 0 P X Can use estimate of p for X ~ Bin( n , p ) n Equivalently: If you know what n is, you don’t need to estimate that m e lim (| | ) 1 P X n Establishes sample mean as consistent estimate for m Generally, MOM estimates are consistent 2

Method of Moments with Poisson Method of Moments with Normal • Consider I.I.D. random variables X 1 , X 2 , ..., X n • Consider I.I.D. random variables X 1 , X 2 , ..., X n X i ~ Poi( l ) X i ~ N( m , 2 ) • Estimate l • Estimate m 1 n 1 n ˆ l l m m ˆ ˆ ˆ [ ] [ ] E X m X X E X m X X 1 1 i i i i n n 1 1 i i But note that for Poisson, l = Var(X i ) as well! • Now estimate 2 Could also use method of moments to estimate: m 2 ˆ ˆ 2 ( ) m 2 1 n 2 2 ( ) n 2 X X 2 n n n ( X X ) ˆ 1 1 1 l 2 2 ˆ ˆ 2 i l [ ] [ ] ( ) i 1 2 m ˆ 2 i E X E X m m 2 2 i 1 X X X 1 i 2 1 i i n n n n n i 1 i 1 i 1 Usually, use first moment estimate More generally, use the one that’s easiest to compute Method of Moments with Uniform • Consider I.I.D. random variables X 1 , X 2 , ..., X n X i ~ Uni( a , b ) Estimate mean: 1 n m ˆ m ˆ m X 1 i n i 1 Estimate variance: n 2 2 ( X X ) 2 ˆ ˆ 2 i ˆ 2 ( ) i 1 m m 2 1 n 2 a b ( b a ) m 2 For Uni( a , b ), know that: and 2 12 Solve (two equations, two unknowns): o Set b = 2 m – a , substitute into formula for 2 and solve: ˆ ˆ ˆ ˆ 3 and 3 a X b X 3

Recommend

More recommend