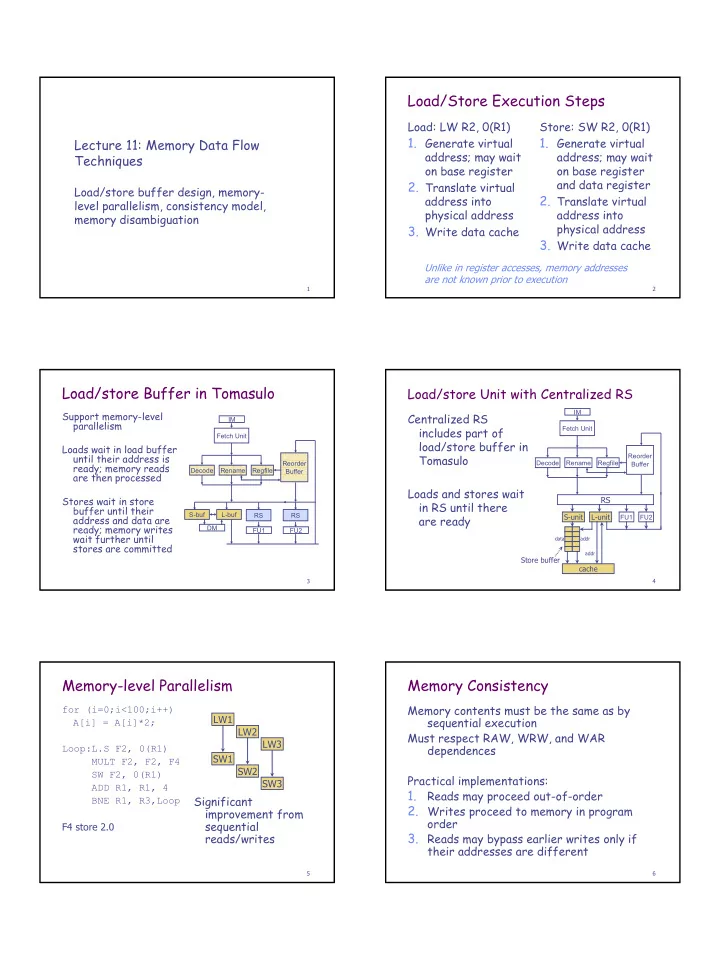

Lecture 11: Memory Data Flow Techniques

Load/store buffer design, memory-level parallelism, consistency model, memory disambiguation

Load/Store Execution Steps

Load: LW R2, 0(R1)

1. Generate virtual address; may wait on base register
2. Translate virtual address into physical address
3. Read data cache

Store: SW R2, 0(R1)

1. Generate virtual address; may wait on base register and data register
2. Translate virtual address into physical address
3. Write data cache

Unlike register accesses, memory addresses are not known prior to execution.

Load/store Buffer in Tomasulo

Supports memory-level parallelism.

Loads wait in the load buffer until their address is ready; memory reads are then processed.

Stores wait in the store buffer until their address and data are ready; memory writes wait further until the stores are committed.

[Figure: IM, Fetch Unit, Decode/Rename/Regfile, Reorder Buffer; S-buf, L-buf, and RS entries feeding S-unit, L-unit, FU1, and FU2; DM]

Load/store Unit with Centralized RS

The centralized RS includes part of the load/store buffer in Tomasulo.

Loads and stores wait in the RS until they are ready.

[Figure: IM, Fetch Unit, Decode/Rename/Regfile, Reorder Buffer; centralized RS feeding the load/store unit, FU1, and FU2; store buffer (addr, data); cache]

Memory-level Parallelism

    for (i=0;i<100;i++)
        A[i] = A[i]*2;

    Loop: L.S   F2, 0(R1)
          MULT  F2, F2, F4
          SW    F2, 0(R1)
          ADD   R1, R1, 4
          BNE   R1, R3, Loop

F4 holds 2.0.

[Figure: timeline of LW1, LW2, LW3 and SW1, SW2, SW3]

Significant improvement over sequential reads/writes.

Memory Consistency

Memory contents must be the same as those produced by sequential execution: RAW, WAW, and WAR dependences must be respected.

Practical implementations:

1. Reads may proceed out of order.
2. Writes proceed to memory in program order.
3. Reads may bypass earlier writes only if their addresses are different.
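
The first two execution steps, shared by `LW` and `SW`, can be sketched in C. This is a minimal model under stated assumptions, not how any particular processor does it: 32-bit addresses, hypothetical 4 KiB pages, and a hypothetical single-level `page_table` with 16 entries. A real design would consult a TLB first. `gen_vaddr` and `translate` are illustrative names.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical parameters: 4 KiB pages and a tiny single-level page table. */
#define PAGE_BITS 12u
#define PAGE_SIZE (1u << PAGE_BITS)
#define NUM_PAGES 16u

/* page_table[vpn] holds the physical frame number; valid[vpn] marks mapped pages. */
static uint32_t page_table[NUM_PAGES];
static bool     valid[NUM_PAGES];

/* Step 1: virtual address = base register + sign-extended offset,
   e.g. 0(R1) uses base = R1 and offset = 0. */
static uint32_t gen_vaddr(uint32_t base_reg, int16_t offset)
{
    return base_reg + (uint32_t)(int32_t)offset;
}

/* Step 2: translate virtual -> physical; returns false on a page fault. */
static bool translate(uint32_t vaddr, uint32_t *paddr)
{
    uint32_t vpn = vaddr >> PAGE_BITS;
    uint32_t page_offset = vaddr & (PAGE_SIZE - 1u);
    if (vpn >= NUM_PAGES || !valid[vpn])
        return false;
    *paddr = (page_table[vpn] << PAGE_BITS) | page_offset;
    return true;
}
```

For `LW R2, 0(R1)` the load would compute `gen_vaddr(R1, 0)` and then call `translate`; only after that can step 3 access the data cache.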

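Rule 3 of the practical implementations (reads may bypass earlier writes only if their addresses differ) amounts to a check over the older stores. The sketch below is a non-speculative version under simplifying assumptions. Every access is a whole word, so addresses either match exactly or do not overlap. A store whose address is not yet computed is treated as a possible conflict. `store_entry` and `load_may_bypass` are hypothetical names.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical store-buffer entry: the address may not be computed yet. */
typedef struct {
    bool     addr_known;
    uint32_t addr;
} store_entry;

/* A load may bypass the older stores older[0..n-1] only if every one of
   them has a known address that differs from the load's address.
   An unknown store address is treated conservatively as a possible match. */
static bool load_may_bypass(uint32_t load_addr, const store_entry *older, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        if (!older[i].addr_known || older[i].addr == load_addr)
            return false;
    }
    return true;
}
```

Rules 1 and 2 need no check of this kind: loads among themselves may reorder freely, and stores simply drain to memory in program order.
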
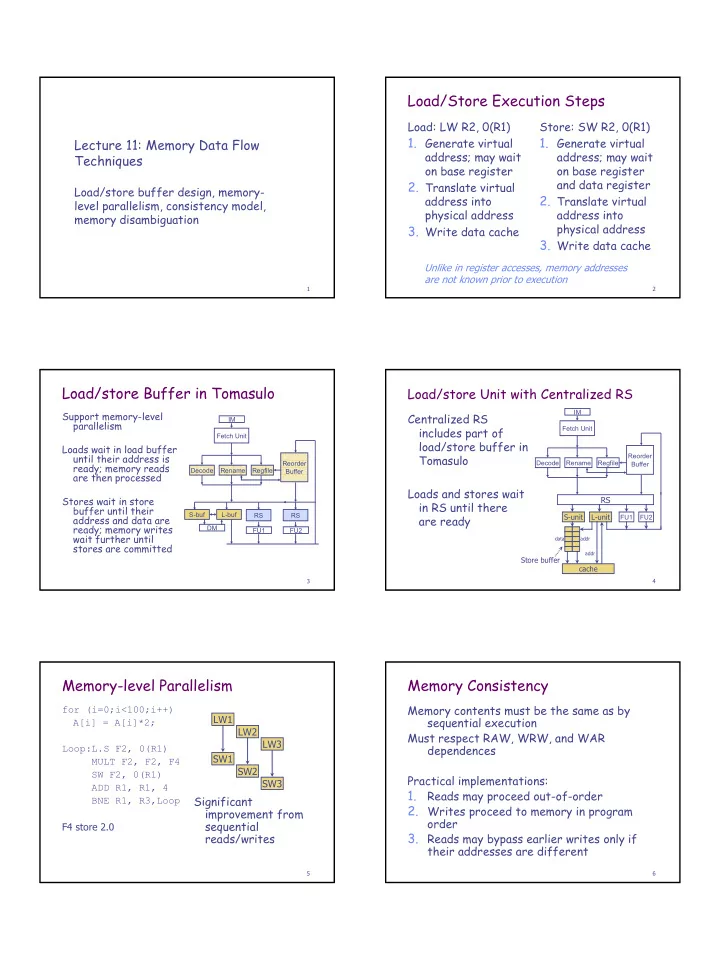

Store Stages in Dynamic Execution

1. Wait in RS until base address and store data are available (ready)
2. Move to store unit for address calculation and address translation
3. Move to store buffer (finished)
4. Wait for ROB commit (completed)
5. Write to data cache (retired)

Stores always retire in order, for WAW and WAR dependences.

[Figure: RS feeding the store unit and load unit; store buffer entries marked finished and completed; D-cache]

Source: Shen and Lipasti, page 197

Load Bypassing and Memory Disambiguation

To exploit memory parallelism, loads have to bypass writes, but this may violate RAW dependences.

Dynamic memory disambiguation: dynamic detection of memory dependences. Compare the load address with the address of every older store.

Load Bypassing Implementation

[Figure: RS issuing in order to the store unit and load unit; store buffer of (data, addr) entries searched for an address match; D-cache]

1. Address calculation
2. Address translation
3. If no match, update the destination register

Associative search for matching addresses. Assume in-order execution of loads/stores.

Load Forwarding

Load forwarding: if a load address matches an older write address, the data can be forwarded.

If a match is found, forward the related data to the destination register (in the ROB).

Multiple matches may exist; the last one wins.

[Figure: as for load bypassing, with a data path from the matching store buffer entry to the destination register]

In-order Issue Limitation

    for (i=0;i<100;i++)
        A[i] = A[i]/2;

    Loop: L.S   F2, 0(R1)
          DIV   F2, F2, F4
          SW    F2, 0(R1)
          ADD   R1, R1, 4
          BNE   R1, R3, Loop

Any store in the reservation station may block all following loads. When is F2 of the SW available? When is the next L.S ready? Assume reasonable FU latency and pipeline length.

Speculative Load Execution

Forwarding does not always work if some addresses are unknown.

No match: predict that the load has no RAW dependence on older stores. Flush the pipeline at commit if the prediction was wrong.

[Figure: RS issuing out of order to the load unit and store unit; finished load buffer; D-cache]

At completion, a store's address is compared with the finished load buffer. If match: flush pipeline.
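
The associative search used for load forwarding can be modeled in C as a scan of the stores older than the load. Assumptions: loads and stores execute in order, as on the Load Bypassing Implementation slide, so every older store address is known; accesses are whole words matched exactly; and the hypothetical `sq_entry` array is kept in program order with index 0 oldest. Scanning from the youngest entry is one simple way to make the last match win.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical store-queue entry for a store that has computed its
   address and data but has not yet written the D-cache. */
typedef struct {
    uint32_t addr;
    uint32_t data;
} sq_entry;

/* Associative search over the n_older stores older than the load, held in
   program order (sq[0] is the oldest). Scanning from the youngest end
   means that when several stores match, the most recent one wins.
   Returns true and sets *data on a match; on false the load reads the
   D-cache instead. */
static bool forward_from_stores(const sq_entry *sq, size_t n_older,
                                uint32_t load_addr, uint32_t *data)
{
    for (size_t i = n_older; i-- > 0; ) {
        if (sq[i].addr == load_addr) {
            *data = sq[i].data;
            return true;
        }
    }
    return false;
}
```

Hardware performs these comparisons in parallel; the loop only models which match is selected.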

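For speculative load execution, the check that catches a wrong prediction can be sketched as follows: when a store's address becomes known, it is compared with the loads that have already finished. The sequence number `seq` used to tell older from younger instructions, the `finished_load` struct, and the function name are hypothetical, and word-granularity exact matching is assumed.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical finished-load buffer entry: seq orders instructions in
   program order (larger = younger). */
typedef struct {
    uint64_t seq;
    uint32_t addr;
    bool     flush_at_commit;
} finished_load;

/* Called when a store with sequence number store_seq resolves its address.
   Any finished load that is younger than the store and read the same
   address executed past a true RAW dependence; mark it so the pipeline
   is flushed when that load reaches commit.
   Returns the number of loads marked. */
static size_t check_store_against_loads(finished_load *lb, size_t n,
                                        uint64_t store_seq, uint32_t store_addr)
{
    size_t violations = 0;
    for (size_t i = 0; i < n; i++) {
        if (lb[i].seq > store_seq && lb[i].addr == store_addr) {
            lb[i].flush_at_commit = true;
            violations++;
        }
    }
    return violations;
}
```

Marking the load instead of flushing immediately matches the slide's policy of flushing the pipeline at commit.
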
Alpha 21264 Pipeline

[Figure: int issue queue and FP issue queue; Addr ALU, Int ALU, Int ALU, Addr ALU, FP ALU, FP ALU; two Int RF (80) copies and FP RF (72); D-TLB, L-Q, S-Q, AF; dual D-cache]

Alpha 21264 Load/Store Queues

32-entry load queue, 32-entry store queue

Speculative Memory Disambiguation

[Figure: the fetch PC indexes a 1024-entry, 1-bit table; the bit accompanies the renamed instruction into the int issue queue]

• When a load is trapped at commit, set the stWait bit in the table, indexed by the load's PC.

• When the load is fetched, get its stWait bit from the table.

• The load waits in the issue queue until older stores are issued.

• The stWait table is cleared periodically.

Load Bypassing, Forwarding, and RAW Detection

[Figure: ROB, LQ, SQ, IQ, and D-cache, with address-match paths for load forwarding]

At commit, the ROB head is either a load or a store:

Load: WAIT if the LQ head is not completed, then move the LQ head.

Store: mark the SQ head as completed, then move the SQ head.

If a load matches a store in the SQ: forwarding.

If a store matches a load in the LQ: mark a store-load trap to flush the pipeline (at commit).

Architectural Memory States

[Figure: LQ and SQ hold completed entries; the committed states are L1-Cache, L2-Cache, L3-Cache (optional), Memory, and Disk, Tape, etc.]

Memory request: search the hierarchy from top to bottom.

Summary of Superscalar Execution

Instruction flow techniques: branch prediction, branch target prediction, and instruction prefetch

Register data flow techniques: register renaming, instruction scheduling, in-order commit, mis-prediction recovery

Memory data flow techniques: load/store units, memory consistency

Source: Shen & Lipasti
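
The stWait mechanism on the Speculative Memory Disambiguation slide can be sketched as a small table. The 1024 entries come from the slide. The index function (PC bits 11:2) and the byte-per-entry storage are assumptions for illustration, not the 21264's documented indexing, and how often `stwait_clear` is called is left to the caller.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define STWAIT_ENTRIES 1024u   /* 1024 one-bit entries, as on the slide */

/* One byte per entry for simplicity; hardware would use single bits. */
static uint8_t stwait[STWAIT_ENTRIES];

/* Hypothetical index: drop the 2 low PC bits (4-byte instructions) and
   keep the next 10 bits. */
static unsigned stwait_index(uint64_t pc)
{
    return (unsigned)((pc >> 2) & (STWAIT_ENTRIES - 1u));
}

/* A load was trapped at commit because it bypassed a store it depended on. */
static void stwait_on_trap(uint64_t load_pc)
{
    stwait[stwait_index(load_pc)] = 1;
}

/* At fetch: if true, the load waits in the issue queue until older
   stores have issued. */
static bool stwait_should_wait(uint64_t load_pc)
{
    return stwait[stwait_index(load_pc)] != 0;
}

/* Periodic clearing, so loads are not held back forever. */
static void stwait_clear(void)
{
    memset(stwait, 0, sizeof stwait);
}
```

Because the table is indexed by only part of the PC, two loads can share an entry; that aliasing only makes an unrelated load wait, it never breaks correctness.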

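The rule "search the hierarchy from top to bottom" can be sketched as a probe of the levels, fastest first. The block size, per-level capacity, and the `level` struct are hypothetical. Real caches use set-indexed tag lookup rather than a linear scan, and this model ignores fills and replacement entirely.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define BLOCK_BITS 6u       /* hypothetical 64-byte blocks */
#define MAX_BLOCKS 8u       /* hypothetical tiny capacity per level */

/* Hypothetical model of one cache level: the block addresses it holds. */
typedef struct {
    const char *name;
    uint32_t    blocks[MAX_BLOCKS];
    size_t      count;
} level;

static bool level_holds(const level *lv, uint32_t addr)
{
    uint32_t block = addr >> BLOCK_BITS;
    for (size_t i = 0; i < lv->count; i++)
        if (lv->blocks[i] == block)
            return true;
    return false;
}

/* Search from the top (index 0, e.g. L1) downward and return the index of
   the first level that holds the address; n_levels means "not cached,
   go to memory". */
static size_t search_hierarchy(const level *levels, size_t n_levels, uint32_t addr)
{
    for (size_t i = 0; i < n_levels; i++)
        if (level_holds(&levels[i], addr))
            return i;
    return n_levels;
}
```
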
