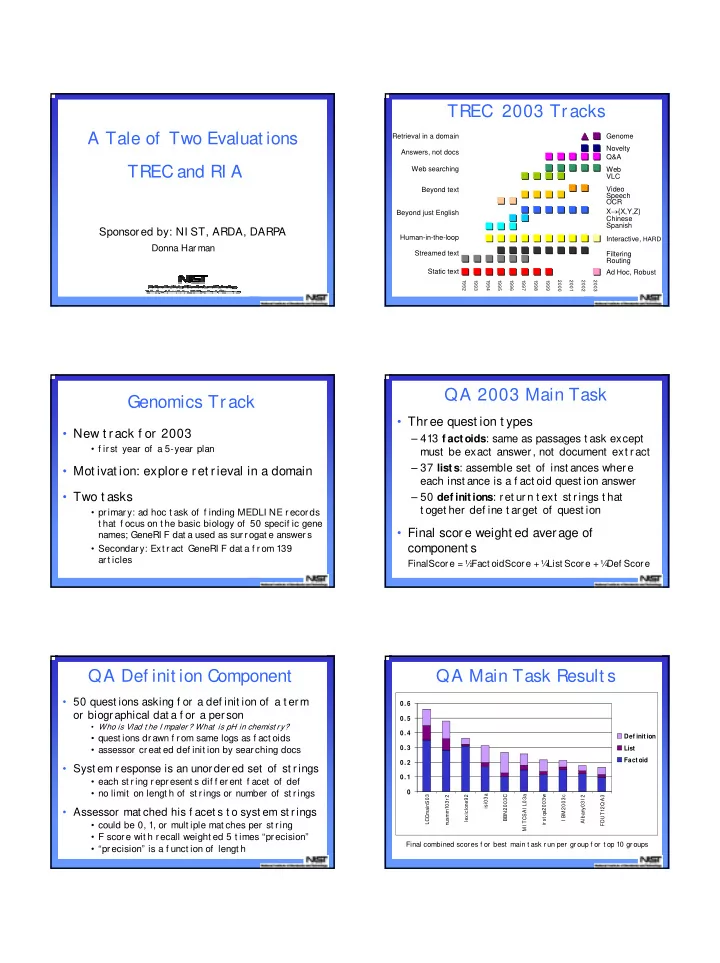

A Tale of Two Evaluations: TREC and RIA
Donna Harman
Sponsored by: NIST, ARDA, DARPA

TREC 2003 Tracks
[Figure: timeline of TREC tracks by theme, 1992 to 2003]
• Retrieval in a domain: Genome
• Answers, not docs: Novelty, Q&A
• Web searching: Web, VLC
• Beyond text: Video, Speech, OCR
• Beyond just English: X → {X,Y,Z}, Chinese, Spanish
• Human-in-the-loop: Interactive, HARD
• Streamed text: Filtering, Routing
• Static text: Ad Hoc, Robust

QA 2003 Main Task
• Three question types
  – 413 factoids: same as passages task except must be exact answer, not document extract
  – 37 lists: assemble set of instances where each instance is a factoid question answer
  – 50 definitions: return text strings that together define target of question
• Final score weighted average of components
  FinalScore = ½ FactoidScore + ¼ ListScore + ¼ DefScore

Genomics Track
• New track for 2003
  • first year of a 5-year plan
• Motivation: explore retrieval in a domain
• Two tasks
  • Primary: ad hoc task of finding MEDLINE records that focus on the basic biology of 50 specific gene names; GeneRIF data used as surrogate answers
  • Secondary: extract GeneRIF data from 139 articles

QA Definition Component
• 50 questions asking for a definition of a term or biographical data for a person
  • Who is Vlad the Impaler? What is pH in chemistry?
  • questions drawn from same logs as factoids
  • assessor created definition by searching docs
• System response is an unordered set of strings
  • each string represents different facet of def
  • no limit on length of strings or number of strings
• Assessor matched his facets to system strings
  • could be 0, 1, or multiple matches per string
• F score with recall weighted 5 times “precision”
  • “precision” is a function of length

QA Main Task Results
[Figure: bar chart, y-axis 0 to 0.6, with Definition, List, and Factoid components for the runs isi03a, LCCmainS03, nusmm103r2, lexiclone92, BBN2003C, MITCSAIL03a, irstqa2003w, IBM2003c, Albany03I2, FDUT12QA3]
Final combined scores for best main task run per group for top 10 groups
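The two scoring rules on the QA slides can be sketched in a few lines. This is a minimal illustration, not the official evaluation code: the function names are hypothetical, the component scores in the usage lines are made up, and reading "recall weighted 5 times precision" as the standard F-measure with β = 5 is an assumption. The length-based "precision" is taken as an input because the slide does not give its formula.

```python
def final_score(factoid: float, list_score: float, definition: float) -> float:
    """Weighted average from the slide: 1/2 factoid + 1/4 list + 1/4 definition."""
    return 0.5 * factoid + 0.25 * list_score + 0.25 * definition


def f_beta(precision: float, recall: float, beta: float = 5.0) -> float:
    """General F-measure; beta > 1 favors recall.

    beta = 5 is an assumed reading of "recall weighted 5 times precision".
    """
    if precision == 0.0 and recall == 0.0:
        return 0.0  # avoid dividing by zero when both inputs are zero
    b2 = beta * beta
    return (b2 + 1.0) * precision * recall / (b2 * precision + recall)


# Hypothetical component scores, for illustration only.
print(final_score(factoid=0.6, list_score=0.4, definition=0.4))
print(f_beta(precision=0.5, recall=0.8))
```

With β = 5 the F score stays close to recall even when precision is low, which matches the slide's emphasis on covering the assessor's facets rather than on brevity.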

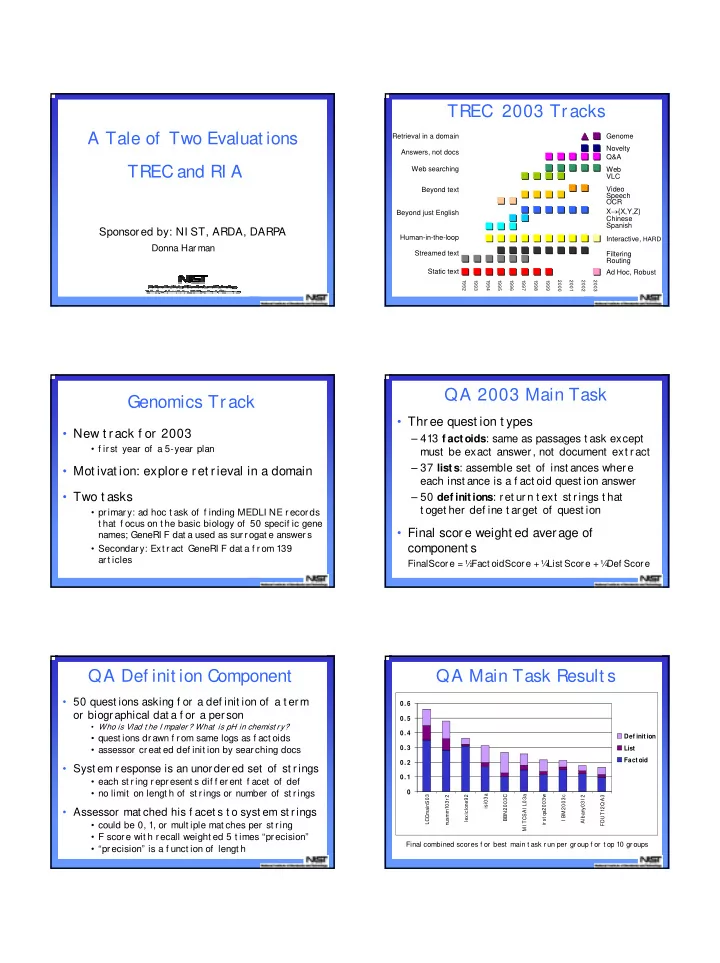

HARD track
• Goal: improve ad hoc retrieval by customizing the search to the user using:
  1) Metadata from topic statements
    1) the purpose of the search
    2) the genre or granularity of the desired response
    3) the user’s familiarity with the subject matter
    4) biographical data about user (age, sex, etc.)
  2) Clarifying forms
    1) assessor (surrogate user) spends at most 3 minutes/topic responding to topic-specific form
    2) example uses: sense resolution, relevance judgments

Robust Retrieval Track
• New track in 2003
• Motivations:
  • focus on poorly performing topics since average effectiveness usually masks huge variance
  • bring traditional ad hoc task back to TREC
• Task
  • 100 topics
    – 50 old topics from TRECs 6-8
    – 50 new topics created by 2003 assessors
  • TREC 6-8 document collection: disks 4&5 (no CR)
  • standard trec_eval evaluation plus new measures

Retrieval Methods
• CUNY and Waterloo expanded using the web (and possibly other collections)
  • effective, even for poor performers
• QE based on target collection generally improved mean scores, but did not help poor performers
• Approaches for poor performers
  • predict when to expand
  • fuse results from multiple runs
  • reorder top ranked based on clustering of retrieved set

2003 Robust Retrieval Track
[Figure: recall-precision curves, Recall 0 to 1 on the x-axis and Precision 0 to 1 on the y-axis, for 100 Topics, Old Topics, and New Topics]

The Problem
[Figure: Average Precision, 0 to 1, for the series best, ok8alx, and CL99XT]

RIA Workshop
• In the summer of 2003, NIST organized a 6-week workshop called Reliable Information Access (RIA)
• RIA was part of the Northeast Regional Research Center summer workshop series sponsored by the Advanced Research and Development Activity of the US Department of Defense
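The Robust track's motivation, that average effectiveness masks large per-topic variance, can be illustrated with a small sketch. The helper names and the "worst quarter" summary are assumptions chosen for illustration; they are not the track's official new measures, which the slides do not define.

```python
from statistics import mean


def average_precision(ranking, relevant):
    """Sum of precision at each rank holding a relevant doc, divided by the
    total number of relevant docs for the topic."""
    hits = 0
    total = 0.0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant) if relevant else 0.0


def summarize(ap_by_topic, worst_fraction=0.25):
    """Return (mean AP over all topics, mean AP over the worst topics).

    worst_fraction is a hypothetical parameter, not a TREC setting.
    """
    scores = sorted(ap_by_topic.values())
    k = max(1, int(len(scores) * worst_fraction))
    return mean(scores), mean(scores[:k])


# Made-up per-topic scores: one failing topic hides behind a decent mean.
overall, worst = summarize({"t1": 0.8, "t2": 0.7, "t3": 0.6, "t4": 0.02})
print(overall, worst)
```

Reporting a summary of the worst topics next to the mean makes a failing topic visible even when the overall average looks acceptable.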

Participants (28)
• Donna Harman and Chris Buckley (coordinators)
• City University, London: Andy MacFarlane
• Clairvoyance: David Evans, David Hull, Jesse Montgomery
• Carnegie Mellon U: Jamie Callan, Paul Ogilvie, Yi Zhang, Luo Si, Kevyn Collins-Thompson
• MITRE: Warren Greiff
• NIST: Ian Soboroff and Ellen Voorhees
• U. of Massachusetts at Amherst: Andres Corrada-Emmanuel
• U. of New York at Albany: Tomek Strzalkowski, Paul Kantor, Sharon Small, Ting Liu, Sean Ryan
• U. Waterloo: Charlie Clarke, Gordon Cormack, Tom Lyman, Egidio Terra
• Other students: Zhenmei Gu, Luo Ming, Robert Warren, Jeff Terrace

Workshop Goals
• To learn how to customize IR systems for optimal performance on any given query
• Initial strong focus on relevance feedback and pseudo-relevance (blind) feedback
• If time, expand to other tools
• Apply the results to question answering in multiple ways

Overall approach
• Massive failure analysis done manually for a single run by each system
• Statistical analysis using many “identical” feedback runs from all systems
• Use the results of the above to group queries needing similar treatment

Failure analysis
1) Chose 44 out of 150 topics that were "failures"
  a) Mean Average Precision <= average
  b) have the most variance across systems
2) Use results from 6 systems’ standard runs
3) 6 people per topic (one per system) spent 45-60 minutes looking at those results
4) Short 6-person group discussion to come to consensus about topic
5) Individual + overall report (from templates).
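Step 1 of the failure analysis, choosing "failure" topics, might look like the sketch below. It is one possible reading of criteria (a) and (b), not the workshop's actual procedure: the function name, the input layout, and the choice of population variance are all assumptions.

```python
from statistics import mean, pvariance


def select_failure_topics(ap, n_topics):
    """Pick topics whose mean AP is at or below the overall average,
    ordered by how much the systems disagree on them.

    ap: {topic_id: {system_name: average precision}} (hypothetical layout).
    """
    topic_mean = {t: mean(list(s.values())) for t, s in ap.items()}
    overall = mean(list(topic_mean.values()))
    # Criterion (a): mean average precision <= average over all topics.
    candidates = [t for t, m in topic_mean.items() if m <= overall]
    # Criterion (b): prefer the topics with the most variance across systems.
    candidates.sort(key=lambda t: pvariance(list(ap[t].values())), reverse=True)
    return candidates[:n_topics]


# Made-up scores for two systems on four topics.
scores = {
    "t1": {"a": 0.9, "b": 0.8},
    "t2": {"a": 0.1, "b": 0.5},
    "t3": {"a": 0.2, "b": 0.2},
    "t4": {"a": 0.3, "b": 0.35},
}
print(select_failure_topics(scores, n_topics=2))
```

In the workshop's setting the call would ask for 44 topics out of 150, scored over the participating systems' standard runs.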
Preliminary conclusions from failure analysis
• Systems agreed on causes of failure much more than had been expected
• Systems retrieve different documents, but don’t retrieve different classes of documents
• Majority of failures could be fixed with better feedback and term weighting and query analysis that gives guidance as to the relative importance of the terms

Grouping of queries by failure
• All systems emphasize one aspect; miss another: 21
  – 362: Identify incidents of human smuggling
• Need outside expansion of “general” term: 8
  – 438: What countries are experiencing an increase in tourism?
• Missing difficult aspect (semantics in query): 7
  – 401: What language and cultural difference impede the integration of foreign minorities in Germany?
• General IR technical failure: 8

List of experiments run
• bf_base: base runs for all systems both using blind feedback (bf) and no feedback
• bf_numdocs: vary # docs used for bf from 0-100
• bf_numdocs_relonly: same but only use relevant
• bf_numterms: vary # terms added from 0-100
• bf_pass_numterms: same but use passages as source instead of documents
• bf_swap-doc: use documents from other systems
• bf_swap_doc_term: expand using docs and terms
• bf_swap_doc_cluster: use CLARIT clusters
• bf_swap_doc_fuse: use fusion of other systems

(Blind) Relevance Feedback
[Figure: ranked list retrieved for the query "What are new methods of producing steel?", with some documents marked by an asterisk; figure labels: bf_numterms_passages; bf_numdocs, relevant only]
– * FBIS4-53871 title1 ….
– FT923-9006 title2 ….
– * FBIS4-27797 .
– * FT944-1455 .
– FBIS3-24678 .
– FT923-9281 .
– * FT923-10837 .
– FT922-11827 .
– FT941-11316 .

Additional experiments
• topic_analysis: producing & comparing groups of topics using assorted measures
• qa_standard: effect of IR algorithms on QA using docs/passages
• topic_coverage: HITIQA experiment using all systems

Preliminary Lessons Learned
1) Failure analysis
  a) systems tend to fail for the same reason
  b) getting the right concepts in system query critical
2) Surprises that require more analysis
  a) bf_swap_docs: some systems better at providing docs
  b) some systems more robust during expansion
  c) bf_num_docs relevant only: some relevant docs are bad feedback docs
  d) no topic in which there were “golden” terms in top 1-4 feedback terms
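The two knobs varied in the bf_numdocs and bf_numterms experiments, how many top-ranked documents feed the expansion and how many terms are added, can be shown in a minimal blind-feedback sketch. Selecting expansion terms by raw frequency is an assumption made to keep the example short; the participating systems used their own weighting schemes, and the function and parameter names are hypothetical.

```python
from collections import Counter


def blind_feedback(query_terms, ranked_docs, num_docs=10, num_terms=20,
                   stopwords=frozenset()):
    """Expand a query with the most frequent terms of the top-ranked docs.

    ranked_docs: list of token lists, best first, from an initial retrieval.
    No relevance judgments are used, hence "blind".
    """
    counts = Counter()
    for tokens in ranked_docs[:num_docs]:
        counts.update(
            t for t in tokens if t not in stopwords and t not in query_terms
        )
    expansion = [term for term, _ in counts.most_common(num_terms)]
    return list(query_terms) + expansion


# Toy tokenized documents, for illustration only.
docs = [
    ["steel", "furnace", "arc", "furnace"],
    ["arc", "furnace", "steel", "scrap"],
    ["tourism", "growth"],
]
print(blind_feedback(["steel", "production"], docs, num_docs=2, num_terms=2))
```

Setting num_docs or num_terms to 0 returns the original query, which corresponds to the no-feedback end of the ranges swept in those experiments. A relevant-only variant would filter ranked_docs by relevance judgments before counting.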

Impact
• 1620 final runs made on TREC 678 collection
• This information will be publicly distributed to open the way for important further analysis within the IR community
• Analysis within the workshop shows several promising measures for predicting blind relevance feedback failure
• Additionally much has been learned (and will be published) about the interaction of search engines, topics and data collections, leading to more research in this critical area

Workshop lessons learned
• Learning to “categorize” questions of a varied nature like TREC topics is much harder than anyone expected
• Doing massive and careful failure analysis across multiple systems is a big win
• Performing parallel experiments using multiple systems may be the only way of learning some general principles

Future
• TREC will continue (trec.nist.gov)
  – This year’s tracks likely to continue
    • QA: requests for required info + other info
  – One new track
    • investigate ad hoc evaluation methodologies for terabyte scale collections
• SIGIR 2004 workshop on RIA results
  – Many more details on what was done
  – Lots of time for discussion
  – Breakout sessions on where to go next
