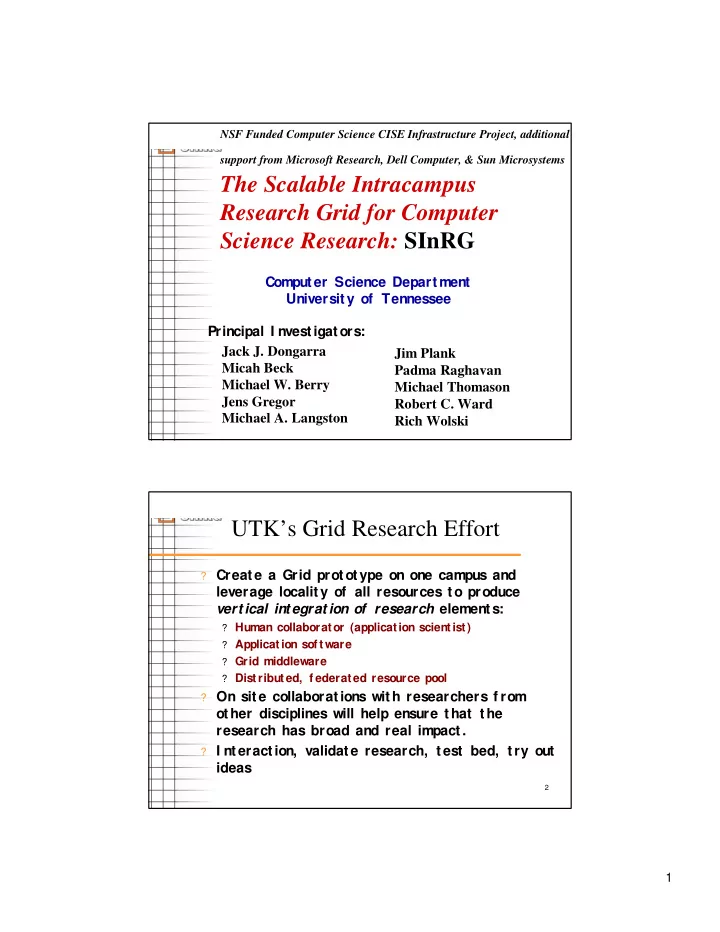

NSF Funded Computer Science CISE Infrastructure Project, additional support from Microsoft Research, Dell Computer, & Sun Microsystems

The Scalable Intracampus Research Grid for Computer Science Research: SInRG

Computer Science Department, University of Tennessee

Principal Investigators:
- Jack J. Dongarra
- Jim Plank
- Micah Beck
- Padma Raghavan
- Michael W. Berry
- Michael Thomason
- Jens Gregor
- Robert C. Ward
- Michael A. Langston
- Rich Wolski

UTK's Grid Research Effort
- Create a Grid prototype on one campus and leverage locality of all resources to produce vertical integration of research elements:
  - Human collaborator (application scientist)
  - Application software
  - Grid middleware
  - Distributed, federated resource pool
- On-site collaborations with researchers from other disciplines will help ensure that the research has broad and real impact.
  - Interaction, validate research, test bed, try out ideas

Objective & Collaborative Research Projects
- Approach:
  - Build a computational grid for Computer Science research that mirrors the underlying technologies and types of research collaboration that are taking place on the national technology grid.
- Leverage Collaborative Research Projects:
  - Advanced Machine Design: Bouldin, Langston, Raghavan
  - Molecular Design: Cummings, Ward
  - Medical Imaging: Smith, Gregor, Thomason
  - JICS and HBCU: Halloy, Mann
  - Computational Ecology: Gross, Hallam, Berry
  - Projects within Dept.: Beck, Dongarra, Plank, Wolski

SInRG's Vision
- SInRG provides a testbed
  - CS grid middleware
  - Computational Science applications
- Many hosts, co-existing in a loose confederation tied together with high-speed links.
- Users have the illusion of a very powerful computer on the desk.
- Spectrum of users

Properties of SInRG at UTK
- Genuine Grid
  - realistically mirroring the essential features that make computational grids both promising and problematic
- Designed for Research
  - support experimental approach by allowing PIs to rapidly deploy new ideas and prototypes
  - complements PACI focus on hardening & deployment
- Communication between researchers leveraging locality
  - centered in one department but collaborative across campus
- Used as part of normal research and education
  - must be scalable in users and resources

Grid Service Clusters (GSC) in the Grid Fabric
- Computation
  - used to run Grid controlware
  - schedulable to augment other CPUs on Grid
- Storage
  - State management
    - data caching
    - migration and fault-tolerance
- Network
  - allows dynamic reconfiguration of resources

Challenges
- Provide a solid, integrated foundation to build applications
  - Hide as much as possible the underlying physical infrastructure
  - Deliver high performance to the application
- Support access, location, fault transparency, state management, and scheduling
- Enable inter-operation of components

Related UTK Research in Grid Based Computing
- Message and Network based computing
  - Experience with PVM, MPI, Harness, & NetSolve
- Tennessee-Oak Ridge Cluster (TORC)
  - Wide-area cluster computing
- Numerical Libraries
  - Grid aware
- Fault Tolerant library software
  - Built into software/library
- Graph Scheduling
  - Partitioning and Graph algorithms
- Collaborations with other related efforts
  - Globus, Legion, Condor, …

SInRG Software Infrastructure
- NetSolve
  - programming abstractions
  - intelligent scheduling framework
  - hides complexity
- Internet Backplane Protocol (IBP)
  - distributed state management
  - application driven caching
- Application-Level Scheduling (AppLeS)
  - dynamic schedulers
- Network Weather Service
  - dynamic performance prediction
- EveryWare
  - toolkit for leveraging multiple Grid infrastructures and resources
- Fault-tolerance
  - process robustness and migration

SInRG Today
- GSC #1 - Dell PowerEdge service cluster, Linux
  - 18 Dell PowerEdge 1300, dual 500 MHz Pentium III
  - 2 Dell PowerEdge 2400, dual 600 MHz Pentium III
- GSC #2 - Sun Enterprise service cluster, Solaris
  - 17 Sun Enterprise 220R, dual 450 MHz UltraSPARC-II
- GSC #3 - Donation from Microsoft, Windows NT
  - 4 Dell PowerEdge 6350, quad 550 MHz Pentium III Xeon
- GSC #4 - TORC, Linux
  - 10 dual-processor 550 MHz Pentium II
- Gigabit network
  - Foundry Networks FastIron II (4 slot) with 26 fiber ports
  - Cisco Catalyst 6000 with 26 fiber ports
  - SysKonnect SK-NET GE-SX (SK-9843) 1000Base-SX with SC fiber connectors
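To get a feel for the scale of the four service clusters listed under SInRG Today, the inventory can be tallied with a few lines of Python. This is only an illustrative sketch: the `NodeGroup` record and the helper function names are hypothetical, and it assumes that "dual" means 2 processors per node and "quad" means 4. The node counts and clock speeds are copied from the slide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """One line of the hardware inventory (hypothetical record type)."""
    cluster: str
    model: str
    nodes: int
    cpus_per_node: int  # assumption: dual = 2, quad = 4
    mhz: int


# Figures transcribed from the "SInRG Today" slide.
INVENTORY = [
    NodeGroup("GSC #1", "Dell PowerEdge 1300", 18, 2, 500),
    NodeGroup("GSC #1", "Dell PowerEdge 2400", 2, 2, 600),
    NodeGroup("GSC #2", "Sun Enterprise 220R", 17, 2, 450),
    NodeGroup("GSC #3", "Dell PowerEdge 6350", 4, 4, 550),
    NodeGroup("GSC #4", "TORC dual-processor node", 10, 2, 550),
]


def cpus_by_cluster(inventory):
    """Return a dict mapping each cluster name to its processor count."""
    totals = {}
    for group in inventory:
        cpus = group.nodes * group.cpus_per_node
        totals[group.cluster] = totals.get(group.cluster, 0) + cpus
    return totals


def total_nodes(inventory):
    """Count machines across all clusters."""
    return sum(group.nodes for group in inventory)


def total_cpus(inventory):
    """Count processors across all clusters."""
    return sum(group.nodes * group.cpus_per_node for group in inventory)


if __name__ == "__main__":
    for cluster, cpus in cpus_by_cluster(INVENTORY).items():
        print(f"{cluster}: {cpus} processors")
    print(f"Total: {total_nodes(INVENTORY)} nodes, {total_cpus(INVENTORY)} processors")
```

Under those assumptions the tally comes to 51 nodes and 110 processors across the four clusters.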

