Regression Testing vs. Regression Testing Development Testing • Developed first version of software • During regression testing, an • Adequately tested the first version established test set may be • Modified the software; version 2 now available for reuse needs to be tested • How to test version 2? • Approaches • Approaches – Retest entire software from scratch – Retest all – Only test the changed parts, ignoring – Selective retest (selective regression unchanged parts since they have already testing) ← Main focus of research been tested – Could modifications have adversely affected unchanged parts of the software? Selective Retesting T Tests to rerun Tests not to rerun • Tests to rerun – Select those tests that will produce different output when run on P’ • Modification-revealing test cases • It is impossible to always find the set of modification-revealing test cases – (we cannot predict when P’ will halt for a test) – Select modification-traversing test cases • If it executes a new or modified statement in P’ or misses a statement in P’ that it executed in P 1

2

Cost of Regression Testing 2 1 3 1 3 2 1 3 2 3 1 2 Analysis 2 3 + Cost = C x 3 Cost = C y Retest All 2 3 3 1 Selective Retest 3 3 2 We want C x < C y 3 1 Key is the test selection algorithm/technique 1 3 We want to maintain the same “quality of testing” T’ = {t2, t3} Selective-retest Approaches Selective-retest Approaches • Safe approaches • Data-flow coverage-based approaches – Select every test that may cause the – Select tests that exercise data interactions modified program to produce different output that have been affected by modifications than the original program • E.g., select every test in T, that when executed on P, executed at least one def-use pair that has been • E.g., every test that when executed on P, executed at deleted from P’, or at least one def-use pair that has least one statement that has been deleted from P, at been modified for P’ least one statement that is new in or modified for P’ • Coverage-based approaches • Minimization approaches – Rerun tests that could produce different – Minimal set of tests that must be run to output than the original program. Use some meet some structural coverage criterion coverage criterion as a guide • E.g., every program statement added to or modified for P’ be executed (if possible) by at least one test in T 3

Selective-retest Approaches Factors to consider • Ad-hoc/random approaches • Testing costs – Time constraints • Fault-detection ability – No test selection tool available • Test suite size vs. fault-detection • E.g., randomly select n test cases from T ability • Specific situations where one technique is superior to another Open Questions Experiment • How do techniques differ in terms of • Hypothesis their ability to – Non-random techniques are more effective than random techniques but are much more expensive – reduce regression testing costs? – The composition of the original test suite – detect faults? greatly affects the cost and benefits of test • What tradeoffs exist b/w testsuite size selection techniques reduction and fault detection ability? – Safe techniques are more effective and more • When is one technique more cost- expensive than minimization techniques effective than another? – Data-flow coverage based techniques are as effective as safe techniques, but can be more • How do factors such as program design, expensive location, and type of modifications, and – Data-flow coverage based techniques are more test suite design affect the efficiency effective than minimization techniques but are and effectiveness of test selection more expensive techniques? 4

Measure Modeling Cost • Did not have implementations of all • Costs and benefit of several test techniques selection algorithms – Had to simulate them • Developed two models • Experiment was run on several – Calculating the cost of using the machines (185,000 test cases) – technique w.r.t. the retest-all results not comparable technique • Simplifying assumptions – Calculate the fault detection – All test cases have uniform costs effectiveness of the resulting test – All sub-costs can be expressed in case equivalent units • Human effort, equipment cost Modeling Cost Modeling Fault-detection • Cost of regression test selection • Per-test basis – Given a program P and – Cost = A + E(T’) – Its modified version P’ – Where A is the cost of analysis – Identify those tests that are in T and reveal – And E(T’) is the cost of executing and a fault in P’, but that are not in T’ validating tests in T’ – Normalize above quantity by the number of – Note that E(T) is the cost of fault-revealing tests in T executing and validating all tests, i.e., • Problem the retest-all approach – Multiple tests may reveal a given fault – Relative cost of executing and – Penalizes selection techniques that discard these test cases (i.e., those that do not validating = |T’|/|T| reduce fault-detection effectiveness) 5

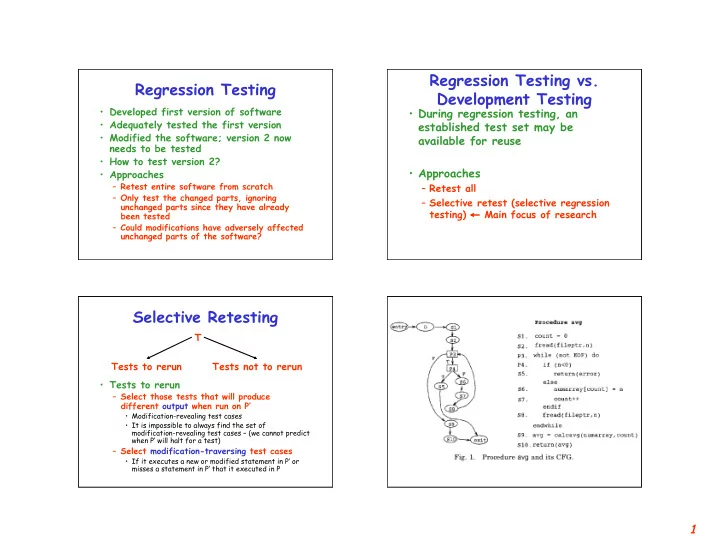

Modeling Fault-detection Experimental Design • Per-test-suite basis • 6 C programs – Three options • Test suites for the programs • The test suite is inadequate • Several modified versions – No test in T is fault revealing, and thus, no test in T’ is fault revealing • Same fault detection ability – Some test in both T and T’ is fault revealing • Test selection compromises fault-detection – Some test in T is fault revealing, but no test in T’ is fault revealing • 100 - (Percentage of cases in which T’ contains no fault-revealing tests) Test Suites and Versions Versions and Test Suites • Given a test pool for each program • Two sets of test suites for each program – Edge-coverage based – Black-box test cases • 1000 edge-coverage adequate test suites • Category-partition method • To obtain test suite T, for program P (from its test – Additional white-box test cases pool): for each edge in P’s CFG, choose (randomly) from those tests of pool that exercise the edge (no • Created by hand repeats) • Each (executable) statement, edge, and def- – Non-coverage based use pair in the base program was exercised • 1000 non-coverage-based test suites by at least 30 test cases • To obtain the k th non-coverage based test suite, for • Nature of modifications program P: determine n, the size of the k th coverage- based test suite, and then choose tests randomly – Most cases single modification from the test pool for P and add them to T, until T contains n test cases – Some cases, 2-5 modifications 6

Another look at the subjects Test Selection Tools • Minimization technique 1000 • For each program – Select a minimal set of tests that cover • 1000 edge-coverage based test suites: modified edges • 1000 non-coverage based test suites: • Safe technique – DejaVu • we discussed the details earlier in this lecture • Data-flow coverage based technique – Select tests that cover modified def-use pairs • Random technique – Random(n) randomly selects n% of the test cases • Retest-all Variables Measured Quantities • Each run • The subject program – Program P – 6 programs, each with a variety of – Version P’ modifications – Selection technique M • The test selection technique – Test suite T – Safe, data-flow, minimization, • Measured random(25), random(50), random(75), – The ratio of tests in the selected test retest-all suite T’ to the tests in the original • Test suite composition test suite – Edge-coverage adequate – Whether one or more tests in T’ reveals the fault in P’ – random 7

Dependent variables Number of runs • Average reduction in test suite size • For each subject program, from the test suite universe • Fault detection effectiveness – Selected 100 edge-coverage adequate • 100-Percentage of test suites in which T’ does not reveal a fault in P’ – And 100 random test suites • For each test suite – Applied each test selection method – Evaluated the fault detection capability of the resulting test suite Fault-detection Effectiveness How to read the graphs Entire structure represents a data distribution Upper quartile Box’s height spans Median the central 50% of the data Lower quartile 100-Percentage of test suites in which T’ does not reveal a fault in P’ 8

How to read the graphs Fault-detection Effectiveness 9

Conclusions • Minimization produces the smallest and the least effective test suites • Random selection of slightly larger test suites yielded equally good test suites as far as fault-detection is concerned • Safe and data-flow nearly equivalent average behavior and analysis costs – Data-flow may be useful for other aspects of regression testing • Safe methods found all faults (for which they has fault-revealing tests) while selecting (average) 74% of the test cases Conclusions • In certain cases, safe method could not reduce test suite size at all – On the average, slightly larger random test suites could be nearly as effective • Results were sensitive to – Selection methods used – Programs – Characteristics of the changes – Composition of the test suites 10

Recommend

More recommend