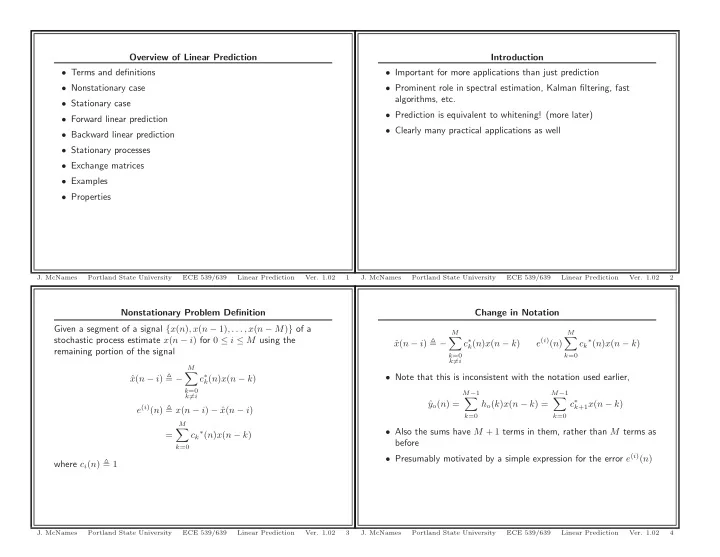

Overview of Linear Prediction Introduction • Terms and definitions • Important for more applications than just prediction • Nonstationary case • Prominent role in spectral estimation, Kalman filtering, fast algorithms, etc. • Stationary case • Prediction is equivalent to whitening! (more later) • Forward linear prediction • Clearly many practical applications as well • Backward linear prediction • Stationary processes • Exchange matrices • Examples • Properties J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 1 J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 2 Nonstationary Problem Definition Change in Notation Given a segment of a signal { x ( n ) , x ( n − 1) , . . . , x ( n − M ) } of a M M � � stochastic process estimate x ( n − i ) for 0 ≤ i ≤ M using the x ( n − i ) � − c ∗ e ( i ) ( n ) ∗ ( n ) x ( n − k ) ˆ k ( n ) x ( n − k ) c k remaining portion of the signal k =0 k =0 k � = i � M x ( n − i ) � − c ∗ • Note that this is inconsistent with the notation used earlier, ˆ k ( n ) x ( n − k ) k =0 M − 1 M − 1 � � k � = i c ∗ y o ( n ) = ˆ h o ( k ) x ( n − k ) = k +1 x ( n − k ) e ( i ) ( n ) � x ( n − i ) − ˆ x ( n − i ) k =0 k =0 � M • Also the sums have M + 1 terms in them, rather than M terms as ∗ ( n ) x ( n − k ) = c k before k =0 • Presumably motivated by a simple expression for the error e ( i ) ( n ) where c i ( n ) � 1 J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 3 J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 4

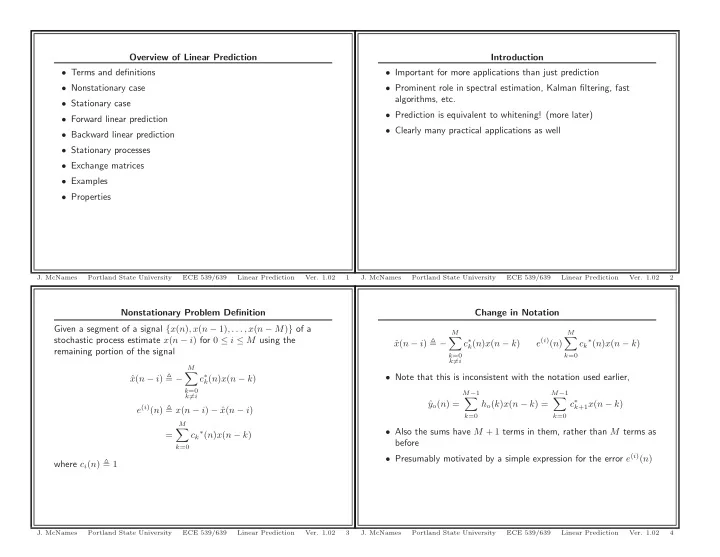

Types of “Prediction” Linear “Prediction” Notation and Partitions � x ( n ) x ( n − M + 1) � T x ( n ) � � M � M x ( n − 1) . . . c ∗ e ( i ) ( n ) � ∗ ( n ) x ( n − k ) x ( n − i ) � − ˆ k ( n ) x ( n − k ) c k � � T x ( n ) � ¯ x ( n ) x ( n − 1) . . . x ( n − M ) k =0 k =0 k � = i � � T x T ( n − 1) = x ( n ) • Forward Linear Prediction : i = 0 � � T x T ( n ) = x ( n − M ) • Backward Linear Prediction : i = M – Misnomer, but terminology is rooted in the literature R ( n ) = E[ x ( n ) x H ( n )] ¯ x H ( n )] R ( n ) = E[¯ x ( n )¯ • Symmetric Linear Smoother : i = M/ 2 Forward and backward linear prediction use specific partitions of the “extended” autocorrelation matrix � � � R ( n ) � r H P x ( n ) f ( n ) r b ( n ) ¯ ¯ R ( n ) � R ( n ) � r H r f ( n ) R ( n − 1) b ( n ) P x ( n − M ) r f ( n ) = E[ x ( n − 1) x ∗ ( n )] r b ( n ) = E[ x ( n ) x ∗ ( n − M )] J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 5 J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 6 Forward and Backward Linear Prediction Estimator and Error Forward and Backward Linear Prediction Solution Solution is the same as before, but watch the minus signs M � a ∗ = − a H ( n ) x ( n − 1) x f ( n ) = − ˆ k ( n ) x ( n − k ) R ( n − 1) a o ( n ) = − r f ( n ) k =1 R ( n ) b o ( n ) = − r b ( n ) M − 1 � b ∗ = − b H ( n ) x ( n ) P f , o ( n ) = P x ( n ) + r H x b ( n ) = − ˆ k ( n ) x ( n − k ) f ( n ) a o ( n ) k =0 P b , o ( n ) = P x ( n − M ) + r H b ( n ) b o ( n ) M � a ∗ = x ( n ) + a H ( n ) x ( n − 1) � M e f = x ( n ) + k ( n ) x ( n − k ) a ∗ x f ( n ) = − ˆ k ( n ) x ( n − k ) k =1 k =1 M − 1 � b ∗ = b H ( n ) x ( n ) + x ( n − M ) = − a H ( n ) x ( n − 1) e b = k ( n ) x ( n − k ) + x ( n − M ) k =0 M − 1 � b ∗ ˆ x b ( n ) = − k ( n ) x ( n − k ) • Again, new notation compared to the FIR linear estimation case k =0 • I use subscripts for the f instead of superscripts like the text = − b H ( n ) x ( n ) J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 7 J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 8

Stationary Case: Autocorrelation Matrix Stationary Case: Cross-correlation Vector When the process is stationary, something surprising happens! � r x (0) r x (1) r x ( M ) � � r x (1) � . . . r ∗ x (1) r x (0) r x ( M − 1) r x (2) . . . r x (0) r x (1) r x ( M − 1) r x ( M ) � . . . � � � � � ¯ M × 1 � ( M +1) × ( M +1) = R r � � � � r ∗ x (1) r x (0) . . . r x ( M − 2) r x ( M − 1) � . . . � � . � ... � � . . . . � � � � . . . . � � ¯ ( M +1) × ( M +1) = R r ∗ r ∗ � . . . . � x ( M ) x ( M − 1) . . . r x (0) r x ( M ) ... . . . . � � . . . . � � r ∗ r ∗ x ( M − 1) x ( M − 2) . . . r x (0) r x (1) � � � � � � r ∗ x ( M ) r ∗ x ( M − 1) r ∗ x (1) r x (0) . . . r H r T r x (0) r x (0) ¯ f R = = � T r ∗ r f R R M × 1 � � r x (1) r x (2) r x ( M ) r . . . � R � � R � r b Jr ¯ R = = � � � R ( n ) � r H r H J r x (0) r x (0) r H P x ( n ) f ( n ) r b ( n ) b ¯ R ( n ) = = r H r f ( n ) R ( n − 1) b ( n ) P x ( n − M ) Clearly, r f = E[ x ( n − 1) x ∗ ( n )] = r ∗ r b = E[ x ( n ) x ∗ ( n − M )] = Jr R ( n ) = R ( n − 1) P x (0) = P x ( n − M ) = r x (0) where J is the exchange matrix J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 9 J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 10 Exchange Matrix Forward/Backward Prediction Relationship ⎡ ⎤ Rb o = − r b = − Jr 0 0 . . . 1 ⎢ ⎥ . . . Ra o = − r f = − r ∗ ... . . . ⎢ ⎥ J H J = JJ H = I . . . J � ⎢ ⎥ JRa o = − Jr ∗ ⎣ ⎦ 0 1 . . . 0 JR ∗ a ∗ 1 0 . . . 0 o = − Jr = − r b JR ∗ = RJ • Counterpart to the identity matrix R ( Ja ∗ o ) = − r b • When multiplied on the left, flips a vector upside down b o = Ja ∗ o • When multiplied on the right, flips a vector sideways • The BLP parameter vector is the flipped and conjugated FLP • Don’t do this in MATLAB—many wasted multiplications by zeros parameter vector! • See fliplr and flipud • Useful for estimation: can solve for both and combine them to reduce variance • Further the prediction errors are the same! P f , o = P b , o = r (0) + r H a o = r (0) + r H Jb o J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 11 J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 12

Example 1: MA Process Example 1: MMSE Versus Prediction Index i Create a synthetic MA process in MATLAB. Plot the pole-zero and transfer function of the system. Plot the MMSE versus the point 1 Minimum NMSE Estimated being estimated, MNMSE � M 0.8 c ∗ x ( n − i ) � − ˆ k ( n ) x ( n − k ) k =0 k � = i MNMSE 0.6 for M = 25 . 0.4 0.2 0 0 5 10 15 20 25 Prediction Index (i, Samples) J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 13 J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 14 Example 1: Prediction Example Example 1: Prediction Example � � M:25 i:0 NMSE:0.388 M:25 i:13 NMSE:0.168 5 5 x ( n ) x ( n ) x ( n + 0) ˆ x ( n + 13) ˆ Signal + Estimate (scaled) Signal + Estimate (scaled) 0 0 −5 −5 0 10 20 30 40 50 60 70 80 90 100 0 10 20 30 40 50 60 70 80 90 100 Sample Time (n) Sample Time (n) J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 15 J. McNames Portland State University ECE 539/639 Linear Prediction Ver. 1.02 16

Recommend

More recommend