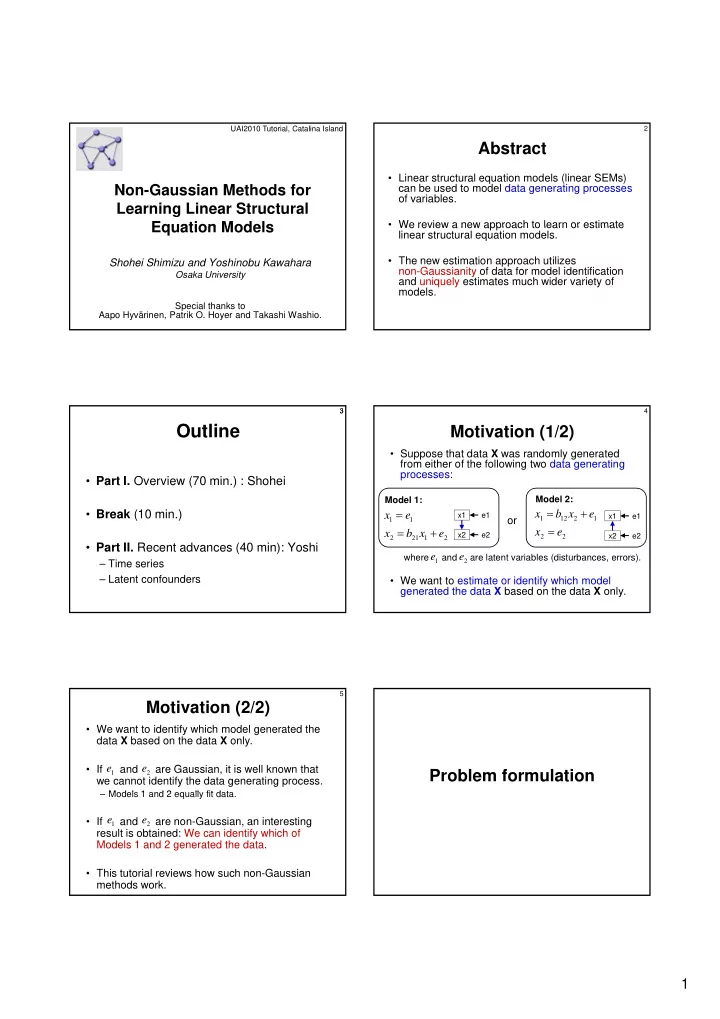

UAI2010 Tutorial, Catalina Island

Non-Gaussian Methods for Learning Linear Structural Equation Models
Shohei Shimizu and Yoshinobu Kawahara, Osaka University
Special thanks to Aapo Hyvärinen, Patrik O. Hoyer and Takashi Washio.

Abstract
• Linear structural equation models (linear SEMs) can be used to model data generating processes of variables.
• We review a new approach to learn or estimate linear structural equation models.
• The new estimation approach utilizes non-Gaussianity of data for model identification and uniquely estimates a much wider variety of models.

Outline
• Part I. Overview (70 min.): Shohei
• Break (10 min.)
• Part II. Recent advances (40 min.): Yoshi
  – Time series
  – Latent confounders

Motivation (1/2)
• Suppose that data X was randomly generated from either of the following two data generating processes:
$$\text{Model 1: } \begin{cases} x_1 = e_1 \\ x_2 = b_{21} x_1 + e_2 \end{cases} \qquad \text{or} \qquad \text{Model 2: } \begin{cases} x_1 = b_{12} x_2 + e_1 \\ x_2 = e_2 \end{cases}$$
  where $e_1$ and $e_2$ are latent variables (disturbances, errors).
• We want to estimate or identify which model generated the data X based on the data X only.

Motivation (2/2)
• We want to identify which model generated the data X based on the data X only.
• If $e_1$ and $e_2$ are Gaussian, it is well known that we cannot identify the data generating process.
  – Models 1 and 2 fit the data equally well.
• If $e_1$ and $e_2$ are non-Gaussian, an interesting result is obtained: we can identify which of Models 1 and 2 generated the data.
• This tutorial reviews how such non-Gaussian methods work.

Problem formulation
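The claim in Motivation (2/2) can be illustrated numerically. The sketch below assumes Model 1 with $b_{21} = 0.8$ and uniform (hence non-Gaussian) external influences of unit variance; these values and the helper names `simulate_model1` and `residual_dependence` are illustrative choices, not part of the tutorial. It regresses each variable on the other and measures how strongly the squared residual correlates with the squared regressor. This is a crude dependence check chosen for brevity, not the estimation method reviewed in this tutorial.

```python
import numpy as np


def simulate_model1(n, b21=0.8, seed=0):
    """Hypothetical helper: Model 1, x1 = e1 and x2 = b21*x1 + e2.

    e1 and e2 are independent uniform variables with unit variance
    (an assumed non-Gaussian choice for illustration).
    """
    rng = np.random.default_rng(seed)
    e1 = rng.uniform(-np.sqrt(3), np.sqrt(3), n)
    e2 = rng.uniform(-np.sqrt(3), np.sqrt(3), n)
    x1 = e1
    x2 = b21 * x1 + e2
    return x1, x2


def residual_dependence(y, x):
    """Regress y on x by least squares and return |corr(residual**2, x**2)|.

    The least-squares residual is always uncorrelated with x, so ordinary
    correlation cannot separate the two directions; correlating the squares
    picks up higher-order dependence that remains in the wrong direction.
    """
    x = x - x.mean()
    y = y - y.mean()
    b = np.dot(x, y) / np.dot(x, x)
    r = y - b * x
    return abs(np.corrcoef(r**2, x**2)[0, 1])


x1, x2 = simulate_model1(20000)
print("x1 -> x2 (true direction): ", residual_dependence(x2, x1))  # close to 0
print("x2 -> x1 (wrong direction):", residual_dependence(x1, x2))  # roughly 0.4 for this setup
```

With Gaussian $e_1$ and $e_2$ both numbers would stay near zero, because uncorrelated jointly Gaussian variables are independent; this matches the statement that Models 1 and 2 then fit the data equally well.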

Basic problem setup (1/3)
• Assume that the data generating process of continuous observed variables $x_i$ is graphically represented by a directed acyclic graph (DAG).
  – Acyclicity means that there are no directed cycles.
[Figure: left, an example of a directed cyclic graph; right, an example of a directed acyclic graph (DAG). Both are over $x_1, x_2, x_3$ with external influences $e_1, e_2, e_3$; e.g., $x_3$ is a parent of $x_1$.]

Basic problem setup (2/3)
• Further assume linear relations of variables $x_i$.
• Then we obtain a linear acyclic SEM (Wright, 1921; Bollen, 1989):
$$x_i = \sum_{j:\ \text{parents of } i} b_{ij} x_j + e_i \qquad \text{or} \qquad \mathbf{x} = \mathbf{B}\mathbf{x} + \mathbf{e},$$
  where
  – The $e_i$ are continuous latent variables that are not determined inside the model, which we call external influences (disturbances, errors).
  – The $e_i$ have non-zero variance and are independent.
  – The 'path-coefficient' matrix $\mathbf{B} = [b_{ij}]$ corresponds to a DAG.

Example of linear acyclic SEMs
• A three-variable linear acyclic SEM:
$$\begin{cases} x_1 = 1.5\,x_3 + e_1 \\ x_2 = -1.3\,x_1 + e_2 \\ x_3 = e_3 \end{cases} \qquad \text{or} \qquad \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \underbrace{\begin{bmatrix} 0 & 0 & 1.5 \\ -1.3 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}}_{\mathbf{B}} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} + \begin{bmatrix} e_1 \\ e_2 \\ e_3 \end{bmatrix}$$
• $\mathbf{B}$ corresponds to the data-generating DAG:
  – $b_{ij} = 0$: no directed edge from $x_j$ to $x_i$.
  – $b_{ij} \neq 0$: a directed edge from $x_j$ to $x_i$.
[Figure: the data-generating DAG $x_3 \xrightarrow{1.5} x_1 \xrightarrow{-1.3} x_2$, with each $e_i$ pointing into $x_i$.]

Assumption of acyclicity
• Acyclicity ensures the existence of an ordering of variables $x_i$ that makes $\mathbf{B}$ lower-triangular with zeros on the diagonal:
$$\begin{bmatrix} x_3 \\ x_1 \\ x_2 \end{bmatrix} = \underbrace{\begin{bmatrix} 0 & 0 & 0 \\ 1.5 & 0 & 0 \\ 0 & -1.3 & 0 \end{bmatrix}}_{\mathbf{B}_{\text{perm}}} \begin{bmatrix} x_3 \\ x_1 \\ x_2 \end{bmatrix} + \begin{bmatrix} e_3 \\ e_1 \\ e_2 \end{bmatrix}$$
• The ordering is $x_3 < x_1 < x_2$: $x_3$ may be an ancestor of $x_1$ and $x_2$, but not vice versa.

Assumption of independence between external influences
• It implies that there are no latent confounders (Spirtes et al., 2000).
  – A latent confounder is a latent variable that is a parent of two or more observed variables.
[Figure: a latent confounder $f$ with edges $f \to x_1$ and $f \to x_2$; $x_1$ and $x_2$ also receive their own external influences $e_1'$ and $e_2'$.]
• Such a latent confounder makes external influences dependent (Part II).
[Figure: the same model written over the observed variables only, $e_1 \to x_1$ and $e_2 \to x_2$, where $e_1$ and $e_2$ are now dependent.]

Basic problem setup (3/3): Learning linear acyclic SEMs
• Assume that data X is randomly sampled from a linear acyclic SEM (with no latent confounders):
$$\mathbf{x} = \mathbf{B}\mathbf{x} + \mathbf{e}$$
[Figure: $x_1 \xrightarrow{b_{21}} x_2$, with $e_1 \to x_1$ and $e_2 \to x_2$.]
• Goal: Estimate the path-coefficient matrix $\mathbf{B}$ by observing data X only!
  – $\mathbf{B}$ corresponds to the data-generating DAG.
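The three-variable example and the acyclicity assumption can be checked directly. The sketch below (assuming NumPy, with uniform external influences as an arbitrary non-Gaussian choice) samples from $\mathbf{x} = \mathbf{B}\mathbf{x} + \mathbf{e}$ by solving $\mathbf{x} = (\mathbf{I} - \mathbf{B})^{-1}\mathbf{e}$, then finds an ordering in which every variable comes after its parents and permutes $\mathbf{B}$ into $\mathbf{B}_{\text{perm}}$. The function names `sample_sem` and `causal_order` are hypothetical helpers, not taken from the tutorial.

```python
import numpy as np

# Three-variable example: x1 = 1.5*x3 + e1, x2 = -1.3*x1 + e2, x3 = e3.
# B[i, j] = b_ij is the direct effect of x_j on x_i (0 means no edge x_j -> x_i).
B = np.array([[0.0, 0.0, 1.5],
              [-1.3, 0.0, 0.0],
              [0.0, 0.0, 0.0]])


def sample_sem(B, n, seed=0):
    """Draw n samples (as columns) of x = Bx + e via x = (I - B)^{-1} e.

    External influences are independent with non-zero variance; uniform
    noise is an illustrative non-Gaussian choice, not part of the model.
    """
    rng = np.random.default_rng(seed)
    p = B.shape[0]
    e = rng.uniform(-1.0, 1.0, size=(p, n))
    x = np.linalg.solve(np.eye(p) - B, e)
    return x, e


def causal_order(B):
    """Return variable indices ordered so that every variable follows its parents.

    Repeatedly picks a variable with no parents among the variables not yet
    ordered; raises ValueError if B encodes a directed cycle.
    """
    remaining = list(range(B.shape[0]))
    order = []
    while remaining:
        for i in remaining:
            if np.all(B[i, remaining] == 0):
                order.append(i)
                remaining.remove(i)
                break
        else:
            raise ValueError("B contains a directed cycle")
    return order


x, e = sample_sem(B, n=1000)
print(np.allclose(x, B @ x + e))          # True: the samples satisfy x = Bx + e
order = causal_order(B)
print(order)                              # [2, 0, 1], i.e. x3, x1, x2
B_perm = B[np.ix_(order, order)]
print(np.allclose(np.triu(B_perm), 0.0))  # True: strictly lower-triangular
```

Because $\mathbf{B}$ is acyclic, $\mathbf{I} - \mathbf{B}$ can be permuted into a unit lower-triangular matrix and is therefore always invertible, so the solve step cannot fail for a valid model.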

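The effect of a latent confounder described under "Assumption of independence between external influences" can be seen in a small simulation. As an illustrative assumption, $f$, $e_1'$ and $e_2'$ are independent uniform variables with unit variance and the confounder enters both equations with coefficient 1, so $x_1 = f + e_1'$ and $x_2 = f + e_2'$. When $f$ is not observed, a linear SEM over $x_1$ and $x_2$ alone has to absorb $f$ into $e_1$ and $e_2$, and these are then correlated (population correlation 0.5 under these assumptions).

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10000
s = np.sqrt(3)  # uniform(-sqrt(3), sqrt(3)) has unit variance

f = rng.uniform(-s, s, n)         # latent confounder (assumed uniform)
e1_prime = rng.uniform(-s, s, n)  # own external influence of x1
e2_prime = rng.uniform(-s, s, n)  # own external influence of x2

# f is a parent of both observed variables (no direct edge between them here).
x1 = f + e1_prime
x2 = f + e2_prime

# Written as x = Bx + e over the observed variables only (B = 0),
# the external influences are e1 = f + e1' and e2 = f + e2'.
e1 = x1
e2 = x2
print(np.corrcoef(e1, e2)[0, 1])  # about 0.5: e1 and e2 are not independent
```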
Problems: Identifiability problems of conventional methods

Under what conditions is B identifiable?
• '$\mathbf{B}$ is identifiable' means '$\mathbf{B}$ is uniquely determined or estimated from $p(\mathbf{x})$'.
• Linear acyclic SEM: $\mathbf{x} = \mathbf{B}\mathbf{x} + \mathbf{e}$ (e.g., $x_1 \xrightarrow{b_{21}} x_2$, with $e_1 \to x_1$ and $e_2 \to x_2$)
  – $\mathbf{B}$ and $p(\mathbf{e})$ induce $p(\mathbf{x})$.
  – If $p(\mathbf{x})$ is different for different $\mathbf{B}$, then $\mathbf{B}$ is uniquely determined.

Conventional estimation principle: Causal Markov condition
• If the data-generating model is a linear acyclic SEM, the causal Markov condition holds:
  – Each observed variable $x_i$ is independent of its non-descendants in the DAG conditional on its parents (Pearl & Verma, 1991):
$$p(\mathbf{x}) = \prod_{i=1}^{p} p(x_i \mid \text{parents of } x_i)$$

Conventional methods based on causal Markov condition
• Methods based on conditional independencies (Spirtes & Glymour, 1991)
  – Many linear acyclic SEMs give the same set of conditional independences and fit the data equally well.
• Scoring methods based on Gaussianity (Chickering, 2002)
  – Many linear acyclic SEMs give the same Gaussian distribution and fit the data equally well.
• In many cases, the path-coefficient matrix $\mathbf{B}$ is not uniquely determined.

Example
• Two models with Gaussian $e_1$ and $e_2$:
$$\text{Model 1: } \begin{cases} x_1 = e_1 \\ x_2 = 0.8\,x_1 + e_2 \end{cases} \qquad \text{Model 2: } \begin{cases} x_1 = 0.8\,x_2 + e_1 \\ x_2 = e_2 \end{cases}$$
$$E(e_1) = E(e_2) = 0, \qquad \operatorname{var}(x_1) = \operatorname{var}(x_2) = 1$$
• Both introduce no conditional independence: $\operatorname{cov}(x_1, x_2) = 0.8 \neq 0$.
• Both induce the same Gaussian distribution:
$$\begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \sim N\!\left( \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \begin{bmatrix} 1 & 0.8 \\ 0.8 & 1 \end{bmatrix} \right)$$

A solution: Non-Gaussian approach
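The Example above can be verified with the covariance implied by a linear acyclic SEM with independent external influences, $\operatorname{cov}(\mathbf{x}) = (\mathbf{I} - \mathbf{B})^{-1} \operatorname{diag}(\operatorname{var}(e_i)) (\mathbf{I} - \mathbf{B})^{-\top}$. The error variances are not stated on the slide; the sketch derives them from $\operatorname{var}(x_1) = \operatorname{var}(x_2) = 1$, which forces the error of the dependent variable to have variance $1 - 0.8^2 = 0.36$. The helper name `implied_covariance` is hypothetical. Because both models have zero mean and Gaussian errors, equal covariance matrices mean equal distributions.

```python
import numpy as np


def implied_covariance(B, error_var):
    """Covariance of x for x = Bx + e with independent e: A D A^T, A = (I - B)^{-1}."""
    p = B.shape[0]
    A = np.linalg.inv(np.eye(p) - B)
    return A @ np.diag(error_var) @ A.T


# Model 1: x1 = e1, x2 = 0.8*x1 + e2; var(e2) = 1 - 0.8**2 keeps var(x2) = 1.
B1 = np.array([[0.0, 0.0], [0.8, 0.0]])
cov1 = implied_covariance(B1, [1.0, 1.0 - 0.8**2])

# Model 2: x1 = 0.8*x2 + e1, x2 = e2; var(e1) = 1 - 0.8**2 keeps var(x1) = 1.
B2 = np.array([[0.0, 0.8], [0.0, 0.0]])
cov2 = implied_covariance(B2, [1.0 - 0.8**2, 1.0])

print(np.allclose(cov1, cov2))  # True: both equal [[1, 0.8], [0.8, 1]]
```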

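The same point can be seen through the causal Markov factorization: Model 1 factorizes $p(\mathbf{x})$ as $p(x_1)\,p(x_2 \mid x_1)$ and Model 2 as $p(x_2)\,p(x_1 \mid x_2)$. For the bivariate Gaussian in the Example, each conditional is Gaussian with mean $0.8$ times the conditioning variable and variance $1 - 0.8^2$, so the two factorizations assign exactly the same log-likelihood to any data set. The sketch assumes NumPy; `log_normal` is a hypothetical helper, and the 500 samples are simulated, not data from the tutorial.

```python
import numpy as np


def log_normal(x, mean, var):
    """Log-density of a univariate Gaussian N(mean, var) evaluated at x."""
    return -0.5 * (np.log(2 * np.pi * var) + (x - mean) ** 2 / var)


rho = 0.8  # cov(x1, x2) with unit variances, as in the Example
rng = np.random.default_rng(0)
cov = np.array([[1.0, rho], [rho, 1.0]])
x = rng.multivariate_normal([0.0, 0.0], cov, size=500)
x1, x2 = x[:, 0], x[:, 1]

# Model 1 factorization: p(x1) p(x2 | x1), with x2 | x1 ~ N(rho*x1, 1 - rho**2).
ll_model1 = np.sum(log_normal(x1, 0.0, 1.0) + log_normal(x2, rho * x1, 1 - rho**2))
# Model 2 factorization: p(x2) p(x1 | x2), with x1 | x2 ~ N(rho*x2, 1 - rho**2).
ll_model2 = np.sum(log_normal(x2, 0.0, 1.0) + log_normal(x1, rho * x2, 1 - rho**2))

print(np.isclose(ll_model1, ll_model2))  # True: both models fit equally well
```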