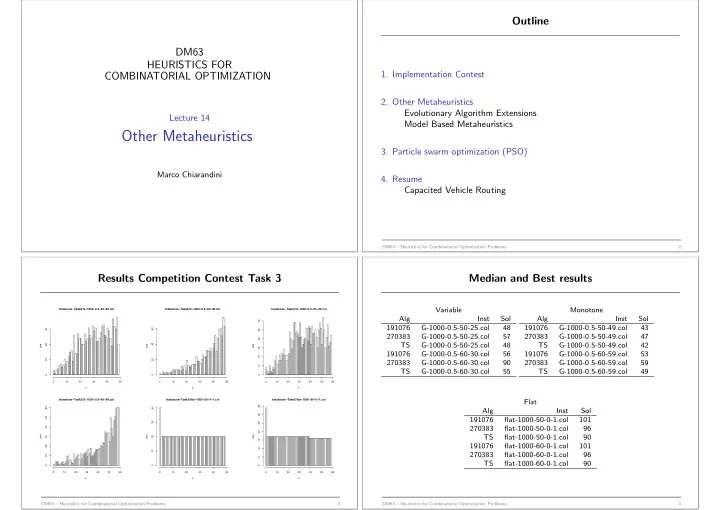

DM63 HEURISTICS FOR COMBINATORIAL OPTIMIZATION
Lecture 14
Other Metaheuristics
Marco Chiarandini

Outline
1. Implementation Contest
2. Other Metaheuristics
   Evolutionary Algorithm Extensions
   Model Based Metaheuristics
3. Particle swarm optimization (PSO)
4. Resume
   Capacitated Vehicle Routing

Results Competition Contest: Task 3, Median and Best results

[Figure: one panel per instance (Instances-Task3/...), plotting size against k; panel groups labelled "Variable Monotone" (G-1000-... instances) and "Flat" (flat-1000-... instances).]

Solution values (Sol) per algorithm (Alg) and instance (Inst), as listed in the panel legends:

| Inst | 191076 | 270383 | TS |
|---|---|---|---|
| G-1000-0.5-50-25.col | 48 | 57 | 48 |
| G-1000-0.5-50-49.col | 43 | 47 | 42 |
| G-1000-0.5-60-30.col | 56 | 90 | 55 |
| G-1000-0.5-60-59.col | 53 | 59 | 49 |
| flat-1000-50-0-1.col | 101 | 96 | 90 |
| flat-1000-60-0-1.col | 101 | 96 | 90 |

Scatter Search and Path Relinking

Key idea: maintain a small population of reference solutions and combine them to create new solutions.

Scatter Search and Path Relinking differ from EC by providing unified principles for recombining solutions based on generalized path constructions in Euclidean or neighborhood spaces.

Scatter Search and Path Relinking:
  generate sp with a diversification generation method
  perform subsidiary perturbative search on sp
  update reference set rs from sp
  while termination criterion is not satisfied do
  | generate subset sb from rs
  | apply solution combination to sb to obtain sc
  | perform subsidiary perturbative search on sc
  ⌊ update reference set rs from rs ∪ sc

Note:
◮ A large number of solutions is generated by the diversification generation method, while only about 1/10 of them are chosen for the reference set.
◮ In more complex implementations the size of the subset of solutions sb may be larger than two.

Scatter Search
Solutions are encoded as points of a Euclidean space, and new solutions are created by building linear combinations of reference solutions using both positive and negative coefficients.

Path Relinking
Combinations are reinterpreted as paths between solutions in a neighborhood space. Starting from an initiating solution, moves are performed that introduce components of a guiding solution.
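A minimal path relinking sketch for permutation-encoded solutions, assuming swap moves as the neighborhood and a caller-supplied cost function to minimize (both are assumptions; the slides do not fix a representation). Each move places one more component of the guiding solution at its position, and the best solution seen along the path is returned. The names `path_relink` and `displacement` are hypothetical.

```python
from typing import Callable, List, Tuple

Perm = List[int]


def path_relink(initiating: Perm, guiding: Perm,
                cost: Callable[[Perm], float]) -> Tuple[Perm, float]:
    """Walk from `initiating` toward `guiding` with swap moves and return
    the best solution on the path (endpoints included) with its cost."""
    current = list(initiating)            # never modify the caller's list
    best, best_cost = list(current), cost(current)
    pos = {v: i for i, v in enumerate(current)}  # value -> index in current
    for i, target in enumerate(guiding):
        if current[i] == target:
            continue                      # component already taken from guiding
        j = pos[target]                   # j > i: positions < i already agree
        # Swap brings the guiding component `target` into position i.
        pos[current[i]], pos[target] = j, i
        current[i], current[j] = current[j], current[i]
        c = cost(current)
        if c < best_cost:
            best, best_cost = list(current), c
    return best, best_cost


def displacement(p: Perm) -> int:
    """Toy cost (hypothetical): total distance of each value from its index."""
    return sum(abs(v - i) for i, v in enumerate(p))


print(path_relink([3, 1, 2, 0], [0, 1, 2, 3], displacement))  # ([0, 1, 2, 3], 0)
```

In a full scatter search / path relinking loop, `initiating` and `guiding` would be two members of the subset sb, and the returned solution plays the role of sc before the subsidiary perturbative search.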

Model Based Metaheuristics

Key idea: solutions are generated using a parameterized probabilistic model that is updated using previously seen solutions.
1. Candidate solutions are constructed using some parameterized probabilistic model, that is, a parameterized probability distribution over the solution space.
2. The candidate solutions are used to modify the model in a way that is deemed to bias future sampling toward low cost solutions.

Stochastic Gradient Method
◮ $\{P(s;\theta) \mid \theta \in \Theta\}$ family of probability functions defined on $s \in S$
◮ $\Theta \subset \mathbb{R}^m$ m-dimensional parameter space
◮ $P$ continuous and differentiable
Then the original problem may be replaced with the following continuous one:
$$\arg\min_{\theta \in \Theta} \mathbb{E}_{\theta}[f(s)]$$
Gradient Method:
◮ start from some initial guess $\theta_0$
◮ at stage $t$, calculate the gradient $\nabla \mathbb{E}_{\theta_t}[f(s)]$ and update $\theta_{t+1} = \theta_t - \alpha_t \nabla \mathbb{E}_{\theta_t}[f(s)]$, where $\alpha_t$ is a step-size parameter (a descent step, since the expectation is minimized)

Cross Entropy Method
Key idea: use rare-event simulation and importance sampling to proceed toward good solutions.
◮ Generate random solution samples according to a specified mechanism.
◮ Update the parameters of the random mechanism to produce better samples in the next iteration.

◮ $\{p(s;\theta) \mid \theta \in \Theta\}$ family of probability density functions on $s \in S$
◮ $\mathbb{E}_{\theta}[f(s)] = \sum_{s \in S} f(s)\, p(s;\theta)$
If we are interested in the probability that $f(s)$ is smaller than some threshold $\gamma$ under the probability $p(\cdot;\theta^*)$, then:
$$\Pr(f(s) \le \gamma;\theta^*) = \mathbb{E}_{\theta^*}\left[I_{\{f(s) \le \gamma\}}\right]$$
If this probability is very small, then we call $\{f(s) \le \gamma\}$ a rare event.

Monte-Carlo simulation:
◮ draw a random sample $S_1, \dots, S_N$ from $p(\cdot;\theta^*)$
◮ estimate $\frac{1}{N}\sum_{i=1}^{N} I_{\{f(S_i) \le \gamma\}}$
For a rare event almost all indicators are zero, so this estimate needs a very large $N$.

Importance sampling:
◮ use a different probability function $h$ on $S$ to sample the solutions $S_1, \dots, S_N$
◮ estimate $\frac{1}{N}\sum_{i=1}^{N} I_{\{f(S_i) \le \gamma\}} \frac{p(S_i;\theta^*)}{h(S_i)}$
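A small sketch contrasting the two estimators, under an assumed toy model that is not from the slides: a solution is a bit vector of length $n = 20$, the cost is $f(s) =$ number of ones, $p(\cdot;\theta^*)$ draws each bit as Bernoulli(0.5), and the threshold is $\gamma = 2$. The sampling function $h$ is chosen (also an assumption) as Bernoulli(0.1) bits, which puts much more mass near the rare event. Only about ten of the 50 000 crude samples are expected to hit the event, so the crude estimate is noisy, while the weighted estimate is usually within a few percent of the exact binomial tail.

```python
import math
import random

# Toy setting (assumption): bit vectors, cost = number of ones,
# nominal model p(.; theta_star) = independent Bernoulli(theta_star) bits.


def f(s):
    return sum(s)


def log_p(s, q):
    """Log-probability of bit vector s under independent Bernoulli(q) bits."""
    k = sum(s)
    return k * math.log(q) + (len(s) - k) * math.log(1.0 - q)


def sample(n, q, rng):
    return [1 if rng.random() < q else 0 for _ in range(n)]


def crude_monte_carlo(n, theta_star, gamma, N, rng):
    """(1/N) * sum I{f(S_i) <= gamma}, with S_i drawn from p(.; theta_star)."""
    return sum(f(sample(n, theta_star, rng)) <= gamma for _ in range(N)) / N


def importance_sampling(n, theta_star, q_h, gamma, N, rng):
    """(1/N) * sum I{f(S_i) <= gamma} * p(S_i; theta_star) / h(S_i),
    with S_i drawn from h = independent Bernoulli(q_h) bits."""
    total = 0.0
    for _ in range(N):
        s = sample(n, q_h, rng)
        if f(s) <= gamma:
            total += math.exp(log_p(s, theta_star) - log_p(s, q_h))
    return total / N


def exact_probability(n, theta_star, gamma):
    """Pr(f(S) <= gamma) under p(.; theta_star): a binomial tail."""
    return sum(math.comb(n, k) * theta_star**k * (1 - theta_star)**(n - k)
               for k in range(gamma + 1))


n, theta_star, gamma, N = 20, 0.5, 2, 50_000
ell = exact_probability(n, theta_star, gamma)    # 211 / 2**20, about 2.0e-4
mc = crude_monte_carlo(n, theta_star, gamma, N, random.Random(0))
isw = importance_sampling(n, theta_star, 0.1, gamma, N, random.Random(1))
print(f"exact={ell:.3e}  crude MC={mc:.3e}  importance sampling={isw:.3e}")
```

The likelihood ratio is computed in log space to avoid underflow of $p(s;\theta^*) = 0.5^{20}$ for larger $n$.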

How is h determined?
◮ The best h, $h^*$, is unknown. Hence h is chosen from the family $p(\cdot;\theta)$.
◮ Choose the parameter $\theta$ such that the difference of $h = p(\cdot;\theta)$ to $h^*$ is minimal.
◮ This is done using a convenient measure of the distance between two probability distribution functions, the cross entropy:
$$D(h^*, p) = \mathbb{E}_{h^*}\left[\ln \frac{h^*(s)}{p(s)}\right]$$
◮ Minimizing the distance by means of sampling estimation leads to:
$$\hat\theta = \arg\max_{\theta} \frac{1}{N}\sum_{i=1}^{N} I_{\{f(S_i) \le \gamma\}} \frac{p(S_i;\theta^*)}{p(S_i;\theta')} \ln p(S_i;\theta)$$
where $S_1, \dots, S_N$ is a random sample from $p(\cdot;\theta')$.

Cross Entropy Method (CEM):
Define $\hat\theta_0$. Set $t = 1$.
While termination criterion is not satisfied:
| generate a sample $(s_1, s_2, \dots, s_N)$ from the pdf $p(\cdot;\hat\theta_{t-1})$
| set $\hat\gamma_t$ equal to the $\rho$-quantile with respect to $f$: $\hat\gamma_t = f(s_{(\lceil \rho N \rceil)})$, where $s_{(1)}, \dots, s_{(N)}$ is the sample sorted by increasing $f$
| use the same sample $(s_1, s_2, \dots, s_N)$ to solve the stochastic program
|   $\hat\theta_t = \arg\max_{\theta} \frac{1}{N}\sum_{i=1}^{N} I_{\{f(s_i) \le \hat\gamma_t\}} \ln p(s_i;\theta)$
⌊ set $t = t + 1$

CEM is a two-phase iterative approach that constructs a sequence of levels $\hat\gamma_1, \hat\gamma_2, \dots, \hat\gamma_t$ and parameters $\hat\theta_1, \hat\theta_2, \dots, \hat\theta_t$ such that $\hat\gamma_t$ is close to optimal and $\hat\theta_t$ assigns maximal probability to sampling high quality solutions.

Example: TSP
◮ Solution representation: permutation representation
◮ Probabilistic model: matrix $P$ where $p_{ij}$ represents the probability of vertex $j$ after vertex $i$
◮ Tour construction: specific for tours
  Define $P^{(1)} = P$ and $X_1 = 1$. Let $k = 1$.
  While $k < n - 1$:
  | obtain $P^{(k+1)}$ from $P^{(k)}$ by setting the $X_k$-th column of $P^{(k)}$ to zero and normalizing the rows to sum up to 1
  | generate $X_{k+1}$ from the distribution formed by the $X_k$-th row of $P^{(k+1)}$
  ⌊ set $k = k + 1$
◮ Update: $p_{ij}$ is the fraction of times the transition $i \to j$ occurred in those cycles that have $f(s) \le \hat\gamma_t$
◮ Termination criterion: stop if for some $t \ge d$, e.g., $d = 5$, $\hat\gamma_t = \hat\gamma_{t-1} = \dots = \hat\gamma_{t-d}$
◮ Smoothed updating: $\hat\theta_t = \alpha \tilde\theta_t + (1 - \alpha)\hat\theta_{t-1}$ with $0.4 \le \alpha \le 0.9$, where $\tilde\theta_t$ is the solution of the stochastic program at iteration $t$
◮ Parameters: $N = cn$, where $n$ is the size of the problem (number of choices available for each solution component to decide) and $c > 1$ ($5 \le c \le 10$); $\rho \approx 0.01$ for $n \ge 100$ and $\rho \approx \ln(n)/n$ for $n < 100$
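A compact sketch of the CEM loop applied to the TSP, following the recipe above: tours start at vertex 0 (the 0-based counterpart of $X_1 = 1$), the model is a transition matrix, the elite set is given by the $\rho$-quantile, and the update uses transition frequencies with smoothing and the $\hat\gamma$-stability stop rule. The function names, the uniform initial matrix, the default $\alpha = 0.7$, the `max_iter` safeguard, and the five-city instance are assumptions of this sketch, not part of the slides. Instead of rebuilding $P^{(k+1)}$ explicitly, it zeroes the visited columns of row $X_k$ on the fly, which yields the same distribution.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Matrix = List[List[float]]


def tour_length(tour: Sequence[int], dist: Matrix) -> float:
    """Length of the closed tour, including the edge back to the start."""
    n = len(tour)
    return sum(dist[tour[k]][tour[(k + 1) % n]] for k in range(n))


def construct_tour(P: Matrix, rng: random.Random) -> List[int]:
    """Sample a tour from transition matrix P, starting at vertex 0.

    Row x of P^(k+1) equals row x of P with the columns of the visited
    vertices set to zero, rescaled; random.choices accepts unnormalized
    weights, so the rescaling is left implicit.
    """
    n = len(P)
    tour, visited = [0], {0}
    while len(tour) < n:
        x = tour[-1]
        cand = [j for j in range(n) if j not in visited]
        weights = [P[x][j] for j in cand]
        if sum(weights) <= 0.0:           # degenerate row: fall back to uniform
            weights = [1.0] * len(cand)
        nxt = rng.choices(cand, weights=weights)[0]
        tour.append(nxt)
        visited.add(nxt)
    return tour


def cem_tsp(dist: Matrix, c: int = 10, rho: Optional[float] = None,
            alpha: float = 0.7, d: int = 5, max_iter: int = 200,
            seed: int = 0) -> Tuple[List[int], float]:
    n = len(dist)
    rng = random.Random(seed)
    N = c * n                                   # sample size N = cn
    if rho is None:
        rho = 0.01 if n >= 100 else math.log(n) / n
    n_elite = max(1, math.ceil(rho * N))
    # Initial model (assumption): every other vertex equally likely next.
    P = [[0.0 if i == j else 1.0 / (n - 1) for j in range(n)] for i in range(n)]
    best_tour: List[int] = []
    best_len = math.inf
    gammas: List[float] = []
    for t in range(1, max_iter + 1):
        samples = [construct_tour(P, rng) for _ in range(N)]
        scored = sorted(((tour_length(s, dist), s) for s in samples),
                        key=lambda pair: pair[0])
        if scored[0][0] < best_len:
            best_len, best_tour = scored[0]
        gamma = scored[n_elite - 1][0]          # rho-quantile of f
        gammas.append(gamma)
        elite = [s for length, s in scored if length <= gamma]
        # Fraction of elite cycles that use each transition i -> j.
        freq = [[0.0] * n for _ in range(n)]
        for s in elite:
            for k in range(n):
                freq[s[k]][s[(k + 1) % n]] += 1.0 / len(elite)
        # Smoothed update: alpha * new estimate + (1 - alpha) * previous model.
        P = [[alpha * freq[i][j] + (1 - alpha) * P[i][j] for j in range(n)]
             for i in range(n)]
        # Stop once gamma_t = gamma_{t-1} = ... = gamma_{t-d} (up to rounding).
        window = gammas[-(d + 1):]
        if t > d and max(window) - min(window) < 1e-9:
            break
    return best_tour, best_len


# Toy instance (assumption): five cities evenly spaced on the unit circle.
cities = [(math.cos(2 * math.pi * k / 5), math.sin(2 * math.pi * k / 5))
          for k in range(5)]
dist = [[math.dist(a, b) for b in cities] for a in cities]
tour, length = cem_tsp(dist, seed=1)
print(tour, round(length, 4))
```

The stop rule compares levels with a small tolerance because a tour and its reverse sum the same edges in a different order and can differ in the last floating-point bits. For points in convex position the optimal tour follows the convex hull, so here the expected length is the pentagon perimeter $10\sin(\pi/5)$.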

