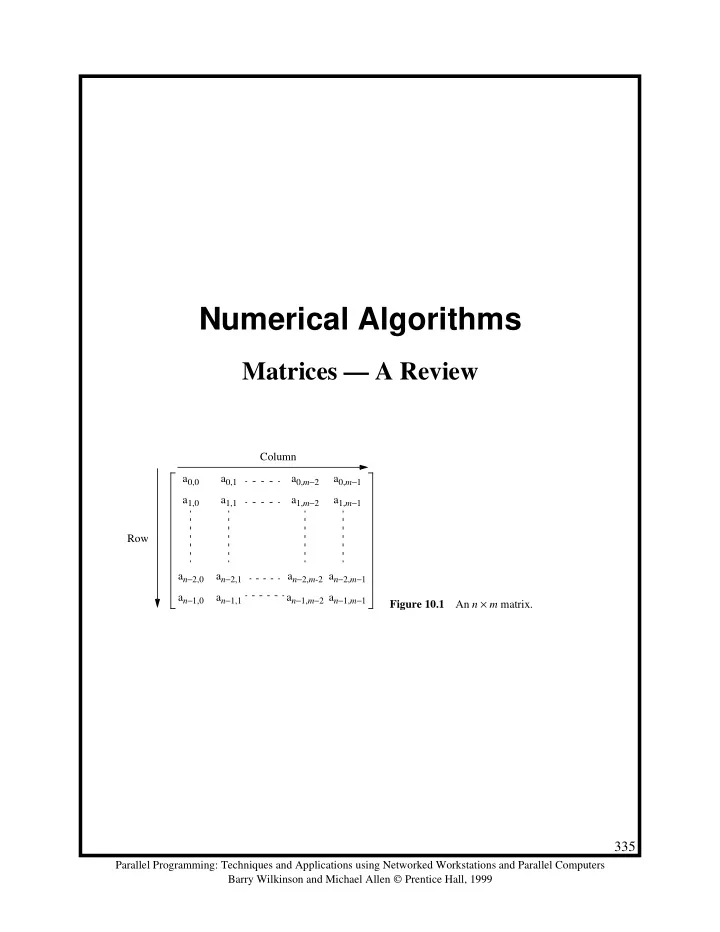

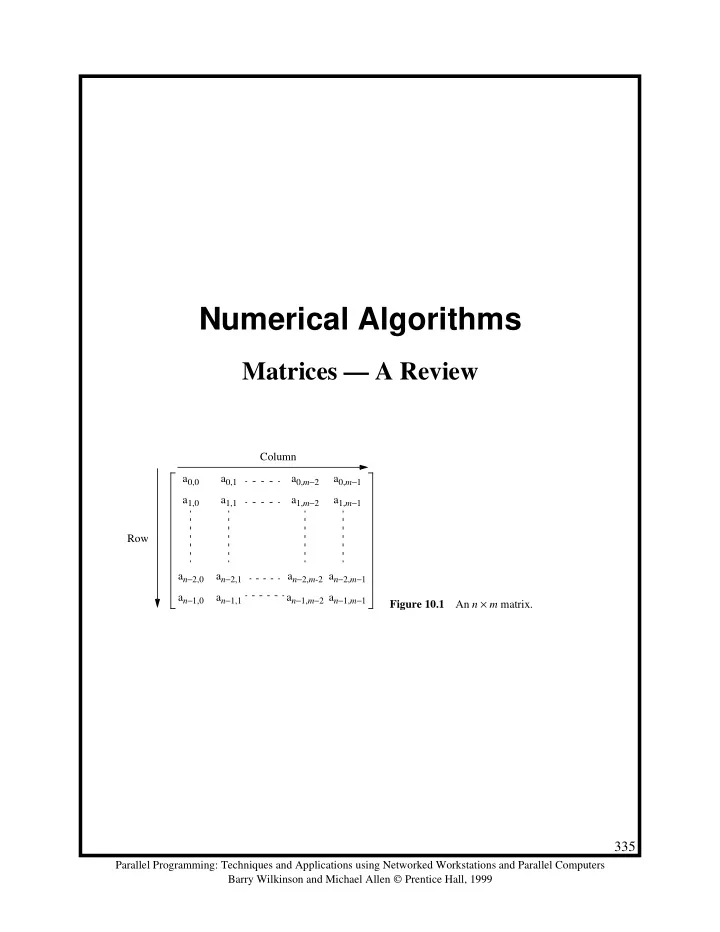

Numerical Algorithms

Matrices: A Review

$$
\begin{bmatrix}
a_{0,0} & a_{0,1} & \cdots & a_{0,m-2} & a_{0,m-1} \\
a_{1,0} & a_{1,1} & \cdots & a_{1,m-2} & a_{1,m-1} \\
\vdots & \vdots & & \vdots & \vdots \\
a_{n-2,0} & a_{n-2,1} & \cdots & a_{n-2,m-2} & a_{n-2,m-1} \\
a_{n-1,0} & a_{n-1,1} & \cdots & a_{n-1,m-2} & a_{n-1,m-1}
\end{bmatrix}
$$

Figure 10.1 An $n \times m$ matrix ($n$ rows, $m$ columns).

Parallel Programming: Techniques and Applications using Networked Workstations and Parallel Computers, Barry Wilkinson and Michael Allen, Prentice Hall, 1999

Matrix Addition

Matrix addition simply involves adding corresponding elements of each matrix to form the result matrix. Given the elements of $A$ as $a_{i,j}$ and the elements of $B$ as $b_{i,j}$, each element of $C$ is computed as

$$c_{i,j} = a_{i,j} + b_{i,j} \qquad (0 \le i < n,\ 0 \le j < m)$$
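The element-wise rule above can be sketched in C as follows. This is only an illustration: the function name `matrix_add` and the flat row-major storage (element $a_{i,j}$ at index `i * m + j`) are assumptions of this sketch, not something the slides specify.

```c
#include <assert.h>
#include <stddef.h>

/* C = A + B for n x m matrices.
   Assumption: matrices are stored row-major in flat arrays,
   so element (i, j) lives at index i * m + j. */
void matrix_add(size_t n, size_t m,
                const double *a, const double *b, double *c)
{
    assert(a != NULL && b != NULL && c != NULL);
    for (size_t i = 0; i < n; i++)
        for (size_t j = 0; j < m; j++)
            c[i * m + j] = a[i * m + j] + b[i * m + j];
}
```

Every element of the result is independent of the others, so the two loops can be split across processors in any way.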

Matrix Multiplication

Multiplication of two matrices, $A$ and $B$, produces the matrix $C$ whose elements, $c_{i,j}$ ($0 \le i < n$, $0 \le j < m$), are computed as follows:

$$c_{i,j} = \sum_{k=0}^{l-1} a_{i,k}\, b_{k,j}$$

where $A$ is an $n \times l$ matrix and $B$ is an $l \times m$ matrix.

Figure 10.2 Matrix multiplication, $C = A \times B$. Row $i$ of $A$ is multiplied element by element with column $j$ of $B$, and the results are summed to give $c_{i,j}$.
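For the general rectangular case, the summation can be written directly as three nested loops. The sketch below assumes flat row-major arrays; the name `matrix_multiply` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* C = A x B, where A is n x l, B is l x m and C is n x m.
   Assumption: all three matrices are flat row-major arrays. */
void matrix_multiply(size_t n, size_t l, size_t m,
                     const double *a, const double *b, double *c)
{
    assert(a != NULL && b != NULL && c != NULL);
    for (size_t i = 0; i < n; i++)
        for (size_t j = 0; j < m; j++) {
            double sum = 0.0;                 /* accumulates c[i][j] */
            for (size_t k = 0; k < l; k++)
                sum += a[i * l + k] * b[k * m + j];
            c[i * m + j] = sum;
        }
}
```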

Matrix-Vector Multiplication

A vector is a matrix with one column; i.e., an $n \times 1$ matrix. Matrix-vector multiplication follows directly from the definition of matrix-matrix multiplication by making $B$ an $n \times 1$ matrix. With $A$ an $n \times n$ matrix, the result is an $n \times 1$ matrix (vector).

Figure 10.3 Matrix-vector multiplication, $c = A \times b$. Element $c_i$ is the sum of the products of row $i$ of $A$ with the elements of $b$.
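As a minimal sketch of the row-sum computation, assuming a square $n \times n$ matrix in flat row-major storage (the name `matrix_vector_multiply` is hypothetical):

```c
#include <assert.h>
#include <stddef.h>

/* c = A x b, where A is n x n (flat, row-major) and b, c have n elements. */
void matrix_vector_multiply(size_t n, const double *a,
                            const double *b, double *c)
{
    assert(a != NULL && b != NULL && c != NULL);
    for (size_t i = 0; i < n; i++) {
        double sum = 0.0;                     /* row sum for c[i] */
        for (size_t k = 0; k < n; k++)
            sum += a[i * n + k] * b[k];
        c[i] = sum;
    }
}
```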

Relationship of Matrices to Linear Equations

A system of linear equations can be written in matrix form

$$Ax = b$$

The matrix $A$ holds the $a$ constants, $x$ is a vector of the unknowns, and $b$ is a vector of the $b$ constants.
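To make the correspondence concrete, here is a small worked example. The coefficients are illustrative values chosen for this sketch and do not come from the slides. The system on the left becomes the matrix equation on the right, and substituting $x_0 = 1$, $x_1 = 3$ satisfies both equations.

```latex
\begin{aligned}
2x_0 + x_1 &= 5 \\
x_0 + 3x_1 &= 10
\end{aligned}
\qquad\Longleftrightarrow\qquad
\begin{bmatrix} 2 & 1 \\ 1 & 3 \end{bmatrix}
\begin{bmatrix} x_0 \\ x_1 \end{bmatrix}
=
\begin{bmatrix} 5 \\ 10 \end{bmatrix}
```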

Implementing Matrix Multiplication

Sequential Code

Assume throughout that the matrices are square ($n \times n$ matrices). The sequential code to compute $A \times B$ could simply be

    for (i = 0; i < n; i++)
        for (j = 0; j < n; j++) {
            c[i][j] = 0;
            for (k = 0; k < n; k++)
                c[i][j] = c[i][j] + a[i][k] * b[k][j];
        }

This algorithm requires $n^3$ multiplications and $n^3$ additions, leading to a sequential time complexity of $O(n^3)$.

Parallel Code

Parallel code is usually based upon the direct sequential matrix multiplication algorithm. The iterations of the two outer loops in the sequential code are independent of one another, so they can be executed in parallel.

With $n$ processors (and $n \times n$ matrices), we can expect a parallel time complexity of $O(n^2)$, and this is easily obtainable.

It is also quite easy to obtain a time complexity of $O(n)$ with $n^2$ processors, where one element of $A$ and one element of $B$ are assigned to each processor.

These implementations are cost optimal, since $O(n^3) = n \times O(n^2) = n^2 \times O(n)$.

It is possible to obtain $O(\log n)$ with $n^3$ processors by parallelizing the inner loop, but this is not cost optimal, since $O(n^3) \ne n^3 \times O(\log n)$.

$O(\log n)$ is the lower bound for parallel matrix multiplication.
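The independence of the outer loop can be illustrated by giving each processor a contiguous range of rows of $C$. The sketch below is a sequential stand-in, not a parallel program: `multiply_partitioned` simply calls the per-processor routine once per hypothetical processor `rank`. Both function names and the flat row-major layout are assumptions of this sketch.

```c
#include <assert.h>
#include <stddef.h>

/* Work of one hypothetical processor: rows [row_begin, row_end) of C = A x B.
   It reads A and B but writes only its own rows of C. */
void multiply_row_range(size_t n, size_t row_begin, size_t row_end,
                        const double *a, const double *b, double *c)
{
    assert(row_begin <= row_end && row_end <= n);
    for (size_t i = row_begin; i < row_end; i++)
        for (size_t j = 0; j < n; j++) {
            double sum = 0.0;
            for (size_t k = 0; k < n; k++)
                sum += a[i * n + k] * b[k * n + j];
            c[i * n + j] = sum;
        }
}

/* Sequential stand-in for p processors, each given a block of rows.
   In a real program each call would run on a different processor. */
void multiply_partitioned(size_t n, size_t p,
                          const double *a, const double *b, double *c)
{
    assert(p > 0);
    for (size_t rank = 0; rank < p; rank++) {
        size_t begin = rank * n / p;
        size_t end = (rank + 1) * n / p;
        multiply_row_range(n, begin, end, a, b, c);
    }
}
```

With `p` equal to `n`, each call handles one row and performs $n^2$ multiply-add steps, which matches the $O(n^2)$ figure quoted above.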

Partitioning into Submatrices

Usually, we want to use far fewer than $n$ processors with $n \times n$ matrices because of the size of $n$.

Submatrices

Each matrix can be divided into blocks of elements called submatrices. These submatrices can be manipulated as if they were single matrix elements.

Suppose the matrix is divided into $s^2$ submatrices. Each submatrix has $n/s \times n/s$ elements. Using the notation $A_{p,q}$ for the submatrix in submatrix row $p$ and submatrix column $q$:

    for (p = 0; p < s; p++)
        for (q = 0; q < s; q++) {
            Cp,q = 0;                        /* clear elements of submatrix */
            for (r = 0; r < s; r++)          /* submatrix multiplication and */
                Cp,q = Cp,q + Ap,r * Br,q;   /* add to accumulating submatrix */
        }

The line $C_{p,q} = C_{p,q} + A_{p,r} \times B_{r,q}$ means multiply submatrices $A_{p,r}$ and $B_{r,q}$ using matrix multiplication and add the result to submatrix $C_{p,q}$ using matrix addition. The inner loop runs over the $s$ submatrices in submatrix row $p$ of $A$ and submatrix column $q$ of $B$. The arrangement is known as block matrix multiplication.

Figure 10.4 Block matrix multiplication. Submatrix row $p$ of $A$ is multiplied with submatrix column $q$ of $B$, and the results are summed to give $C_{p,q}$.
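The block algorithm can be turned into runnable C by expanding each submatrix operation into loops over the elements of the block. This is a sketch under stated assumptions: the matrices are flat row-major arrays, $n$ is divisible by $s$, and the names `block_matrix_multiply` and `bs` (the block side length $n/s$) are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* C = A x B for n x n matrices, computed one submatrix at a time.
   s is the number of submatrices along each dimension. */
void block_matrix_multiply(size_t n, size_t s,
                           const double *a, const double *b, double *c)
{
    assert(s > 0 && n % s == 0);
    size_t bs = n / s;                        /* each submatrix is bs x bs */

    for (size_t p = 0; p < s; p++)
        for (size_t q = 0; q < s; q++) {
            /* clear the elements of submatrix C(p,q) */
            for (size_t i = 0; i < bs; i++)
                for (size_t j = 0; j < bs; j++)
                    c[(p * bs + i) * n + (q * bs + j)] = 0.0;

            /* C(p,q) = C(p,q) + A(p,r) x B(r,q) for every r */
            for (size_t r = 0; r < s; r++)
                for (size_t i = 0; i < bs; i++)
                    for (size_t j = 0; j < bs; j++) {
                        double sum = 0.0;
                        for (size_t k = 0; k < bs; k++)
                            sum += a[(p * bs + i) * n + (r * bs + k)]
                                 * b[(r * bs + k) * n + (q * bs + j)];
                        c[(p * bs + i) * n + (q * bs + j)] += sum;
                    }
        }
}
```

Each `(p, q)` iteration touches only its own block of `c`, so the $s^2$ block computations could be handed to $s^2$ processors.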

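(a) Matrices

$$
\left[\begin{array}{cc|cc}
a_{0,0} & a_{0,1} & a_{0,2} & a_{0,3} \\
a_{1,0} & a_{1,1} & a_{1,2} & a_{1,3} \\
\hline
a_{2,0} & a_{2,1} & a_{2,2} & a_{2,3} \\
a_{3,0} & a_{3,1} & a_{3,2} & a_{3,3}
\end{array}\right]
\times
\left[\begin{array}{cc|cc}
b_{0,0} & b_{0,1} & b_{0,2} & b_{0,3} \\
b_{1,0} & b_{1,1} & b_{1,2} & b_{1,3} \\
\hline
b_{2,0} & b_{2,1} & b_{2,2} & b_{2,3} \\
b_{3,0} & b_{3,1} & b_{3,2} & b_{3,3}
\end{array}\right]
$$

(b) Multiplying $A_{0,0} \times B_{0,0}$ to obtain $C_{0,0}$

$$
\begin{aligned}
A_{0,0} \times B_{0,0} + A_{0,1} \times B_{1,0}
&= \begin{bmatrix} a_{0,0} & a_{0,1} \\ a_{1,0} & a_{1,1} \end{bmatrix}
\times
\begin{bmatrix} b_{0,0} & b_{0,1} \\ b_{1,0} & b_{1,1} \end{bmatrix}
+
\begin{bmatrix} a_{0,2} & a_{0,3} \\ a_{1,2} & a_{1,3} \end{bmatrix}
\times
\begin{bmatrix} b_{2,0} & b_{2,1} \\ b_{3,0} & b_{3,1} \end{bmatrix} \\
&= \begin{bmatrix}
a_{0,0}b_{0,0} + a_{0,1}b_{1,0} & a_{0,0}b_{0,1} + a_{0,1}b_{1,1} \\
a_{1,0}b_{0,0} + a_{1,1}b_{1,0} & a_{1,0}b_{0,1} + a_{1,1}b_{1,1}
\end{bmatrix}
+
\begin{bmatrix}
a_{0,2}b_{2,0} + a_{0,3}b_{3,0} & a_{0,2}b_{2,1} + a_{0,3}b_{3,1} \\
a_{1,2}b_{2,0} + a_{1,3}b_{3,0} & a_{1,2}b_{2,1} + a_{1,3}b_{3,1}
\end{bmatrix} \\
&= \begin{bmatrix}
a_{0,0}b_{0,0} + a_{0,1}b_{1,0} + a_{0,2}b_{2,0} + a_{0,3}b_{3,0} & a_{0,0}b_{0,1} + a_{0,1}b_{1,1} + a_{0,2}b_{2,1} + a_{0,3}b_{3,1} \\
a_{1,0}b_{0,0} + a_{1,1}b_{1,0} + a_{1,2}b_{2,0} + a_{1,3}b_{3,0} & a_{1,0}b_{0,1} + a_{1,1}b_{1,1} + a_{1,2}b_{2,1} + a_{1,3}b_{3,1}
\end{bmatrix} \\
&= C_{0,0}
\end{aligned}
$$

Figure 10.5 Submatrix multiplication.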

Direct Implementation

Allocate one processor to compute each element of $C$. Then $n^2$ processors would be needed.

Each processor needs one row of elements of $A$ and one column of elements of $B$. Some of the same elements must be sent to more than one processor.

Using submatrices, one processor would compute one $m \times m$ submatrix of $C$.

Figure 10.6 Direct implementation of matrix multiplication. Processor $P_{i,j}$ receives row $i$ of $A$ (`a[i][]`) and column $j$ of $B$ (`b[][j]`) and computes `c[i][j]`.
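The data flow for one processor $P_{i,j}$ can be sketched without any message-passing library by treating a plain buffer as the message. The function names `pack_row_and_column` and `compute_element`, the buffer layout, and the flat row-major storage are all assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Master side: pack row i of A and column j of B into one buffer
   of 2n values, which stands in for the message sent to P(i,j). */
void pack_row_and_column(size_t n, size_t i, size_t j,
                         const double *a, const double *b, double *message)
{
    assert(i < n && j < n);
    for (size_t k = 0; k < n; k++) {
        message[k] = a[i * n + k];            /* row i of A */
        message[n + k] = b[k * n + j];        /* column j of B */
    }
}

/* Slave side: n multiplications and n additions give c[i][j]. */
double compute_element(size_t n, const double *message)
{
    assert(message != NULL);
    double sum = 0.0;
    for (size_t k = 0; k < n; k++)
        sum += message[k] * message[n + k];
    return sum;
}
```

Packing for different `(i, j)` pairs copies the same rows and columns repeatedly, which is the duplication of data the slide points out.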

Analysis

Assuming $n \times n$ matrices and not submatrices.

Communication

With separate messages to each of the $n^2$ slave processors:

$$t_{comm} = n^2(t_{startup} + 2n\,t_{data}) + n^2(t_{startup} + t_{data}) = n^2\bigl(2t_{startup} + (2n + 1)\,t_{data}\bigr)$$

A broadcast along a single bus would yield

$$t_{comm} = (t_{startup} + n^2 t_{data}) + n^2(t_{startup} + t_{data})$$

The dominant time is now in returning the results, as $t_{startup}$ is usually significantly larger than $t_{data}$. A gather routine should reduce the time.

Computation

Each slave performs, in parallel, $n$ multiplications and $n$ additions; i.e.,

$$t_{comp} = 2n$$
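The two communication formulas are easy to compare numerically. The sketch below evaluates them as written; the function names are hypothetical, and the time values are in whatever unit `t_startup` and `t_data` are given in.

```c
#include <assert.h>

/* Separate messages: each of the n^2 slaves receives 2n values
   and returns one result. */
double t_comm_separate(double n, double t_startup, double t_data)
{
    assert(n > 0.0);
    return n * n * (t_startup + 2.0 * n * t_data)
         + n * n * (t_startup + t_data);
}

/* Single broadcast of the data, then n^2 individual result messages. */
double t_comm_broadcast(double n, double t_startup, double t_data)
{
    assert(n > 0.0);
    return (t_startup + n * n * t_data)
         + n * n * (t_startup + t_data);
}
```

For example, with $n = 4$, $t_{startup} = 100$ and $t_{data} = 1$ (illustrative values), the separate-message version gives 3344 and the broadcast version gives 1732, of which 1616 is the cost of returning the results.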

Performance Improvement

By using a tree construction, $n$ numbers can be added in $\log n$ steps using $n$ processors.

Figure 10.7 Accumulation using a tree construction. Processors $P_0$, $P_1$, $P_2$ and $P_3$ compute the products $a_{0,0}b_{0,0}$, $a_{0,1}b_{1,0}$, $a_{0,2}b_{2,0}$ and $a_{0,3}b_{3,0}$; $P_0$ and $P_2$ then each add a pair of products, and $P_0$ adds the two partial sums to give $c_{0,0}$.

This gives a computational time complexity of $O(\log n)$ using $n^3$ processors, instead of $O(n)$ using $n^2$ processors.
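The tree accumulation can be simulated sequentially to see where the $\log n$ step count comes from. In this sketch each pass of the outer loop is one parallel step; the inner loop lists the additions that separate processors would perform at the same time. The name `tree_sum` is hypothetical, and the input array is overwritten.

```c
#include <assert.h>
#include <stddef.h>

/* Sums x[0..n-1] in place using a binary tree pattern.
   The total ends up in x[0]; the return value is the number of steps. */
size_t tree_sum(size_t n, double *x)
{
    assert(n > 0 && x != NULL);
    size_t steps = 0;
    for (size_t stride = 1; stride < n; stride *= 2) {
        /* the additions within one step are independent of each other */
        for (size_t i = 0; i + stride < n; i += 2 * stride)
            x[i] += x[i + stride];
        steps++;
    }
    return steps;
}
```

With four products, as in Figure 10.7, the function takes two steps; in general it takes $\lceil \log_2 n \rceil$ steps.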

Submatrices

In every method, we can substitute submatrices for matrix elements to reduce the number of processors.

Let us select $m \times m$ submatrices and $s = n/m$. Then there are $s^2$ submatrices in each matrix and $s^2$ processors.

Communication

Each of the $s^2$ slave processors must separately receive one row and one column of submatrices. Each slave processor must separately return a $C$ submatrix to the master processor, giving a communication time of

$$t_{comm} = s^2\bigl\{2(t_{startup} + nm\,t_{data}) + (t_{startup} + m^2 t_{data})\bigr\} = (n/m)^2\bigl\{3t_{startup} + (m^2 + 2nm)\,t_{data}\bigr\}$$

Complete matrices could be broadcast to every processor.

As the size of the submatrices, $m$, is increased, the data transmission time of each message increases but the actual number of messages decreases.

Computation

One sequential submatrix multiplication requires $m^3$ multiplications and $m^3$ additions. A submatrix addition requires $m^2$ additions. Hence

$$t_{comp} = s(2m^3 + m^2) = O(sm^3) = O(nm^2)$$
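The trade-off in choosing $m$ can be explored by evaluating the two formulas for different submatrix sizes. The function names below are hypothetical, and the sketch assumes $m$ divides $n$ so that $s = n/m$ is a whole number.

```c
#include <assert.h>

/* Communication time with m x m submatrices and s = n / m. */
double submatrix_t_comm(double n, double m, double t_startup, double t_data)
{
    assert(m > 0.0 && n >= m);
    double s = n / m;
    return s * s * (2.0 * (t_startup + n * m * t_data)
                    + (t_startup + m * m * t_data));
}

/* Computation steps per processor: s submatrix multiplications
   (2m^3 operations each) and s submatrix additions (m^2 each). */
double submatrix_t_comp(double n, double m)
{
    assert(m > 0.0 && n >= m);
    double s = n / m;
    return s * (2.0 * m * m * m + m * m);
}
```

With $n = 8$, $t_{startup} = 100$ and $t_{data} = 1$ (illustrative values), $m = 2$ gives $t_{comm} = 5376$ and $t_{comp} = 80$, while $m = 4$ gives $t_{comm} = 1520$ and $t_{comp} = 288$. Larger submatrices mean fewer messages but more computation per processor.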
