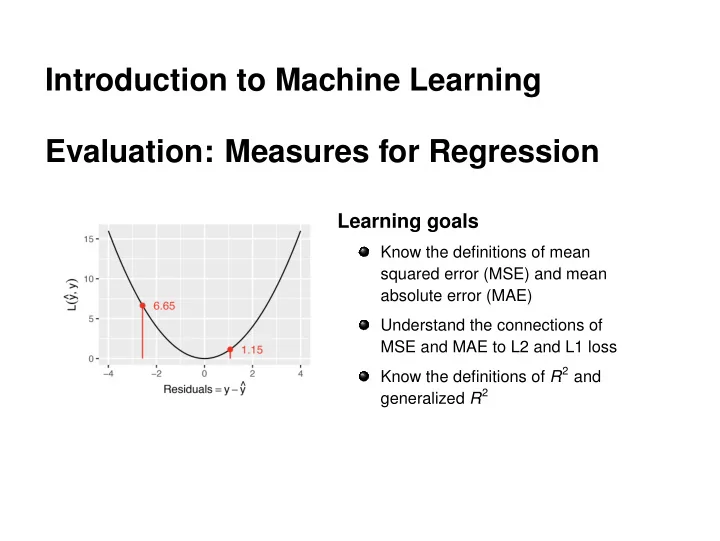

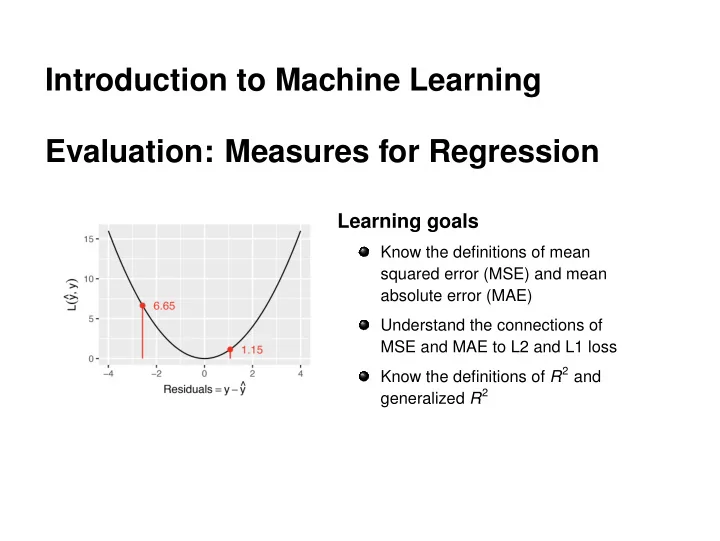

Introduction to Machine Learning

Evaluation: Measures for Regression

Learning goals

- Know the definitions of mean squared error (MSE) and mean absolute error (MAE)
- Understand the connections of MSE and MAE to L2 and L1 loss
- Know the definitions of $R^2$ and generalized $R^2$

MEAN SQUARED ERROR

$$
\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left(y^{(i)} - \hat{y}^{(i)}\right)^2 \in [0; \infty) \quad \rightarrow \text{L2 loss.}
$$

Single observations with a large prediction error heavily influence the MSE, as they enter quadratically.

[Figure: left panel, L2 loss $L(y, \hat{y})$ plotted against the residuals $y - \hat{y}$; right panel, observations $y$ plotted against $x$, with two example points annotated by their squared losses 6.65 and 1.15.]

Similar measures: sum of squared errors (SSE), and root mean squared error (RMSE), which brings the measure back to the original scale of the outcome.

© Introduction to Machine Learning – 1 / 4
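As a minimal sketch in plain Python, the three measures can be computed directly from their definitions. The function names `sse`, `mse` and `rmse` and the toy data are our own illustrative choices, not taken from any library.

```python
import math

def sse(y, y_hat):
    """Sum of squared errors: sum of the squared residuals."""
    return sum((yi - pi) ** 2 for yi, pi in zip(y, y_hat))

def mse(y, y_hat):
    """Mean squared error: SSE divided by the number of observations (L2 loss)."""
    return sse(y, y_hat) / len(y)

def rmse(y, y_hat):
    """Root mean squared error: back on the original scale of the outcome."""
    return math.sqrt(mse(y, y_hat))

# Hypothetical toy data.
y = [3.0, 5.0, 2.0, 7.0]
y_hat = [2.0, 5.0, 2.0, 3.0]  # residuals y - y_hat: 1, 0, 0, 4

print(sse(y, y_hat))   # 17.0
print(mse(y, y_hat))   # 4.25
print(rmse(y, y_hat))  # about 2.06
```

In this toy example, the single residual of 4 contributes 16 of the 17 units of SSE, which illustrates how one large error dominates the MSE.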

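MEAN ABSOLUTE ERROR

$$
\text{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left|y^{(i)} - \hat{y}^{(i)}\right| \in [0; \infty) \quad \rightarrow \text{L1 loss.}
$$

The MAE is less influenced by large errors than the MSE and is arguably more intuitive, as it is on the same scale as the outcome.

[Figure: left panel, L1 loss $L(y, \hat{y})$ plotted against the residuals $y - \hat{y}$; right panel, observations $y$ plotted against $x$, with the two example points annotated by their absolute losses 2.58 and 1.07 (compared with their squared losses 6.65 and 1.15).]

Similar measures: median absolute error (for even more robustness).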
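A minimal sketch comparing MSE, MAE and median absolute error on hypothetical toy data; the helper names are illustrative only. Inflating one residual from 4 to 40 shows how differently the three measures react to a single large error.

```python
import statistics

def mae(y, y_hat):
    """Mean absolute error: average of the absolute residuals (L1 loss)."""
    return sum(abs(yi - pi) for yi, pi in zip(y, y_hat)) / len(y)

def median_ae(y, y_hat):
    """Median absolute error: median of the absolute residuals."""
    return statistics.median([abs(yi - pi) for yi, pi in zip(y, y_hat)])

def mse(y, y_hat):
    """Mean squared error, included for comparison."""
    return sum((yi - pi) ** 2 for yi, pi in zip(y, y_hat)) / len(y)

y_hat = [2.0, 5.0, 2.0, 3.0]
y_clean = [3.0, 5.0, 2.0, 7.0]     # residuals 1, 0, 0, 4
y_outlier = [3.0, 5.0, 2.0, 43.0]  # residuals 1, 0, 0, 40

for y in (y_clean, y_outlier):
    print(mse(y, y_hat), mae(y, y_hat), median_ae(y, y_hat))
# 4.25 1.25 0.5
# 400.25 10.25 0.5
```

The MSE grows quadratically with the large residual (4.25 to 400.25), the MAE only linearly (1.25 to 10.25), and the median absolute error does not move at all.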

$R^2$

Well-known measure from statistics:

$$
R^2 = 1 - \frac{\sum_{i=1}^{n} \left(y^{(i)} - \hat{y}^{(i)}\right)^2}{\sum_{i=1}^{n} \left(y^{(i)} - \bar{y}\right)^2} = 1 - \frac{\text{SSE}_\text{LinMod}}{\text{SSE}_\text{Intercept}}
$$

- Usually introduced as the fraction of variance explained by the model
- Simpler view: it compares the SSE of the constant model (baseline) with that of the more complex model (LM)
- $R^2 = 1$: all residuals are 0, we predict perfectly; $R^2 = 0$: we predict as badly as the constant model
- If measured on the training data, $R^2 \in [0; 1]$, because an LM with intercept fitted by least squares is at least as good as the constant model
- On other data, $R^2$ can even be negative, as there is no guarantee that the LM generalizes better than a constant (overfitting)
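A minimal sketch of $R^2$ as defined above, in plain Python. To illustrate the difference between training data and other data, it fits a one-feature LM with intercept by least squares on hypothetical toy training data and evaluates it on two new toy points. One assumption to note: on the new data, the constant baseline uses the mean $\bar{y}$ of that data, which is one common convention. The helpers `r_squared` and `fit_simple_lm` are our own illustrative names.

```python
def r_squared(y, y_hat):
    """R^2 = 1 - SSE(model) / SSE(constant model predicting the mean of y)."""
    y_bar = sum(y) / len(y)
    sse_model = sum((yi - pi) ** 2 for yi, pi in zip(y, y_hat))
    sse_const = sum((yi - y_bar) ** 2 for yi in y)
    return 1.0 - sse_model / sse_const

def fit_simple_lm(x, y):
    """Least-squares fit of y = a + b * x; returns a prediction function."""
    x_bar = sum(x) / len(x)
    y_bar = sum(y) / len(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sxy / sxx
    a = y_bar - b * x_bar
    return lambda xs: [a + b * xi for xi in xs]

# Hypothetical toy data.
x_train, y_train = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 2.0, 4.0]
x_test, y_test = [4.0, 5.0], [2.0, 0.0]

predict = fit_simple_lm(x_train, y_train)
r2_train = r_squared(y_train, predict(x_train))  # within [0, 1] on training data
r2_test = r_squared(y_test, predict(x_test))     # may be negative on new data
print(r2_train, r2_test)
```

On these toy data the training $R^2$ is about 0.64, while on the new points the fitted line extrapolates badly and $R^2$ drops to about -16.17.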

GENERALIZED $R^2$ FOR ML

A simple generalization of $R^2$ for ML seems to be:

$$
1 - \frac{\text{Loss}_\text{ComplexModel}}{\text{Loss}_\text{SimplerModel}}
$$

- Works for arbitrary measures (not only SSE), for arbitrary models, and on any data set of interest
- E.g., model vs. constant, LM vs. non-linear model, tree vs. forest, model without some features vs. model with them included
- Fairly unknown; our terminology (generalized $R^2$) is non-standard
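The formula is easy to implement for any loss. The sketch below is a minimal, hypothetical helper (`generalized_r2` and the `Loss` type alias are our own names) that takes the loss as a function argument. With the MSE and a constant mean baseline it reproduces the classical $R^2$; plugging in the MAE gives an L1-based variant. The toy data are made up for illustration.

```python
from typing import Callable, Sequence

# A loss maps (true values, predictions) to a single number.
Loss = Callable[[Sequence[float], Sequence[float]], float]

def mse(y, y_hat):
    return sum((yi - pi) ** 2 for yi, pi in zip(y, y_hat)) / len(y)

def mae(y, y_hat):
    return sum(abs(yi - pi) for yi, pi in zip(y, y_hat)) / len(y)

def generalized_r2(loss: Loss, y, y_hat_complex, y_hat_simple) -> float:
    """1 - Loss(complex model) / Loss(simpler model).

    Assumes the simpler model's loss is strictly positive.
    """
    return 1.0 - loss(y, y_hat_complex) / loss(y, y_hat_simple)

y = [2.0, 4.0, 6.0, 8.0]
y_hat_const = [5.0] * 4             # simpler model: constant mean of y
y_hat_model = [2.0, 5.0, 6.0, 7.0]  # predictions of some more complex model

print(generalized_r2(mse, y, y_hat_model, y_hat_const))  # 0.9, the classical R^2 here
print(generalized_r2(mae, y, y_hat_model, y_hat_const))  # 0.75
```

Passing the loss as a parameter mirrors the point that the construction works for arbitrary measures and arbitrary pairs of models, e.g. a tree as the simpler model and a forest as the complex one.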
