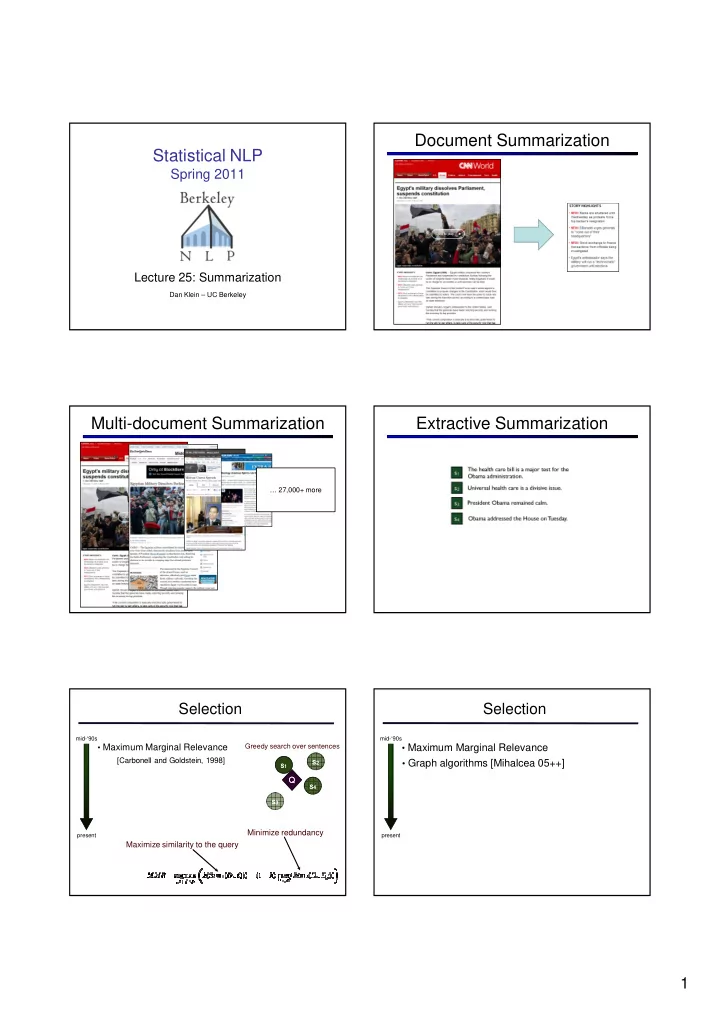

Document Summarization

Statistical NLP, Spring 2011
Lecture 25: Summarization
Dan Klein – UC Berkeley

Multi-document Summarization (… 27,000+ more)

Extractive Summarization

Selection (mid-'90s to present)
• Maximum Marginal Relevance [Carbonell and Goldstein, 1998]: greedy search over sentences that maximizes similarity to the query Q while minimizing redundancy with the sentences already chosen
• Graph algorithms [Mihalcea 05++]
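A minimal sketch of greedy MMR selection, assuming bag-of-words cosine similarity and a trade-off weight `lam`. The representation, the weight, and the function names are illustrative choices, not something the slide specifies; the four sentences are the running example from the Gillick and Favre slides later in the lecture.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts: a deliberately simple sentence representation."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[w] for w, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mmr_select(sentences, query, k, lam=0.7):
    """Greedily pick up to k sentence indices by Maximum Marginal Relevance.

    score(i) = lam * sim(i, query) - (1 - lam) * max_{j selected} sim(i, j)
    """
    vecs = [bag_of_words(s) for s in sentences]
    q = bag_of_words(query)
    selected = []
    candidates = list(range(len(sentences)))
    while candidates and len(selected) < k:
        def mmr_score(i):
            redundancy = max((cosine(vecs[i], vecs[j]) for j in selected), default=0.0)
            return lam * cosine(vecs[i], q) - (1 - lam) * redundancy
        best = max(candidates, key=mmr_score)  # ties go to the earliest index
        selected.append(best)
        candidates.remove(best)
    return selected


SENTENCES = [
    "The health care bill is a major test for the Obama administration.",
    "Universal health care is a divisive issue.",
    "President Obama remained calm.",
    "Obama addressed the House on Tuesday.",
]
print(mmr_select(SENTENCES, "Obama health care", k=2))           # [0, 1]
print(mmr_select(SENTENCES, "Obama health care", k=2, lam=0.5))  # [0, 2]
```

With `lam=0.7` the second pick is the sentence that best matches the query; at `lam=0.5` the redundancy penalty dominates, and the second pick switches from the sentence repeating "health care" to "President Obama remained calm."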

Selection (mid-'90s to present)
• Maximum Marginal Relevance
• Graph algorithms: nodes are sentences, edges are similarities, and the stationary distribution represents node centrality

Selection (mid-'90s to present)
• Maximum Marginal Relevance
• Graph algorithms
• Word distribution models: the summary distribution $P_A(w)$ should resemble the input document distribution $P_D(w)$

| w | $P_D(w)$ (input) | $P_A(w)$ (summary) |
|---|---|---|
| Obama | 0.017 | ? |
| speech | 0.024 | ? |
| health | 0.009 | ? |
| Montana | 0.002 | ? |

Selection (mid-'90s to present)
• Maximum Marginal Relevance
• Graph algorithms
• Word distribution models
• Regression models

SumBasic [Nenkova and Vanderwende, 2005]
• $\mathrm{Value}(w_i) = P_D(w_i)$
• $\mathrm{Value}(s_i)$ = sum of its word values
• Choose the $s_i$ with the largest value
• Adjust $P_D(w)$
• Repeat until the length constraint is reached

Regression models score each sentence with a learned function $F(x)$ of its features; frequency is just one of many features:

| sentence | word values | position | length |
|---|---|---|---|
| s1 | 12 | 1 | 24 |
| s2 | 4 | 2 | 14 |
| s3 | 6 | 3 | 18 |
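The graph view can be sketched as power iteration for the stationary distribution of a random walk whose transition probabilities are row-normalized cosine similarities. The damping term (as in PageRank and LexRank), the similarity measure, and all names are assumptions of this sketch; damping keeps the walk from getting stuck in disconnected parts of the graph.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[w] for w, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def centrality(sentences, damping=0.85, max_iters=200, tol=1e-12):
    """Stationary distribution of a damped random walk on the sentence-similarity graph."""
    n = len(sentences)
    vecs = [bag_of_words(s) for s in sentences]
    # Nodes are sentences; edge weights are pairwise similarities (no self-loops).
    weights = [[cosine(vecs[i], vecs[j]) if i != j else 0.0 for j in range(n)]
               for i in range(n)]
    # Row-normalize into transition probabilities; a node with no edges jumps uniformly.
    trans = []
    for row in weights:
        total = sum(row)
        trans.append([w / total for w in row] if total > 0 else [1.0 / n] * n)
    p = [1.0 / n] * n
    for _ in range(max_iters):
        new_p = [(1 - damping) / n + damping * sum(p[i] * trans[i][j] for i in range(n))
                 for j in range(n)]
        converged = max(abs(a - b) for a, b in zip(new_p, p)) < tol
        p = new_p
        if converged:
            break
    return p


SENTENCES = [
    "The health care bill is a major test for the Obama administration.",
    "Universal health care is a divisive issue.",
    "President Obama remained calm.",
    "Obama addressed the House on Tuesday.",
]
scores = centrality(SENTENCES)
ranking = sorted(range(len(SENTENCES)), key=lambda i: -scores[i])
print(ranking)
```

The first sentence ranks highest here because it is the only one that shares words with each of the other three.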

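A simplified SumBasic loop following the steps listed for SumBasic. Two details are taken from the published algorithm rather than the slide, so treat them as assumptions here: a sentence's value is the *average* of its word values (a plain sum favors long sentences), and "Adjust $P_D(w)$" squares the probability of each word just selected. Tokenization and names are illustrative.

```python
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def sumbasic(sentences, max_words):
    """Simplified SumBasic: returns selected sentence indices in selection order."""
    tokens = [tokenize(s) for s in sentences]
    counts = Counter(w for toks in tokens for w in toks)
    total = sum(counts.values())
    p = {w: c / total for w, c in counts.items()}  # P_D(w), the input word distribution

    def value(i):
        # Average word value of sentence i (reads the current, possibly adjusted, p).
        return sum(p[w] for w in tokens[i]) / len(tokens[i]) if tokens[i] else 0.0

    summary, length = [], 0
    remaining = list(range(len(sentences)))
    while True:
        fits = [i for i in remaining if length + len(tokens[i]) <= max_words]
        if not fits:
            break  # length constraint reached
        best = max(fits, key=value)
        summary.append(best)
        remaining.remove(best)
        length += len(tokens[best])
        # Adjust P_D(w): square the probability of each word just covered,
        # so later picks favor content not yet in the summary.
        for w in set(tokens[best]):
            p[w] = p[w] ** 2
    return summary


SENTENCES = [
    "The health care bill is a major test for the Obama administration.",
    "Universal health care is a divisive issue.",
    "President Obama remained calm.",
    "Obama addressed the House on Tuesday.",
]
print(sumbasic(SENTENCES, max_words=18))  # [0, 2]
```

With an 18-word limit this picks s1 and then s3 (indices 0 and 2): before the update s4 outscores s3, but squaring the probabilities of "obama" and "the" after s1 is chosen makes s4 the weaker of the two.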
Selection (mid-'90s to present)
• Maximum Marginal Relevance
• Graph algorithms
• Word distribution models
• Regression models
• Topic model-based [Haghighi and Vanderwende, 2009]
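Haghighi and Vanderwende (2009) also use KLSum, a simpler baseline built on the word-distribution view: greedily add the sentence that keeps the summary distribution $P_A$ closest to $P_D$ under $\mathrm{KL}(P_D \,\|\, P_A)$. This is a sketch of that criterion, not of the topic model itself; the add-alpha smoothing of $P_A$ (so the divergence stays finite) and all names are assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def kl_divergence(p, q):
    """KL(p || q) for dicts over the same vocabulary; q must be positive wherever p is."""
    return sum(pw * math.log(pw / q[w]) for w, pw in p.items() if pw > 0)


def smoothed_distribution(counts, vocab, alpha):
    """Add-alpha estimate of P_A(w), so unseen words keep a small nonzero probability."""
    total = sum(counts.values()) + alpha * len(vocab)
    return {w: (counts[w] + alpha) / total for w in vocab}


def klsum(sentences, max_words, alpha=0.01):
    tokens = [tokenize(s) for s in sentences]
    doc_counts = Counter(w for toks in tokens for w in toks)
    vocab = set(doc_counts)
    total = sum(doc_counts.values())
    p_doc = {w: c / total for w, c in doc_counts.items()}  # P_D(w)

    summary, summary_counts, length = [], Counter(), 0
    while True:
        fits = [i for i in range(len(sentences))
                if i not in summary and length + len(tokens[i]) <= max_words]
        if not fits:
            break
        # Pick the sentence whose addition brings P_A closest to P_D.
        best = min(fits, key=lambda i: kl_divergence(
            p_doc, smoothed_distribution(summary_counts + Counter(tokens[i]), vocab, alpha)))
        summary.append(best)
        summary_counts.update(tokens[best])
        length += len(tokens[best])
    return summary


SENTENCES = [
    "The health care bill is a major test for the Obama administration.",
    "Universal health care is a divisive issue.",
    "President Obama remained calm.",
    "Obama addressed the House on Tuesday.",
]
print(klsum(SENTENCES, max_words=18))
```

Unlike SumBasic, no probabilities are adjusted by hand: repeating words the summary already contains pulls $P_A$ away from $P_D$, so redundancy is penalized implicitly.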

Selection (mid-'90s to present; timeline marks PYTHY and H & V 09)
• Maximum Marginal Relevance
• Graph algorithms
• Word distribution models
• Regression models
• Topic models
• Globally optimal search

Optimal search using MMR: an Integer Linear Program [McDonald, 2007]

Selection [Gillick and Favre, 2008]

s1: The health care bill is a major test for the Obama administration.
s2: Universal health care is a divisive issue.
s3: President Obama remained calm.
s4: Obama addressed the House on Tuesday.

| concept | value |
|---|---|
| obama | 3 |
| health | 2 |
| house | 1 |

Maximize Concept Coverage

Optimization problem: Set Coverage

$$\max_{s \in \mathcal{S}(D)} \sum_{c \in C(s)} v_c$$

where $v_c$ is the value of concept $c$, $C(s)$ is the set of concepts present in summary $s$, and $\mathcal{S}(D)$ is the set of extractive summaries of document set $D$.

Length limit: 18 words

| | summary | length | value |
|---|---|---|---|
| greedy | {s1, s3} | 17 | 5 |
| optimal | {s2, s3, s4} | 17 | 6 |

Results [Gillick and Favre 09]

| | Bigram Recall | Pyramid |
|---|---|---|
| Baseline | 4.00 | 23.5 |
| 2009 | 6.85 | 35.0 |
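At this size the set-coverage objective can be solved by brute force. In this sketch (names and the whitespace word count are assumptions; indices are 0-based, so s1 is index 0), each subset of sentences is scored by the total value of the distinct concepts it covers, and the best subset within the 18-word limit is kept.

```python
import re
from itertools import combinations

SENTENCES = [
    "The health care bill is a major test for the Obama administration.",  # s1
    "Universal health care is a divisive issue.",                            # s2
    "President Obama remained calm.",                                        # s3
    "Obama addressed the House on Tuesday.",                                 # s4
]
CONCEPT_VALUES = {"obama": 3, "health": 2, "house": 1}  # v_c from the slide


def concepts(sentence):
    """The valued concepts that occur in one sentence."""
    return set(re.findall(r"[a-z]+", sentence.lower())) & set(CONCEPT_VALUES)


def value(summary):
    """Sum of v_c over covered concepts; each concept counts once."""
    covered = set()
    for i in summary:
        covered |= concepts(SENTENCES[i])
    return sum(CONCEPT_VALUES[c] for c in covered)


def length(summary):
    return sum(len(SENTENCES[i].split()) for i in summary)


def best_summary(max_words):
    """Exhaustive search over all sentence subsets (exponential; toy sizes only)."""
    best = ()
    for r in range(1, len(SENTENCES) + 1):
        for subset in combinations(range(len(SENTENCES)), r):
            if length(subset) <= max_words and value(subset) > value(best):
                best = subset
    return best


best = best_summary(18)
print(value(best), length(best))  # 6 18
```

Several subsets tie at the optimal value 6, including the slide's {s2, s3, s4} and the shorter {s2, s4}; this loop keeps the first one it reaches, {s1, s4}.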

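Selection [Gillick and Favre, 2009]

Integer Linear Program for the maximum coverage model [Gillick, Riedhammer, Favre, Hakkani-Tur, 2008]:

$$
\begin{aligned}
\max_{c,\,s} \quad & \sum_i v_i c_i && \text{(total concept value)} \\
\text{s.t.} \quad & \sum_j l_j s_j \le L && \text{(summary length limit)} \\
& s_j \,\mathrm{Occ}_{ij} \le c_i \quad \forall i, j && \text{(maintain consistency between} \\
& \sum_j s_j \,\mathrm{Occ}_{ij} \ge c_i \quad \forall i && \text{selected sentences and concepts)} \\
& c_i \in \{0, 1\},\ s_j \in \{0, 1\}
\end{aligned}
$$

Here $c_i$ indicates that concept $i$ (value $v_i$) is covered, $s_j$ that sentence $j$ (length $l_j$) is selected, and $\mathrm{Occ}_{ij} = 1$ when concept $i$ occurs in sentence $j$.

This ILP is tractable for reasonable problems.

Results [G & F, 2009]: Error Breakdown?

Evaluation [Gillick and Favre, 2008]:
• 52 submissions
• 27 teams
• 44 topics
• 10 input docs
• 100-word summaries

Result charts comparing Gillick & Favre with the other submissions:
• Rating scale: 1-10; humans in [8.3, 9.3]
• Rating scale: 0-1; humans in [0.62, 0.77]
• Rating scale: 1-10; humans in [8.5, 9.3]
• Rating scale: 0-1; humans in [0.11, 0.15]

Selection
• Some interesting work on sentence ordering [Barzilay et al., 1997; 2002]
• Baseline ordering module (chronological) is not obviously worse than anything fancier

First sentences are unique. How to include sentence position?
But choosing independent sentences is easier:
• First sentences usually stand alone well
• Sentences without unresolved pronouns
• Classifier trained on OntoNotes: <10% error rate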
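The ILP can be checked on the s1 to s4 toy instance by enumerating every 0/1 assignment of the sentence variables $s_j$ and concept variables $c_i$ and keeping the best feasible one. This only shows what the constraints do; a real system would hand the same model to an ILP solver, and the variable encoding here is an illustrative assumption.

```python
from itertools import product

# Toy instance (hypothetical encoding, 0-based indices).
VALUES = [3, 2, 1]          # v_i for concepts: obama, health, house
LENGTHS = [12, 7, 4, 6]     # l_j for sentences s1..s4, in words
OCC = [                     # OCC[i][j] = 1 if concept i occurs in sentence j
    [1, 0, 1, 1],           # obama
    [1, 1, 0, 0],           # health
    [0, 0, 0, 1],           # house
]


def feasible(s, c, limit):
    """Check an assignment of the 0/1 variables against the ILP constraints."""
    if sum(l * sj for l, sj in zip(LENGTHS, s)) > limit:  # summary length limit
        return False
    for i, row in enumerate(OCC):
        # Selecting a sentence forces every concept it contains to be switched on ...
        if any(sj * occ > c[i] for sj, occ in zip(s, row)):
            return False
        # ... and a concept can be on only if some selected sentence contains it.
        if sum(sj * occ for sj, occ in zip(s, row)) < c[i]:
            return False
    return True


def solve_by_enumeration(limit):
    """Maximize total concept value over all 0/1 assignments (exponential; illustration only)."""
    best, best_value = None, -1
    for s in product((0, 1), repeat=len(LENGTHS)):
        for c in product((0, 1), repeat=len(VALUES)):
            if feasible(s, c, limit):
                total = sum(v * ci for v, ci in zip(VALUES, c))
                if total > best_value:
                    best, best_value = (s, c), total
    return best, best_value


(s, c), total = solve_by_enumeration(18)
print(s, c, total)  # (0, 1, 0, 1) (1, 1, 1) 6
```

The two consistency constraints leave exactly one feasible concept vector for each sentence selection: $c_i = 1$ precisely when some selected sentence contains concept $i$.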

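The chronological ordering baseline amounts to a sort. A minimal sketch, assuming each extracted sentence carries its source document's date, a document id, and its position in that document; the `Extract` schema and the example dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Extract:
    """A selected sentence plus the metadata needed to order it (hypothetical schema)."""
    text: str
    doc_date: date   # publication date of the source document
    doc_id: str      # breaks ties between documents published on the same day
    position: int    # sentence index within the source document


def chronological_order(extracts):
    """Earlier documents first; within a document, keep the original sentence order."""
    return sorted(extracts, key=lambda e: (e.doc_date, e.doc_id, e.position))


summary = [
    Extract("Obama addressed the House on Tuesday.", date(2009, 9, 9), "b", 0),
    Extract("Universal health care is a divisive issue.", date(2009, 9, 1), "a", 3),
    Extract("President Obama remained calm.", date(2009, 9, 9), "b", 2),
]
for e in chronological_order(summary):
    print(e.text)
```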
Problems with Extraction

It is therefore unsurprising that Lindsay pleaded not guilty yesterday afternoon to the charges filed against her, according to her publicist.

What would a human do?

Sentence Rewriting [Berg-Kirkpatrick, Gillick, and Klein 11]

New optimization problem: Safe Deletions

$$\max_{s} \sum_{c \in C(s)} v_c + \sum_{d \in D(s)} v_d$$

where $v_d$ is the value of deletion $d$ and $D(s)$ is the set of branch-cut deletions made in creating summary $s$.

How do we know how much a given deletion costs?
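A toy version of the safe-deletion objective on the Lindsay sentence: each candidate deletion has a value $v_d$, concepts keep their values $v_c$ if they survive, and the best combination under a length limit is found by enumeration. Flat token spans stand in for the parse-tree (branch-cut) deletions, and every span and value below is hand-set and hypothetical; how to estimate those values is exactly the question the slide raises.

```python
from itertools import combinations

TOKENS = ("It is therefore unsurprising that Lindsay pleaded not guilty yesterday "
          "afternoon to the charges filed against her according to her publicist").split()

# Hypothetical candidate deletions: (start, end) token spans with hand-set values v_d.
# The spans do not overlap, so any subset of them is a valid combination.
DELETIONS = {
    (0, 5): 1.0,     # "It is therefore unsurprising that"
    (7, 8): -10.0,   # "not": flips the meaning, so deleting it is very costly
    (9, 11): 0.5,    # "yesterday afternoon"
    (14, 17): -0.5,  # "filed against her"
    (17, 21): 1.0,   # "according to her publicist"
}
# Hypothetical concept values v_c.
CONCEPT_VALUES = {"lindsay": 3, "guilty": 2, "charges": 1, "publicist": 1}


def kept_tokens(deletions):
    removed = {k for start, end in deletions for k in range(start, end)}
    return [t for k, t in enumerate(TOKENS) if k not in removed]


def score(deletions):
    """Value of the surviving concepts plus the values of the deletions made."""
    kept = {t.lower() for t in kept_tokens(deletions)}
    concept_value = sum(v for c, v in CONCEPT_VALUES.items() if c in kept)
    return concept_value + sum(DELETIONS[d] for d in deletions)


def best_compression(max_words):
    """Enumerate every subset of candidate deletions and keep the best one that fits."""
    best, best_score = None, float("-inf")
    spans = sorted(DELETIONS)
    for r in range(len(spans) + 1):
        for chosen in combinations(spans, r):
            if len(kept_tokens(chosen)) <= max_words and score(chosen) > best_score:
                best, best_score = chosen, score(chosen)
    return best, best_score


best, best_score = best_compression(12)
print(" ".join(kept_tokens(best)), best_score)
# Lindsay pleaded not guilty to the charges filed against her 8.5
```

Deleting "not" would also fit the 12-word budget, but its large negative value keeps it out of the best combination.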

Learning [Berg-Kirkpatrick, Gillick, and Klein 11]
• Learn the values from features
• Embed the ILP in a cutting plane algorithm

Results

| | Bigram Recall | Pyramid |
|---|---|---|
| Baseline | 4.00 | 23.5 |
| 2009 | 6.85 | 35.0 |
| Now | 7.75 | 41.3 |

Beyond Extraction / Compression?
Sentence extraction is limiting ... and boring!
But abstractive summaries are much harder to generate… in 25 words?
http://www.rinkworks.com/bookaminute/
