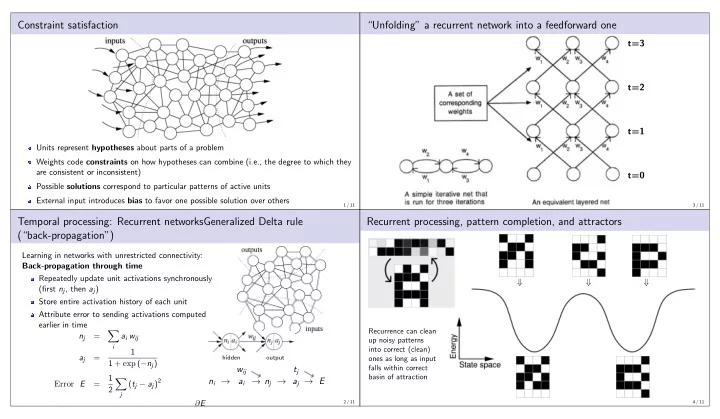

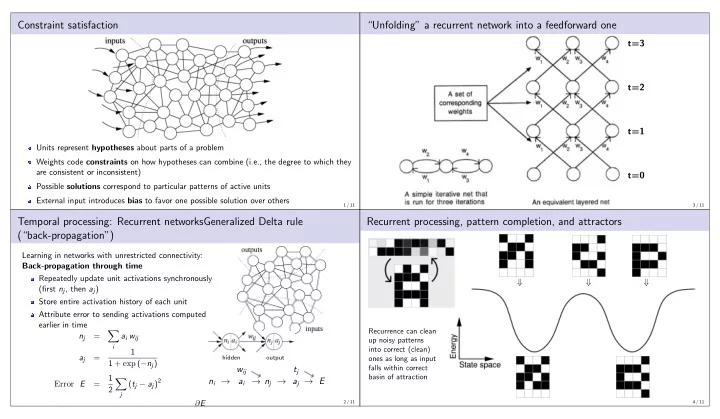

Constraint satisfaction

Units represent hypotheses about parts of a problem. Weights code constraints on how hypotheses can combine (i.e., the degree to which they are consistent or inconsistent). Possible solutions correspond to particular patterns of active units. External input introduces bias to favor one possible solution over others.

Recurrent processing, pattern completion, and attractors

Recurrence can clean up noisy patterns into correct (clean) ones, as long as the input falls within the correct basin of attraction.

Temporal processing: Recurrent networks

Learning in networks with unrestricted connectivity: Back-propagation through time.

[Figure: “Unfolding” a recurrent network into a feedforward one, time steps t=0 to t=3.]

Repeatedly update unit activations synchronously (first $n_j$, then $a_j$). Store the entire activation history of each unit. Attribute error to sending activations computed earlier in time.

Generalized Delta rule (“back-propagation”)

$$n_j = \sum_i a_i w_{ij} \qquad a_j = \frac{1}{1 + \exp(-n_j)}$$

$$E = \frac{1}{2} \sum_j (t_j - a_j)^2 \qquad n_i \to a_i \to n_j \to a_j \to E$$

[Figure: hidden unit $i$ projects to output unit $j$ through weight $w_{ij}$; $t_j$ is the target for output unit $j$.]

Gradient descent: $\Delta w = -\epsilon \dfrac{\partial E}{\partial w}$
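The following is a minimal plain-Python sketch of the generalized delta rule for a single layer of sigmoid units. It follows the formulas above: $n_j = \sum_i a_i w_{ij}$, the logistic $a_j$, squared error $E$, and $\Delta w = -\epsilon\, \partial E / \partial w$.

The sketch makes several assumptions of its own. There are no bias terms. Weights are stored as `w[i][j]` (sending unit $i$, receiving unit $j$). The function names and example values are hypothetical. Full back-propagation would also pass $\partial E / \partial n_j$ back to earlier layers; this sketch stops at one layer.

```python
import math


def sigmoid(n):
    """Logistic activation: a = 1 / (1 + exp(-n))."""
    return 1.0 / (1.0 + math.exp(-n))


def forward(a_in, w):
    """Net input n_j = sum_i a_i * w[i][j], then a_j = sigmoid(n_j)."""
    n_out = [sum(a_in[i] * w[i][j] for i in range(len(a_in)))
             for j in range(len(w[0]))]
    return [sigmoid(n) for n in n_out]


def error(a_out, t):
    """E = 1/2 * sum_j (t_j - a_j)^2."""
    return 0.5 * sum((tj - aj) ** 2 for tj, aj in zip(t, a_out))


def delta_rule_step(a_in, w, t, eps):
    """Return new weights after one step of delta_w_ij = -eps * dE/dw_ij."""
    a_out = forward(a_in, w)
    # Chain rule along n_j -> a_j -> E: dE/dn_j = -(t_j - a_j) * a_j * (1 - a_j),
    # using the sigmoid derivative da_j/dn_j = a_j * (1 - a_j).
    dE_dn = [-(tj - aj) * aj * (1.0 - aj) for tj, aj in zip(t, a_out)]
    # dn_j/dw_ij = a_i, so dE/dw_ij = dE/dn_j * a_i.
    return [[w[i][j] - eps * dE_dn[j] * a_in[i] for j in range(len(w[0]))]
            for i in range(len(a_in))]


# Hypothetical example values (not from the slides): three sending units, two outputs.
A_IN = [1.0, 0.5, 0.0]
W0 = [[0.1, -0.2], [0.3, 0.0], [0.0, 0.1]]
T = [1.0, 0.0]

w = W0
for _ in range(500):
    w = delta_rule_step(A_IN, w, T, eps=0.5)
print("error before:", error(forward(A_IN, W0), T))
print("error after: ", error(forward(A_IN, w), T))
```

In this example the third sending unit has activation 0, so its outgoing weights never change. The rule assigns error only to senders that were actually active.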

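The constraint-satisfaction and attractor slides do not name a specific network. As one assumed, concrete instance, the sketch below uses a small Hopfield-style network with ±1 units and Hebbian weights. A positive weight means two hypotheses are consistent; a negative weight means they are inconsistent. The energy function scores how well a state satisfies these constraints.

Units are updated asynchronously (one at a time) rather than synchronously, because synchronous updates in this kind of network can oscillate between two states. The patterns and all function names are hypothetical.

```python
def train_hebbian(patterns):
    """Hebbian weights w_ij = sum_p x_i^p x_j^p with no self-connections.
    Positive w_ij: hypotheses i and j are consistent; negative: inconsistent."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def energy(w, x):
    """E = -1/2 * sum_ij w_ij x_i x_j; lower energy = more constraints satisfied."""
    n = len(x)
    return -0.5 * sum(w[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def settle(w, state, max_sweeps=20):
    """Update units one at a time (asynchronously) until no unit changes."""
    x = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(x)):
            h = sum(w[i][j] * x[j] for j in range(len(x)))
            new = x[i] if h == 0 else (1 if h > 0 else -1)  # keep state on a tie
            if new != x[i]:
                x[i], changed = new, True
        if not changed:
            break
    return x


# Two hypothetical, mutually orthogonal +1/-1 patterns stored as attractors.
P1 = [1, 1, 1, 1, -1, -1, -1, -1]
P2 = [1, -1, 1, -1, 1, -1, 1, -1]
W = train_hebbian([P1, P2])
NOISY = [-1] + P1[1:]  # P1 with its first unit flipped

# Settling from the noisy state lands back on P1 (it lies in P1's basin of attraction).
print(settle(W, NOISY) == P1, energy(W, NOISY), energy(W, P1))
```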
Temporal processing: Simple recurrent networks (Elman, 1990)

Fully recurrent network: Computationally intensive to simulate. Must update unit activities multiple times per input.

Simple recurrent network (SRN): Adapt feedforward network to learn temporal tasks. Computationally efficient but functionally limited compared to fully recurrent network.

Sequential prediction tasks

Input is a sequence of discrete elements (e.g., letters, words). Target is next item in the sequence. Self-supervised learning: environment provides both inputs and targets.

Network is guessing; it cannot be completely correct, but it can perform better than chance if the input sequence is structured.

Given a particular sequence of past elements and current input, total error is minimized by generating the probabilities of next elements (i.e., their proportion of occurrence in this context across examples). Across examples, each next element “votes for” its targets; the result is the average.

Example: Train on ABAC, ABCA and ACBB. Output activations along the input path A, B, C:

| Time | Input | A | B | C |
|---|---|---|---|---|
| 0 | A | 0.0 | 0.67 | 0.33 |
| 1 | B | 0.5 | 0.0 | 0.5 |
| 2 | C | 1.0 | 0.0 | 0.0 |

Letter prediction (Elman, 1990)

Network is trained to predict the next letter in text (without spaces).

[Figure: prediction error for each letter of the input text.]

Network discovers word boundaries as peaks in letter prediction error.

Word prediction (Elman, 1990)

Network is presented with a sequence of words (localist representation for each). Sequence is constructed from 2-word or 3-word sentences, with no punctuation or sentence boundaries. Trained to predict the next word within and across sentences [analogous to predicting letters within/across words].
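The example table on the sequential-prediction slide can be reproduced by counting. The sketch below uses each full prefix (past elements plus current input) as the "context" and records the proportion of times each element follows it.

This assumes an idealized learner that can distinguish every distinct prefix; an SRN's context units only approximate that. The function name `next_element_probs` is hypothetical.

```python
from collections import Counter, defaultdict
from fractions import Fraction


def next_element_probs(sequences, alphabet):
    """Map each context (prefix of past elements plus current input) to the
    proportion of times each element follows it across all training sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for k in range(len(seq) - 1):
            context = seq[:k + 1]             # everything seen up to and including time k
            counts[context][seq[k + 1]] += 1  # the element that actually came next
    return {
        context: {s: Fraction(c[s], sum(c.values())) for s in alphabet}
        for context, c in counts.items()
    }


PROBS = next_element_probs(["ABAC", "ABCA", "ACBB"], "ABC")

# Reproduce the example table along the path A, B, C (rows: time 0, 1, 2).
for time, context in enumerate(["A", "AB", "ABC"]):
    row = {s: round(float(p), 2) for s, p in PROBS[context].items()}
    print(time, context[-1], row)
```

Each row is the mean of the one-hot target vectors that followed that context. The mean is the output that minimizes the summed squared error $\frac{1}{2}\sum_j (t_j - a_j)^2$ over those examples. That is why the "voting" description on the slide yields these proportions.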

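The SRN described on the simple-recurrent-network slide can be sketched as a feedforward network with extra "context" units. These context units receive a copy of the previous hidden state, so each input needs only one forward update.

In Elman's scheme the context units are treated as ordinary extra inputs during back-propagation, and error is not propagated back through time. Training is omitted here. The sizes, the uniform weight initialization, the missing bias terms, and all names are assumptions for illustration.

```python
import math
import random


def sigmoid(n):
    return 1.0 / (1.0 + math.exp(-n))


def weighted_sums(weights, inputs):
    """One net input per row of `weights`: sum_k weights[r][k] * inputs[k]."""
    return [sum(w * v for w, v in zip(row, inputs)) for row in weights]


class SimpleRecurrentNetwork:
    """Elman-style SRN sketch: context units hold a copy of the previous hidden state."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.w_in = init(n_hidden, n_in)       # input -> hidden
        self.w_ctx = init(n_hidden, n_hidden)  # context -> hidden
        self.w_out = init(n_out, n_hidden)     # hidden -> output
        self.context = [0.0] * n_hidden

    def reset(self):
        """Clear the context units (e.g., at the start of a new sequence)."""
        self.context = [0.0] * len(self.context)

    def step(self, x):
        """Process one input vector; return (output, hidden) activations."""
        net_hidden = [a + b for a, b in zip(weighted_sums(self.w_in, x),
                                            weighted_sums(self.w_ctx, self.context))]
        hidden = [sigmoid(n) for n in net_hidden]
        output = [sigmoid(n) for n in weighted_sums(self.w_out, hidden)]
        self.context = list(hidden)  # copy-back: one update per input, no settling
        return output, hidden


if __name__ == "__main__":
    # Localist (one-hot) codes for a hypothetical 3-letter alphabet.
    one_hot = {"A": [1, 0, 0], "B": [0, 1, 0], "C": [0, 0, 1]}
    net = SimpleRecurrentNetwork(n_in=3, n_hidden=4, n_out=3)
    for letter in "ABAC":
        output, _ = net.step(one_hot[letter])
        print(letter, [round(a, 3) for a in output])
```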
Sentences

[Figure]

Learned word representations

Hierarchical clustering of hidden representations for words after training.

[Figure: hierarchical clustering of the hidden representations for words.]

Network has learned parts of speech and (rudimentary) semantic similarity.

Generalization to ZOG (man)

Test network on novel input (ZOG) with no overlap with existing words, where ZOG occurs everywhere that MAN did. No additional training. Network produces a hidden representation for ZOG that is highly similar to that of MAN (based on context).
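The slides say only that the word representations were clustered hierarchically. The sketch below assumes agglomerative clustering with Euclidean distance and average linkage; neither choice is stated in the source. One common preprocessing step, averaging each word's hidden vectors across all of its occurrences, is left out.

The 3-unit vectors are invented for illustration and are not output from the network. All names are hypothetical.

```python
import math
from itertools import combinations


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def average_linkage(vectors):
    """Agglomerative clustering of {label: vector}.
    Returns merges as (cluster_a, cluster_b, distance); clusters are tuples of labels."""
    def cluster_distance(c1, c2):
        # Average linkage: mean pairwise distance between members of the two clusters.
        total = sum(euclidean(vectors[a], vectors[b]) for a in c1 for b in c2)
        return total / (len(c1) * len(c2))

    clusters = [(label,) for label in vectors]
    merges = []
    while len(clusters) > 1:
        # Merge the closest pair of clusters.
        c1, c2 = min(combinations(clusters, 2), key=lambda pair: cluster_distance(*pair))
        merges.append((c1, c2, cluster_distance(c1, c2)))
        clusters.remove(c1)
        clusters.remove(c2)
        clusters.append(c1 + c2)
    return merges


# Invented 3-unit "hidden vectors", for illustration only (not network output).
TOY_HIDDEN = {
    "man": [0.90, 0.10, 0.20],
    "woman": [0.85, 0.15, 0.25],
    "zog": [0.88, 0.12, 0.20],
    "eat": [0.10, 0.90, 0.70],
    "chase": [0.15, 0.80, 0.75],
}

for a, b, d in average_linkage(TOY_HIDDEN):
    print(f"merge {a} + {b} at distance {d:.3f}")
```

With these toy vectors, "zog" merges with "man" first and the nouns and verbs form separate branches. This happens only because the vectors were constructed that way. It shows how such a tree is read, not what the network actually learned.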
