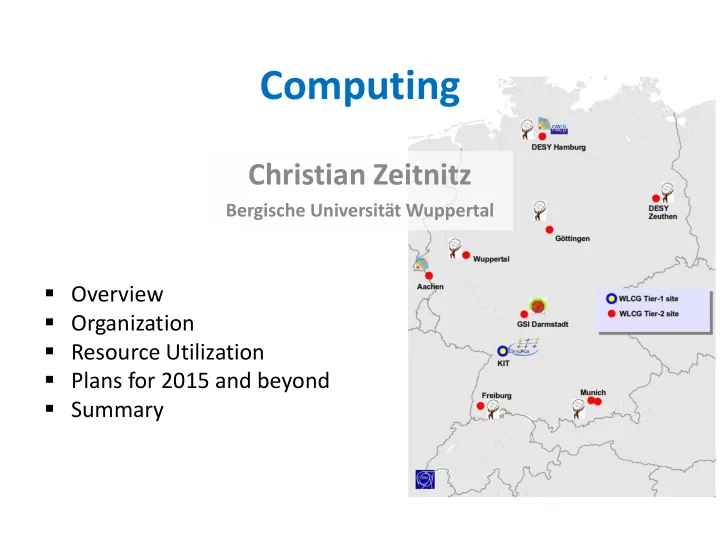

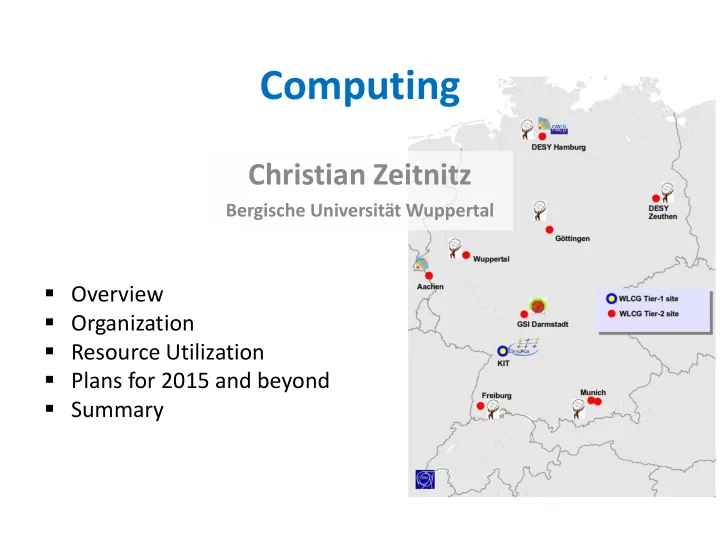

Computing
Christian Zeitnitz
Bergische Universität Wuppertal

Overview
Organization
Resource Utilization
Plans for 2015 and beyond
Summary

Overview
WLCG resource pledges in 2014
o CPU: ~190,000 CPU cores
o Disk: ~180 PByte
German share
o Tier-1: 15%
o Tier-2: 10%
Shares in Germany
o Tier-1: ~60%
o Tier-2: ~40%
Tier sites
o Tier-1: KIT
o Tier-2
  • HGF: DESY, GSI
  • MPI für Physik (MPP)
  • Universities: Aachen, Freiburg, Göttingen, Munich, Wuppertal
C. Zeitnitz - Computing 2

German WLCG-Sites
Tier-1 at KIT
o 4 LHC experiments, Compass, Auger
o Previously Belle, D0, CDF
o One of the biggest Tier-1 centers in the WLCG
o Tier-1 for A, CH, CZ, PL, SK
o Main tasks: data (re-)processing and distribution
Tier-2
o Main tasks: end user analysis, simulation
Additional resources for analysis
o National Analysis Facility
o DESY (4000 cores, 1 PB)
o GSI (ALICE)
Network
o LHCOne to connect Tier-2 and Tier-1 sites
Personnel financed by sites (technical) and funding agencies (experiment specific)

Organization
Cooperation between experiments and computing centers works extremely well
Three bodies
o Overview Board of the Tier-1
  • KIT, funding agencies, experiments, users
o “Technical Advisory Board” of the Tier-1
  • Technical decisions and coordination between experiments and Tier-1
  • Find solutions across experiments
o Grid Project Board of the Terascale Alliance
  • General discussions about the future development
  • Initiate projects across collaborations
  • Organize workshops
The “GridKa School of Computing” grew out of this collaboration as early as 2003

Resource Utilization in Germany
German Tier sites operated very successfully and with high reliability
Very high load on Tier-1 and Tier-2 over the last years
Tier-2 sites delivered substantially (up to 200%) more CPU resources than pledged
Reason
o More CPU required for analysis as well as for simulation
Source of CPU cycles
o Unused Tier-3 resources, or resources from other communities operated within the same cluster
[Figure: Resource usage 7/2012-6/2013]

CPU Shares German Tier-1 and Tier-2 7/2012-6/2014

DESY NAF Utilization
National Analysis Facilities
o End user analysis
o Direct connection to Tier-2 for good data access
[Figure: NAF CPU usage by institutes, Dec. 2009 – Apr. 2013. Chart labels as extracted: +16 German DESY Institutes 31% (39%); Uni-HH 30% (partially own resources)]

What are the CPUs used for?
[Figure: ATLAS, Jan-Jul 2012. Labels: 100,000 jobs (simulation); 30,000 jobs (analysis)]
• Simulation dominates CPU usage on Tier-1/2 centers (~60%)
• Analysis ~20-30%
  o Requires high data throughput → fast disk systems
  o 2/3 of hardware investment spent on disk systems
• Utilization varies substantially
• Not included: NAF and Tier-3 usage

Future Development of Computing Resources
Installed, pledged and required resources
o Up to 2013: installed resources
o 2014/15: Computing Resources Review Board (C-RRB), April 2014
o 2016-19: 20% increase per year for CPU and 15% for disk resources (flat budget)
o Resource requirements already assume a substantial optimization of the computing models and software efficiency
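The yearly growth rates quoted for 2016-19 compound. The following is a minimal sketch of that arithmetic. It assumes that the 2015 level is normalised to 1.0 and that the increase is applied once in each of the four years; the function and variable names are illustrative and do not come from the talk.

```python
# Sketch: compound the quoted flat-budget growth rates over 2016-19.
# Assumption: 2015 capacity is normalised to 1.0 and the rate applies once per year.

def projected_capacity(base, annual_growth, years):
    """Return {year: capacity} after compounding annual_growth for each listed year."""
    return {
        year: base * (1 + annual_growth) ** (i + 1)
        for i, year in enumerate(years)
    }

YEARS = [2016, 2017, 2018, 2019]
cpu = projected_capacity(1.0, 0.20, YEARS)   # 20% per year for CPU
disk = projected_capacity(1.0, 0.15, YEARS)  # 15% per year for disk

for year in YEARS:
    print(f"{year}: CPU x{cpu[year]:.2f}, disk x{disk[year]:.2f}")
```

Under these assumptions, CPU capacity roughly doubles by 2019 (factor of about 2.07) and disk capacity grows by a factor of about 1.75.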

Development of the LHC-Computing
Computing models are changing
o Network performance substantially better than expected
  • More data copied between Tier-centers
o System becomes less hierarchical
o Computing gets more complex and harder to plan
Computing Technology
o Adapt to technological advances in hardware and software
  • Utilization of vectorization and parallelization on standard CPUs and GPUs
  • Utilize Cloud and HPC systems
  • Optimize components for data storage and distribution
Need to improve the overall efficiency by approximately a factor of 4
o Computing resources will increase substantially more slowly than the amount of data!
German groups are contributing to these activities

Current Plans for 2015-2019 in Germany
General concept
o Operation cost and technical personnel are provided by the corresponding institution of the Tier-center (approx. 50% of the total cost)
o Hardware and experiment-specific tasks are funded by external sources
Tier-1 (GridKa at KIT) and Tier-2/NAF at DESY and GSI
o Resource increase according to requirements scrutinized by the C-RRB
o Financed by the Helmholtz Association
  • base funds
  • extra investment funds – not secured yet (application in 2014)
Tier-2 at MPI für Physik
o Resource increase according to requirements scrutinized by the C-RRB
o Investment funds secured
Tier-2 at 5 University sites
o Very heterogeneous financing in the past (mainly Helmholtz Association and BMBF)
o No hardware investment since 2013
o Currently no funding source for hardware for the upcoming years

Options outside the Tier-Structure
Data analysis only possible with very high performance disk systems
o Only Tier-1, Tier-2 and NAF centers are suitable
Simulation could be run on different resources
Opportunistic usage of Tier-3 resources
o Resources at Tier-2 sites already used
o Non-Tier sites
  • Might be possible to cover up to 10-15% of the CPU requirement
  • Cannot be planned, hence partially unreliable
HPC and Cloud resources
o Test beds exist in the WLCG as well as in Germany
o Cloud still too expensive
All options require substantial personnel for development and operation
Could provide up to 20% of the CPU resources, but no solution for the shortage of analysis resources

Summary
Computing was essential for the strong contribution of the German groups to the success of the LHC experiments
The German Tier sites are among the most reliable worldwide and provided substantially more CPU cycles than pledged
Very good cooperation within the German computing community was essential for this success
Future development of the Tier-centers
o Operation cost and technical personnel are secured
o Tier-1 and some Tier-2 (DESY, GSI) will apply for funds from the Helmholtz Association
o No visible funding option for the Tier-2 at the Universities
o Looking at different possibilities to find at least part of the required resources (Tier-3, HPC, Cloud …) – No solution for analysis
German groups need reliable computing for the upcoming LHC Run

Backup

Financing of the Tier Centers in the Past
Tier-1
o Investment: approx. 4 M€/year (BMBF)
o Operation and personnel: approx. 3.8 M€ (HGF PoF I and II)
Tier-2 and NAF
o Average over 2008-12
  • Investment: approx. 2.9 M€/year
  • Operation and personnel: approx. 2.6 M€/year (DESY, GSI, MPP, Universities)
Additional personnel
o Experiment specific tasks
  • 19.5 FTE
  • Financed by: BMBF, DESY, KIT, GSI, MPP
o GRID Tools Development
  • ~10 FTE
  • Financed by: Terascale Alliance, DESY, KIT, GSI, BMBF
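These figures can be checked against the statement in the plans for 2015-2019 that operation and technical personnel account for approximately 50% of the total cost. The sketch below is only an illustration: it assumes the Tier-1 operation figure is also per year (the slide does not say so explicitly) and that investment plus operation make up the total. The dictionary and function names are hypothetical.

```python
# Sketch: share of operation and personnel in the past yearly cost of the Tier centers.
# Figures in M EUR as quoted on the slide.
# Assumption: the Tier-1 operation figure (3.8) is per year, like the other entries.
FINANCING = {
    "Tier-1": {"investment": 4.0, "operation": 3.8},
    "Tier-2 and NAF": {"investment": 2.9, "operation": 2.6},
}

def operation_share(costs):
    """Fraction of the total (investment + operation) spent on operation and personnel."""
    total = costs["investment"] + costs["operation"]
    return costs["operation"] / total

for name, costs in FINANCING.items():
    total = costs["investment"] + costs["operation"]
    print(f"{name}: total {total:.1f} M EUR/year, operation share {operation_share(costs):.0%}")
```

With these inputs the operation share comes out at about 49% for the Tier-1 and about 47% for the Tier-2 and NAF sites, which is in line with the quoted "approx. 50%".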

NAF Utilization at DESY
[Figure: NAF utilization; extracted label: mpp]
University of Hamburg owns part of the NAF resources, hence the large share
