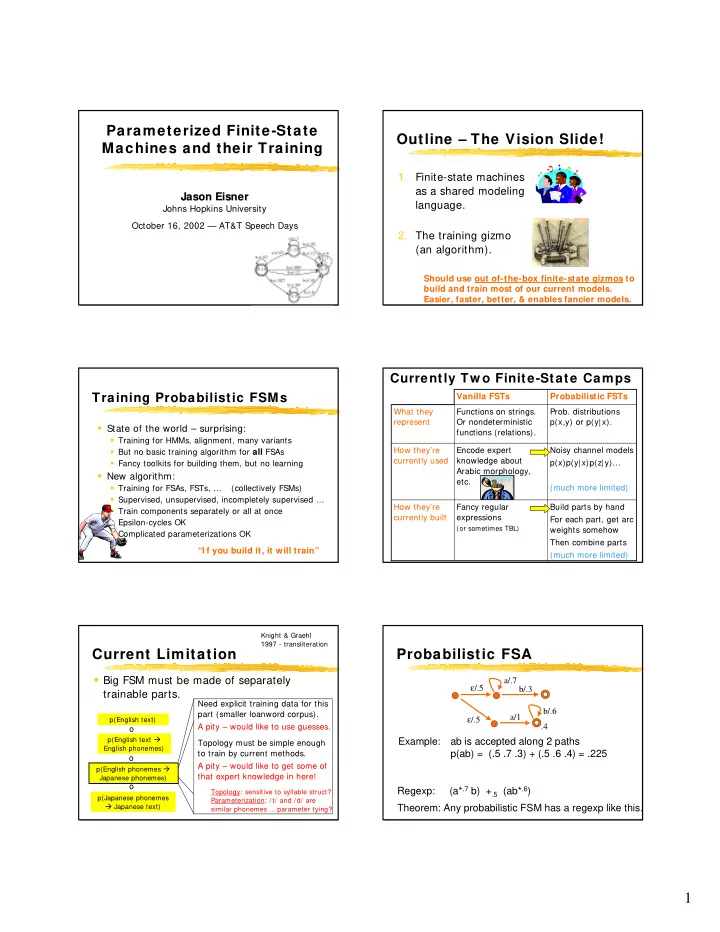

Parameterized Finite-State Outline – The Vision Slide! Machines and their Training 1. Finite-state machines as a shared modeling Jason Eisner Jason Eisner language. Johns Hopkins University October 16, 2002 — AT&T Speech Days 2. The training gizmo (an algorithm). Should use out of-the-box finite-state gizmos to build and train most of our current models. Easier, faster, better, & enables fancier models. Currently Tw o Finite-State Camps Training Probabilistic FSMs Vanilla FSTs Probabilistic FSTs What they Functions on strings. Prob. distributions represent Or nondeterministic p(x,y) or p(y|x). � State of the world – surprising: functions (relations). � Training for HMMs, alignment, many variants How they’re Encode expert Noisy channel models � But no basic training algorithm for all FSAs currently used knowledge about p(x)p(y| x)p(z| y)… � Fancy toolkits for building them, but no learning Arabic morphology, � New algorithm: etc. (much more limited) � Training for FSAs, FSTs, … (collectively FSMs) � Supervised, unsupervised, incompletely supervised … How they’re Fancy regular Build parts by hand � Train components separately or all at once currently built expressions For each part, get arc � Epsilon-cycles OK (or sometimes TBL) weights somehow � Complicated parameterizations OK Then combine parts “I f you build it, it will train” (much more limited) Knight & Graehl 1997 - transliteration Current Limitation Probabilistic FSA � Big FSM must be made of separately a/.7 ε /.5 b/.3 trainable parts. Need explicit training data for this b/.6 part (smaller loanword corpus). ε /.5 a/1 p(English text) A pity – would like to use guesses. .4 o p(English text � Example: ab is accepted along 2 paths Topology must be simple enough English phonemes) to train by current methods. p(ab) = (.5 .7 .3) + (.5 .6 .4) = .225 o A pity – would like to get some of p(English phonemes � that expert knowledge in here! Japanese phonemes) o (a* .7 b) + .5 (ab* .6 ) Regexp: Topology: sensitive to syllable struct? p(Japanese phonemes Parameterization: /t/ and /d/ are � Japanese text) Theorem: Any probabilistic FSM has a regexp like this. similar phonemes … parameter tying? 1

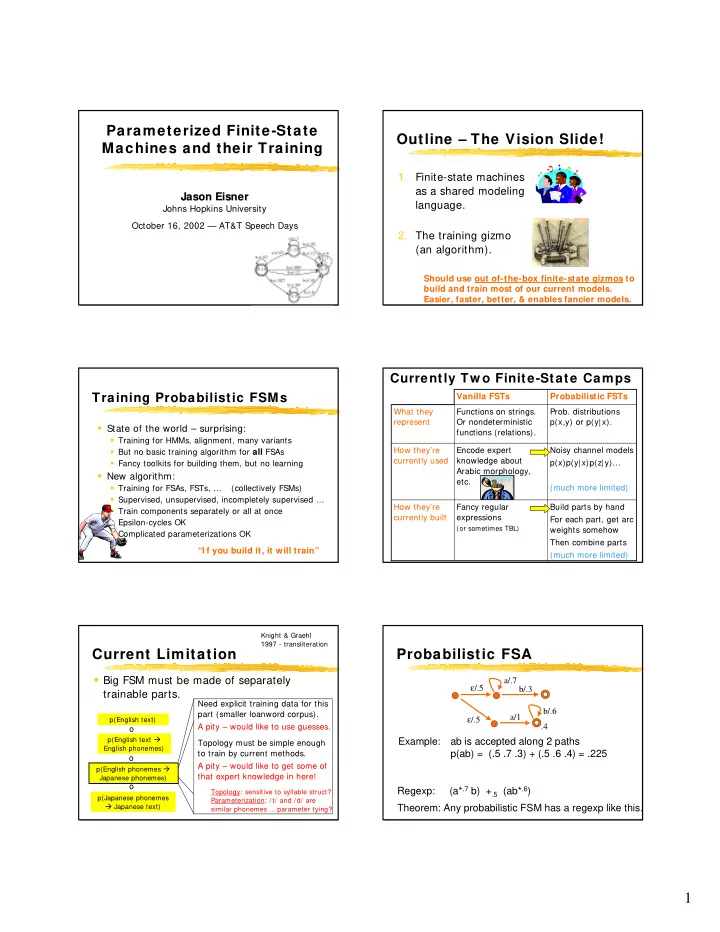

Weights Need Not be Reals Goal: Parameterized FSMs a/ q ε / p b/ r � Parameterized FSM: � An FSM whose arc probabilities depend on b/ y ε / w a/ x parameters: they are formulas. z a/ q a/ r Expert first: Construct Example: ab is accepted along 2 paths ε / p the FSM (topology & b/ (1-q)r weight(ab) = ( p ⊗ ⊗ ⊗ q ⊗ ⊗ ⊗ r ) ⊕ ⊗ ⊗ ⊕ ( w ⊗ ⊕ ⊕ ⊗ x ⊗ ⊗ ⊗ ⊗ ⊗ y ⊗ ⊗ ⊗ z ) ⊗ ⊗ 1-s parameterization). Automatic takes over: ⊗ ⊕ ⊕ * satisfy ε / 1-p If ⊗ a/ q* exp(t+ u) If * satisfy “ “semiring semiring” ” axioms, axioms, Given training data, find the finite - -state constructions state constructions the finite parameter values a/ exp(t+ v) continue to w ork correctly. continue to w ork correctly. that optimize arc probs. Knight & Graehl 1997 - transliteration Goal: Parameterized FSMs Goal: Parameterized FSMs � FSM whose arc probabilities are formulas. � Parameterized FSM: � An FSM whose arc probabilities depend on “Would like to get some of that expert knowledge in here” parameters: they are formulas. p(English text) o a/ .2 a/ .3 Expert first: Construct Use probabilistic regexps like p(English text � (a* .7 b) + .5 (ab* .6 ) … ε / .1 the FSM (topology & b/. 8 English phonemes) parameterization). .7 o If the probabilities are variables p(English phonemes � (a* x b) + y (ab* z ) … Automatic takes over: ε / .9 a/ .44 Japanese phonemes) Given training data, find then arc weights of the compiled o parameter values a/ .56 machine are nasty formulas. p(Japanese phonemes that optimize arc probs. (Especially after minimization!) � Japanese text) Knight & Graehl Knight & Graehl 1997 - transliteration 1997 - transliteration Goal: Parameterized FSMs Goal: Parameterized FSMs � An FSM whose arc probabilities are � An FSM whose arc probabilities are formulas. formulas. “/t/ and /d/ are similar …” “/t/ and /d/ are similar …” p(English text) p(English text) Tied probs for doubling them: Loosely coupled probabilities: o o p(English text � p(English text � /t/:/tt/ /t/:/tt/ English phonemes) English phonemes) p exp p+ q+ r (coronal, stop, o o unvoiced ) p(English phonemes � p(English phonemes � /d/:/dd/ Japanese phonemes) Japanese phonemes) /d/:/dd/ o o exp p+ q+ s (coronal, stop, p p(Japanese phonemes p(Japanese phonemes voiced ) (with normalization) � Japanese text) � Japanese text) 2

Outline of this talk Finite-State Operations 1. What can you build with � Projection GIVES YOU marginal distribution parameterized FSMs? 2. How do you train them? domain( p(x,y) ) p(x) = range( p(x,y) ) p(y) = a : b / 0.3 a : b / 0.3 Finite-State Operations Finite-State Operations � Probabilistic union GIVES YOU mixture model � Probabilistic union GIVES YOU mixture model α p(x) + (1- α )q(x) + α p(x) + 0.3 q(x) p(x) q(x) 0.3 p(x) + 0.7 q(x) = = α 0.3 p(x) p(x) Learn the mixture parameter α ! 1- α q(x) q(x) 0.7 Finite-State Operations Finite-State Operations � Concatenation, probabilistic closure � Composition GIVES YOU chain rule HANDLE unsegmented text p(x|y) o p(y|z) p(x| z) = * 0.3 p(x) q(x) p(x) 0.3 p(x|y) o p(y|z) p(x) p(x,z) p(x) q(x) o z = 0.7 � The most popular statistical FSM operation � Just glue together machines for the different segments, and let them figure out how to align � Cross-product construction with the text 3

Finite-State Operations Finite-State Operations � Intersection GIVES YOU product models � Directed replacement MODELS noise or � e.g., exponential / maxent, perceptron, Naïve Bayes, … postprocessing � Need a normalization op too – computes ∑ x f(x) “pathsum” or “partition function” o D p(x,y) p(x, noisy y) = & q(x) p(x) p(x)* q(x) = noise model defined by dir. replacement ∝ p(A(x)|y) & p(B(x)|y) & & p(y) p NB (y | x) � Resulting machine compensates for noise or postprocessing � Cross-product construction (like composition) Other Useful Finite-State Finite-State Operations Constructions � Complete graphs YIELD n-gram models � Conditionalization (new operation) � Other graphs YIELD fancy language models (skips, caching, etc.) condit( p(x,y) ) p(y | x) = � Compilation from other formalism � FSM: � Wordlist (cf. trie), pronunciation dictionary ... � Resulting machine can be composed with � Speech hypothesis lattice � Decision tree (Sproat & Riley) other distributions: p(y | x) * q(x) � Weighted rewrite rules (Mohri & Sproat) � Construction: � TBL or probabilistic TBL (Roche & Schabes) reciprocal(determinize(domain( ))) o p(x,y) p(x,y) � PCFG (approximation!) (e.g., Mohri & Nederhof) � Optimality theory grammars (e.g., Eisner) not possible for all weighted FSAs � Logical description of set (Vaillette; Klarlund) Regular Expression Calculus Regular Expression Calculus as a Modelling Language as a Modelling Language Many features you wish other languages had! Programming Languages The Finite-State Case Function Function on strings, Programming Languages The Finite-State Case or probability distrib. Function composition Machine composition Nondeterminism Nondeterminism Source code Regular expression Parallelism Compose FSA with FST (can be probabilistic) Object code Finite state machine Function inversion Function inversion Compiler Regexp compiler (cf. Prolog) Optimization of Determinization, Higher-order functions Transform object code object code minimization, pruning (apply operators to it) 4

Regular Expression Calculus Outline as a Modelling Language � Statistical FSMs still done in assembly language 1. What can you build with � Build machines by manipulating arcs and states parameterized FSMs? � For training, 2. How do you train them? � get the weights by some exogenous procedure and patch them onto arcs Hint: Make the finite-state � you may need extra training data for this machinery do the work. � you may need to devise and implement a new variant of EM � Would rather build models declaratively � ((a* .7 b) + .5 (ab* .6 )) ° repl .9 ((a:(b + .3 ε ))*,L,R) How Many Parameters? How Many Parameters? But really I built it as But really I built it as Final machine p(x,z) p(x,y) o p(z|y) p(x,y) o p(z|y) 5 free parameters 5 free parameters Even these 6 numbers could be tied ... or derived by formula from 17 weights a smaller parameter set. – 4 sum-to-one constraints = 13 apparently free parameters 1 free parameter 1 free parameter How Many Parameters? Training a Parameterized FST But really I built it as Given: an expression (or code) to build the FST p(x,y) o p(z|y) from a parameter vector θ Really I built this as Pick an initial value of θ 1. (a:p)* .7 (b: (p + .2 q))* .5 2. Build the FST – implements fast prob. model 3 free parameters 3. Run FST on some training examples 5 free parameters to compute an objective function F( θ ) Collect E-counts or gradient ∇ F( θ ) 4. Update θ to increase F( θ ) 5. 6. Unless we converged, return to step 2 1 free parameter 5

Recommend

More recommend