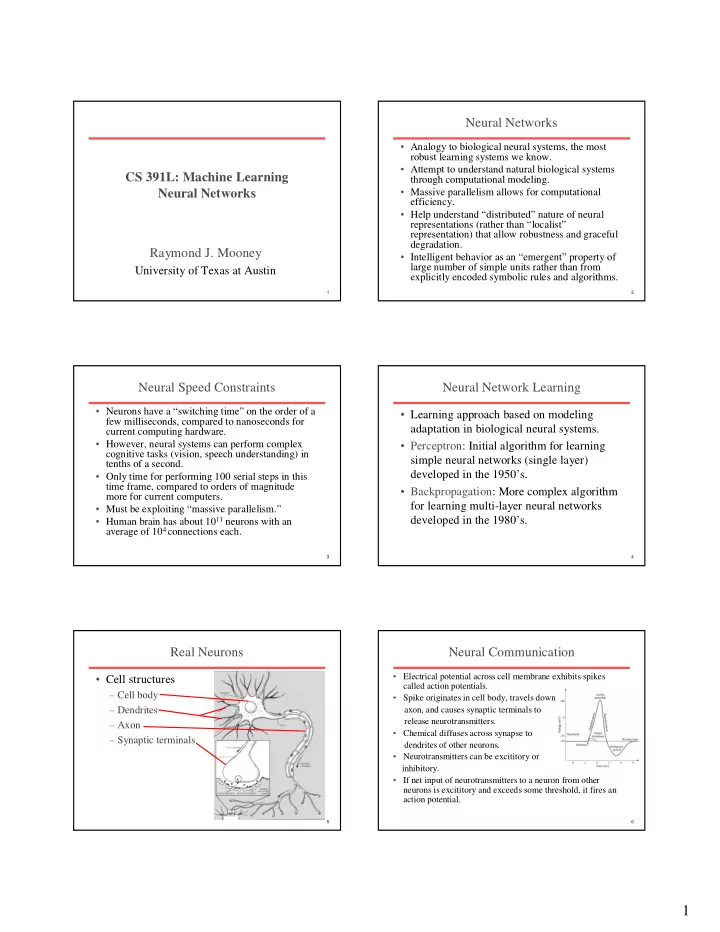

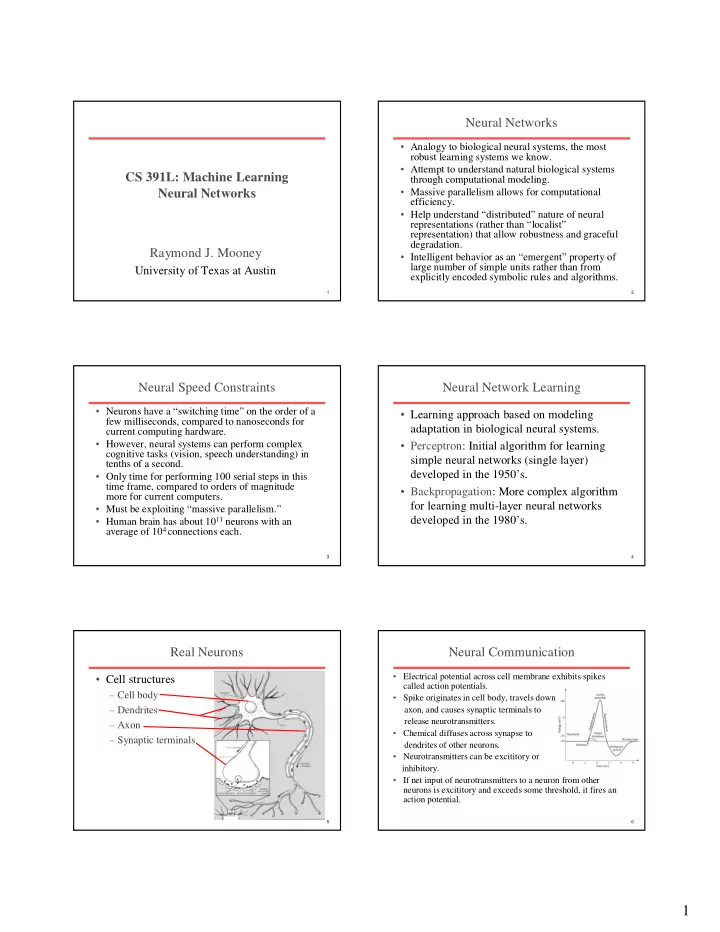

CS 391L: Machine Learning
Neural Networks
Raymond J. Mooney
University of Texas at Austin

Neural Networks
• Analogy to biological neural systems, the most robust learning systems we know.
• Attempt to understand natural biological systems through computational modeling.
• Massive parallelism allows for computational efficiency.
• Help understand the “distributed” nature of neural representations (rather than a “localist” representation) that allows robustness and graceful degradation.
• Intelligent behavior as an “emergent” property of a large number of simple units rather than from explicitly encoded symbolic rules and algorithms.

Neural Speed Constraints
• Neurons have a “switching time” on the order of a few milliseconds, compared to nanoseconds for current computing hardware.
• However, neural systems can perform complex cognitive tasks (vision, speech understanding) in tenths of a second.
• Only time for performing 100 serial steps in this time frame, compared to orders of magnitude more for current computers.
• Must be exploiting “massive parallelism.”
• Human brain has about $10^{11}$ neurons with an average of $10^{4}$ connections each.

Neural Network Learning
• Learning approach based on modeling adaptation in biological neural systems.
• Perceptron: Initial algorithm for learning simple neural networks (single layer) developed in the 1950s.
• Backpropagation: More complex algorithm for learning multi-layer neural networks developed in the 1980s.

Real Neurons
• Cell structures
– Cell body
– Dendrites
– Axon
– Synaptic terminals

Neural Communication
• Electrical potential across cell membrane exhibits spikes called action potentials.
• Spike originates in cell body, travels down axon, and causes synaptic terminals to release neurotransmitters.
• Chemical diffuses across synapse to dendrites of other neurons.
• Neurotransmitters can be excitatory or inhibitory.
• If net input of neurotransmitters to a neuron from other neurons is excitatory and exceeds some threshold, it fires an action potential.

Real Neural Learning
• Synapses change size and strength with experience.
• Hebbian learning: When two connected neurons are firing at the same time, the strength of the synapse between them increases.
• “Neurons that fire together, wire together.”

Artificial Neuron Model
• Model network as a graph with cells as nodes and synaptic connections as weighted edges from node $i$ to node $j$, $w_{ji}$.
• Model net input to cell as:

$$\mathit{net}_j = \sum_i w_{ji}\, o_i$$

• Cell output is:

$$o_j = \begin{cases} 0 & \text{if } \mathit{net}_j < T_j \\ 1 & \text{if } \mathit{net}_j \ge T_j \end{cases}$$

($T_j$ is threshold for unit $j$)

[Figure: unit 1 receiving inputs from units 2 through 6 over weights $w_{12}, \ldots, w_{16}$; step-function plot of $o_j$ against $\mathit{net}_j$, jumping from 0 to 1 at $T_j$]

Neural Computation
• McCulloch and Pitts (1943) showed how such model neurons could compute logical functions and be used to construct finite-state machines.
• Can be used to simulate logic gates:
– AND: Let all $w_{ji}$ be $T_j / n$, where $n$ is the number of inputs.
– OR: Let all $w_{ji}$ be $T_j$.
– NOT: Let threshold be 0, single input with a negative weight.
• Can build arbitrary logic circuits, sequential machines, and computers with such gates.
• Given negated inputs, a two-layer network can compute any boolean function using a two-level AND-OR network.

Perceptron Training
• Assume supervised training examples giving the desired output for a unit given a set of known input activations.
• Learn synaptic weights so that unit produces the correct output for each example.
• Perceptron uses iterative update algorithm to learn a correct set of weights.

Perceptron Learning Rule
• Update weights by:

$$w_{ji} = w_{ji} + \eta\,(t_j - o_j)\, o_i$$

where $\eta$ is the “learning rate” and $t_j$ is the teacher-specified output for unit $j$.
• Equivalent to rules:
– If output is correct, do nothing.
– If output is high, lower weights on active inputs.
– If output is low, increase weights on active inputs.
• Also adjust threshold to compensate:

$$T_j = T_j - \eta\,(t_j - o_j)$$

Perceptron Learning Algorithm
• Iteratively update weights until convergence.

    Initialize weights to random values
    Until outputs of all training examples are correct
        For each training pair, E, do:
            Compute current output o_j for E given its inputs
            Compare current output to target value, t_j, for E
            Update synaptic weights and threshold using learning rule

• Each execution of the outer loop is typically called an epoch.
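The perceptron learning rule and algorithm on these slides can be sketched in Python roughly as follows. This is a minimal illustration and not the course's own code: the function names, the initial weight range, the fixed random seed, and the `max_epochs` safety cap are all assumptions added here.

```python
import random

def output(weights, threshold, inputs):
    """Linear threshold unit: 1 if the net input reaches the threshold, else 0."""
    net = sum(w * o for w, o in zip(weights, inputs))
    return 1 if net >= threshold else 0

def train_perceptron(examples, eta=0.1, max_epochs=1000, seed=0):
    """examples: list of (inputs, target) pairs with targets 0 or 1.

    Returns (weights, threshold, epochs), where epochs is None if the
    (assumed) epoch cap was reached before every example was correct.
    """
    rng = random.Random(seed)           # seeded only to make the sketch repeatable
    n = len(examples[0][0])
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    threshold = rng.uniform(-0.5, 0.5)
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for inputs, target in examples:
            o = output(weights, threshold, inputs)
            if o != target:
                errors += 1
                # w_ji = w_ji + eta * (t_j - o_j) * o_i
                weights = [w + eta * (target - o) * x
                           for w, x in zip(weights, inputs)]
                # T_j = T_j - eta * (t_j - o_j)
                threshold -= eta * (target - o)
        if errors == 0:                 # a full pass with no mistakes
            return weights, threshold, epoch
    return weights, threshold, None

# Two linearly separable concepts: logical AND and OR.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

for name, data in (("AND", AND), ("OR", OR)):
    w, T, epochs = train_perceptron(data)
    print(name, "weights:", w, "threshold:", T, "epochs:", epochs)
```

Because the update is applied only when the output is wrong, the three verbal rules (do nothing, lower, raise) fall out of the sign of `target - o`.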

Perceptron as a Linear Separator
• Since perceptron uses linear threshold function, it is searching for a linear separator that discriminates the classes.

$$w_{12}\, o_2 + w_{13}\, o_3 > T_1$$

$$o_3 > -\frac{w_{12}}{w_{13}}\, o_2 + \frac{T_1}{w_{13}} \qquad (\text{for } w_{13} > 0)$$

Or hyperplane in $n$-dimensional space.

[Figure: separating line in the $o_2$, $o_3$ plane]

Concept Perceptron Cannot Learn
• Cannot learn exclusive-or, or parity function in general.

[Figure: exclusive-or in the $o_2$, $o_3$ plane; positive (+) and negative (–) examples sit on opposite corners of the unit square, so no single line separates them]

Perceptron Limits
• System obviously cannot learn concepts it cannot represent.
• Minsky and Papert (1969) wrote a book analyzing the perceptron and demonstrating many functions it could not learn.
• These results discouraged further research on neural nets, and symbolic AI became the dominant paradigm.

Perceptron Convergence and Cycling Theorems
• Perceptron convergence theorem: If the data is linearly separable and therefore a set of weights exists that is consistent with the data, then the Perceptron algorithm will eventually converge to a consistent set of weights.
• Perceptron cycling theorem: If the data is not linearly separable, the Perceptron algorithm will eventually repeat a set of weights and threshold at the end of some epoch and therefore enter an infinite loop.
– By checking for repeated weights+threshold, one can guarantee termination with either a positive or negative result.

Perceptron as Hill Climbing
• The hypothesis space being searched is a set of weights and a threshold.
• Objective is to minimize classification error on the training set.
• Perceptron effectively does hill-climbing (gradient descent) in this space, changing the weights a small amount at each point to decrease training set error.
• For a single model neuron, the space is well behaved with a single minimum.

[Figure: training error plotted against weights, with a single minimum at 0 error]

Perceptron Performance
• Linear threshold functions are restrictive (high bias) but still reasonably expressive; more general than:
– Pure conjunctive
– Pure disjunctive
– M-of-N (at least M of a specified set of N features must be present)
• In practice, converges fairly quickly for linearly separable data.
• Can effectively use even incompletely converged results when only a few outliers are misclassified.
• Experimentally, Perceptron does quite well on many benchmark data sets.
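The termination check suggested by the cycling theorem can be sketched as below. To make "repeated weights+threshold" an exact comparison, this sketch assumes integer inputs, zero initial weights, and a learning rate of 1, so every state stays an integer; these choices, the function names, and the `max_epochs` guard are illustrative assumptions rather than part of the slides.

```python
def output(weights, threshold, inputs):
    """Linear threshold unit: 1 if the net input reaches the threshold, else 0."""
    net = sum(w * o for w, o in zip(weights, inputs))
    return 1 if net >= threshold else 0

def perceptron_with_cycle_check(examples, max_epochs=10000):
    """Return (separable, weights, threshold).

    separable is True if an epoch finishes with no errors, and False if
    the weights+threshold at the end of an epoch repeat an earlier state
    (the algorithm would then loop forever).
    """
    n = len(examples[0][0])
    weights, threshold = [0] * n, 0        # integer state, learning rate 1
    seen = {(tuple(weights), threshold)}   # states observed at epoch boundaries
    for _ in range(max_epochs):
        errors = 0
        for inputs, target in examples:
            o = output(weights, threshold, inputs)
            if o != target:
                errors += 1
                weights = [w + (target - o) * x
                           for w, x in zip(weights, inputs)]
                threshold -= (target - o)
        if errors == 0:
            return True, weights, threshold
        state = (tuple(weights), threshold)
        if state in seen:                  # same state again: stuck in a cycle
            return False, weights, threshold
        seen.add(state)
    raise RuntimeError("epoch cap reached without convergence or a repeat")

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

print("XOR separable?", perceptron_with_cycle_check(XOR)[0])
print("AND separable?", perceptron_with_cycle_check(AND)[0])
```

With floating-point weights an exact repeat may never be observed because of rounding, which is why the sketch restricts itself to integer arithmetic.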

Multi-Layer Networks
• Multi-layer networks can represent arbitrary functions, but an effective learning algorithm for such networks was thought to be difficult.
• A typical multi-layer network consists of an input, hidden and output layer, each fully connected to the next, with activation feeding forward.

[Figure: input, hidden, and output layers, with activation flowing from input to output]

• The weights determine the function computed. Given an arbitrary number of hidden units, any boolean function can be computed with a single hidden layer.

Hill-Climbing in Multi-Layer Nets
• Since “greed is good” perhaps hill-climbing can be used to learn multi-layer networks in practice although its theoretical limits are clear.
• However, to do gradient descent, we need the output of a unit to be a differentiable function of its input and weights.
• Standard linear threshold function is not differentiable at the threshold.

[Figure: step-function plot of $o_i$ against $\mathit{net}_j$, jumping from 0 to 1 at $T_j$]

Differentiable Output Function
• Need non-linear output function to move beyond linear functions.
– A multi-layer linear network is still linear.
• Standard solution is to use the non-linear, differentiable sigmoidal “logistic” function:

$$o_j = \frac{1}{1 + e^{-(\mathit{net}_j - T_j)}}$$

[Figure: sigmoid plot of $o_j$ against $\mathit{net}_j$, rising from 0 to 1 and centered at $T_j$]

Can also use tanh or Gaussian output function.

Gradient Descent
• Define objective to minimize error:

$$E(W) = \sum_{d \in D} \sum_{k \in K} (t_{kd} - o_{kd})^2$$

where $D$ is the set of training examples, $K$ is the set of output units, $t_{kd}$ and $o_{kd}$ are, respectively, the teacher and current output for unit $k$ for example $d$.
• The derivative of a sigmoid unit with respect to net input is:

$$\frac{\partial o_j}{\partial \mathit{net}_j} = o_j (1 - o_j)$$

• Learning rule to change weights to minimize error is:

$$\Delta w_{ji} = -\eta \frac{\partial E}{\partial w_{ji}}$$

Backpropagation Learning Rule
• Each weight changed by:

$$\Delta w_{ji} = \eta\, \delta_j\, o_i$$

$$\delta_j = o_j (1 - o_j)(t_j - o_j) \quad \text{if } j \text{ is an output unit}$$

$$\delta_j = o_j (1 - o_j) \sum_k \delta_k w_{kj} \quad \text{if } j \text{ is a hidden unit}$$

where $\eta$ is a constant called the learning rate, $t_j$ is the correct teacher output for unit $j$, and $\delta_j$ is the error measure for unit $j$.

Error Backpropagation
• First calculate error of output units and use this to change the top layer of weights.

Current output: $o_j = 0.2$
Correct output: $t_j = 1.0$
Error: $\delta_j = o_j (1 - o_j)(t_j - o_j) = 0.2\,(1 - 0.2)(1 - 0.2) = 0.128$
Update weights into $j$: $\Delta w_{ji} = \eta\, \delta_j\, o_i$

[Figure: three-layer network with input, hidden, and output layers; the output-layer error is computed first]
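The backpropagation rule above can be sketched for a network with one hidden layer and a single output unit as follows. This is an illustrative sketch only: all thresholds $T_j$ are assumed fixed at 0 for brevity, and the function names and the example weights are hypothetical values chosen here, not taken from the slides.

```python
import math

def sigmoid(net):
    """Logistic output function with the threshold assumed to be 0."""
    return 1.0 / (1.0 + math.exp(-net))

def forward(x, w_hidden, w_out):
    """Feed activation forward; w_hidden[j] holds the weights into hidden unit j."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    out = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, out

def output_delta(o, t):
    """delta_j = o_j (1 - o_j) (t_j - o_j) for an output unit."""
    return o * (1 - o) * (t - o)

def backprop_step(x, t, w_hidden, w_out, eta=0.5):
    """One weight update for a single training example; returns new weights."""
    hidden, out = forward(x, w_hidden, w_out)
    d_out = output_delta(out, t)
    # Hidden unit: delta_j = o_j (1 - o_j) * sum_k delta_k w_kj
    # (a single output unit here, so the sum has one term).
    d_hidden = [h * (1 - h) * d_out * w for h, w in zip(hidden, w_out)]
    # Delta w_ji = eta * delta_j * o_i, using deltas computed from the old weights.
    new_w_out = [w + eta * d_out * h for w, h in zip(w_out, hidden)]
    new_w_hidden = [[w + eta * d * xi for w, xi in zip(row, x)]
                    for row, d in zip(w_hidden, d_hidden)]
    return new_w_hidden, new_w_out

# The worked example from the slide: o_j = 0.2, t_j = 1.0 gives delta_j of about 0.128.
print(round(output_delta(0.2, 1.0), 6))

# Hypothetical 2-2-1 network and one training example.
w_hidden = [[0.1, -0.2], [0.3, 0.4]]
w_out = [0.2, -0.1]
x, t = (1.0, 0.0), 1.0

_, before = forward(x, w_hidden, w_out)
w_hidden, w_out = backprop_step(x, t, w_hidden, w_out)
_, after = forward(x, w_hidden, w_out)
print("squared error before:", (t - before) ** 2, "after:", (t - after) ** 2)
```

Computing every $\delta$ before changing any weight matters: the hidden-unit deltas must use the output weights as they were during the forward pass.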
